I am new to machine learning. I am building a Streamlit app for multiclass classification using an artificial neural network (ANN). My question is about the ANN model, not about Streamlit. I know I could use MLPClassifier, but I want to build and train my own model, so I used the following code to analyze the following data:


import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import tensorflow as tf

import seaborn as sns

import warnings

warnings.filterwarnings('ignore')

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation

from tensorflow.keras.callbacks import EarlyStopping

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.layers import Dropout

from sklearn.metrics import classification_report, confusion_matrix

from sklearn.metrics import roc_auc_score, roc_curve

from sklearn.model_selection import cross_val_score, cross_validate

from sklearn.model_selection import GridSearchCV

df=pd.read_csv("./Churn_Modelling.csv")

#Drop Unwanted features

df.drop(columns=['Surname','RowNumber','CustomerId'],inplace=True)

df.head()

#Label Encoding of Categ features

df['Geography']=df['Geography'].map({'France':0,'Spain':1,'Germany':2})

df['Gender']=df['Gender'].map({'Male':0,'Female':1})

#Input & Output selection

X=df.drop('Exited',axis=1)

#map class names to 0-based integer class indices

Y = df['Exited'].map({'yes':0, 'no':1, 'maybe':2})

#train test split

from sklearn.model_selection import train_test_split

X_train,X_test,Y_train,Y_test=train_test_split(X,Y,test_size=0.3,random_state=12,stratify=Y)

#scaling

from sklearn.preprocessing import StandardScaler

ss = StandardScaler()

X_train = ss.fit_transform(X_train)

#Y_train is left unscaled: class labels must stay integer class indices

X_test=ss.transform(X_test)

# build a model

#build ANN

model=Sequential()

model.add(Dense(units=30,activation='relu',input_shape=(X.shape[1],)))

model.add(Dropout(rate = 0.2))

model.add(Dense(units=18,activation='relu'))

model.add(Dropout(rate = 0.1))

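#one softmax output unit per class; sparse_categorical_crossentropy expects integer labels 0, 1, 2

model.add(Dense(units=3,activation='softmax'))

model.compile(optimizer = 'adam', loss = 'sparse_categorical_crossentropy', metrics = ['accuracy'])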

#create callback : -

cb=EarlyStopping(

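monitor="val_loss", #loss on the validation data

min_delta=0.00001, #smallest change in val_loss that counts as an improvement

patience=15, #epochs without improvement before training stops

verbose=1,

mode="auto", #direction is inferred from the monitored metric (minimize for a loss)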

baseline=None,

restore_best_weights=False

)

trained_model=model.fit(X_train,Y_train,epochs=10,

validation_data=(X_test,Y_test),

callbacks=[cb],

batch_size=10

)

train_loss, train_acc = model.evaluate(X_train,Y_train)

print("Training accuracy :",train_acc)

print("Training loss :",train_loss)

test_loss, test_acc = model.evaluate(X_test,Y_test)

print("Testing accuracy :",test_acc)

print("Testing loss :",test_loss)

y_pred_prob=model.predict(X_test)

y_pred=np.argmax(y_pred_prob, axis=-1)

print(classification_report(Y_test,y_pred))

print(confusion_matrix(Y_test,y_pred))

plt.figure(figsize=(7,5))

sns.heatmap(confusion_matrix(Y_test,y_pred),annot=True,cmap="OrRd_r",

fmt="d",cbar=True,

annot_kws={"fontsize":15})

plt.xlabel("Predicted Result")

plt.ylabel("Actual Result")

plt.show()

Then I will save the model, either with pickle as follows:

# import pickle

# with open("./my_model.pkl", mode = "wb") as pickle_out:

#     pickle.dump(model, pickle_out)

or with Keras as follows:

model.save('./my_model.h5')

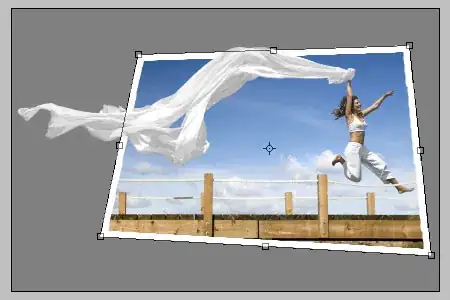

Now I want to predict the label (i.e. 'yes', 'no', 'maybe', etc.) of the output variable 'Exited' from new input values (as shown in the following table) that will be provided by a user:


My question is: how should I save and load the model, and then predict the labels for the 'Exited' variable, so that the empty cells of the Exited column are filled in automatically with the respective labels (i.e. 'yes', 'no', 'maybe', etc.)?
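To make the goal concrete, here is a rough sketch of the prediction step I have in mind. It is not a confirmed solution. It assumes the labels were encoded as 0, 1, 2 for training, that the model outputs one probability per class, and that the fitted scaler is saved next to the model. The names `fill_exited`, `INDEX_TO_LABEL`, `StubModel`, `StubScaler`, and the file name `./scaler.pkl` are hypothetical, and the sample rows are made up. The stubs only stand in for the loaded Keras model and the fitted `StandardScaler` so that the snippet runs on its own.

```python
import numpy as np
import pandas as pd

# Assumed inverse of the training label encoding (0-based class indices).
INDEX_TO_LABEL = {0: "yes", 1: "no", 2: "maybe"}


def fill_exited(new_df, model, scaler, feature_cols):
    """Return a copy of new_df whose 'Exited' column holds predicted labels."""
    # Apply the same categorical encoding that was used before training.
    features = new_df[feature_cols].copy()
    features["Geography"] = features["Geography"].map(
        {"France": 0, "Spain": 1, "Germany": 2})
    features["Gender"] = features["Gender"].map({"Male": 0, "Female": 1})

    # Reuse the scaler fitted on the training data; do not refit it here.
    scaled = scaler.transform(features)

    # One row of class probabilities per input row; argmax picks the class.
    probs = np.asarray(model.predict(scaled))
    class_idx = probs.argmax(axis=-1)

    result = new_df.copy()
    result["Exited"] = [INDEX_TO_LABEL[int(i)] for i in class_idx]
    return result


# In the real app (assumption) these would be loaded from disk instead:
#   model = tf.keras.models.load_model("./my_model.h5")
#   scaler = joblib.load("./scaler.pkl")  # saved with joblib.dump(ss, "./scaler.pkl")
class StubScaler:
    def transform(self, x):
        return np.asarray(x, dtype=float)


class StubModel:
    def predict(self, x):
        # Fake probabilities: row i is assigned class i % 3 with certainty.
        return np.eye(3)[np.arange(len(x)) % 3]


# Made-up user input with an empty 'Exited' column.
new_rows = pd.DataFrame({
    "CreditScore": [619, 608, 502],
    "Geography": ["France", "Spain", "Germany"],
    "Gender": ["Female", "Female", "Male"],
    "Age": [42, 41, 42],
    "Exited": [None, None, None],
})
feature_cols = ["CreditScore", "Geography", "Gender", "Age"]

filled = fill_exited(new_rows, StubModel(), StubScaler(), feature_cols)
print(filled[["Geography", "Gender", "Exited"]])
```

In the Streamlit app, `new_rows` would come from the user's input, and `feature_cols` would list every column the model was trained on, in the same order.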

I will appreciate your insightful comments on this post.