We created a GKE cluster with a public endpoint. The service account used by the GKE cluster and its node pool has the following roles:

- `roles/compute.admin`
- `roles/compute.viewer`
- `roles/compute.securityAdmin`
- `roles/iam.serviceAccountUser`
- `roles/iam.serviceAccountAdmin`
- `roles/resourcemanager.projectIamAdmin`
- `roles/container.admin`
- `roles/artifactregistry.admin`
- `roles/storage.admin`

The node pool of the GKE cluster has the following OAuth scopes:

- `https://www.googleapis.com/auth/cloud-platform`
- `https://www.googleapis.com/auth/devstorage.read_write`
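As a sanity check on the scopes: `cloud-platform` covers all Google Cloud APIs, and `devstorage.read_write` covers reading and writing Cloud Storage objects, so either one should be enough for the scope side of the request. The Python sketch below expresses that check. The helper name `scopes_allow_gcs_rw` and the set of accepted scopes are illustrative assumptions, not part of our actual setup. Note that scopes only cap what the node's credentials may do; IAM roles still have to grant the permission itself.

```python
# Hypothetical helper (not part of our setup): checks whether a node pool's
# OAuth scopes could permit GCS object reads and writes. Scopes only cap
# what the node's credentials may do; IAM roles must still grant access.
STORAGE_RW_SCOPES = {
    "https://www.googleapis.com/auth/cloud-platform",
    "https://www.googleapis.com/auth/devstorage.full_control",
    "https://www.googleapis.com/auth/devstorage.read_write",
}


def scopes_allow_gcs_rw(scopes):
    """Return True if any scope in `scopes` covers GCS read/write."""
    return any(scope.strip() in STORAGE_RW_SCOPES for scope in scopes)


# The two scopes configured on our node pool.
node_pool_scopes = [
    "https://www.googleapis.com/auth/cloud-platform",
    "https://www.googleapis.com/auth/devstorage.read_write",
]
print(scopes_allow_gcs_rw(node_pool_scopes))  # True
```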

The private GCS bucket has the same service account added as a principal with the Storage Admin role (`roles/storage.admin`).

When we try to read from or write to this bucket from a GKE pod, we get the following errors:

# Read

AccessDeniedException: 403 Caller does not have storage.objects.list access to the Google Cloud Storage bucket

# Write

AccessDeniedException: 403 Caller does not have storage.objects.create access to the Google Cloud Storage object

We also checked this thread, but its solution relies on credentials and didn't help us. We would like to read from and write to the bucket without managing a service account key or any other kind of credentials.

What are we missing here?

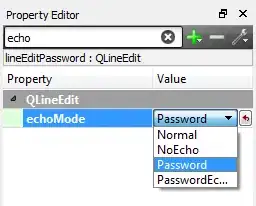

UPDATE: Following @boredabdel's suggestion, we checked and found that Workload Identity was already enabled on both the GKE cluster and the node pool. We create our cluster with this module, which enables it by default. Even so, we are still getting the same access errors.
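With Workload Identity enabled on the node pool, pods authenticate through the GKE metadata server as the Google service account (GSA) mapped to their Kubernetes service account (KSA), not as the node pool's service account. That mapping has two parts: the GSA grants `roles/iam.workloadIdentityUser` to the KSA's principal, and the KSA carries the `iam.gke.io/gcp-service-account` annotation. The Python sketch below builds both values. It assumes the default workload pool `PROJECT_ID.svc.id.goog`; the helper names and the project, namespace, KSA, and GSA names are hypothetical placeholders.

```python
# Hypothetical helpers: build the two values GKE Workload Identity uses to
# map a Kubernetes service account (KSA) to a Google service account (GSA).
# Assumes the default workload pool "<project>.svc.id.goog".


def workload_identity_member(project_id, namespace, ksa_name):
    """Return the IAM principal for a KSA; the GSA must grant it
    roles/iam.workloadIdentityUser."""
    return f"serviceAccount:{project_id}.svc.id.goog[{namespace}/{ksa_name}]"


def ksa_annotation(gsa_email):
    """Return the annotation to put on the KSA so pods run as the GSA."""
    return {"iam.gke.io/gcp-service-account": gsa_email}


# Placeholder values, not taken from our real setup.
member = workload_identity_member("my-project", "default", "my-ksa")
print(member)  # serviceAccount:my-project.svc.id.goog[default/my-ksa]
print(ksa_annotation("gcs-sa@my-project.iam.gserviceaccount.com"))
```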

Cluster Security:

NodePool Security: