I've had a hard time following links with Scrapy Playwright while crawling a dynamic website.

I have read the issues in the Scrapy Playwright GitHub repository looking for a solution to my problem, but I haven't found one yet.

Here is what I want to do:

I want to write a crawl spider that collects all available odds information from the https://oddsportal.com/ website. Some pages on the site are rendered with JavaScript, so I decided to use Scrapy Playwright.
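Scrapy Playwright only renders requests if its download handler is installed and Twisted runs on the asyncio reactor (the `install_reactor` lines in the spider below are commented out). In case these are not already in the project, a minimal `settings.py` fragment along the lines of the scrapy-playwright README could look like this; `PLAYWRIGHT_BROWSER_TYPE` is optional and shown only as an example.

```python
# settings.py (fragment): route HTTP(S) downloads through scrapy-playwright
DOWNLOAD_HANDLERS = {
    "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
    "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
}

# scrapy-playwright needs the asyncio-based Twisted reactor
TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

# Optional: which browser Playwright launches ("chromium" is the default)
PLAYWRIGHT_BROWSER_TYPE = "chromium"
```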

Step 1.

I send a request to the website's results URL (https://oddsportal.com/results/) to get the page content, i.e. all the links that I need to follow.

```python
import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule
from scrapy_playwright.page import PageMethod

# from scrapy.utils.reactor import install_reactor
# install_reactor('twisted.internet.asyncioreactor.AsyncioSelectorReactor')


class OddsportalSpider(CrawlSpider):
    name = 'oddsportal'
    allowed_domains = ['oddsportal.com']
    # start_urls = ['https://oddsportal.com/results/']

    def start_requests(self):
        url = 'https://oddsportal.com/results/'
        yield scrapy.Request(
            url=url,
            meta=dict(
                playwright=True,
                playwright_context=1,
                playwright_include_page=True,
                playwright_page_methods=[
                    PageMethod('wait_for_selector', 'div#col-content'),
                ],
            ),
        )
```

Step 2.

Now I need to follow all of the links returned above:

```python
    def set_playwright_true(request, response):
        request.meta["playwright"] = True
        return request

    rules = (
        Rule(
            LinkExtractor(restrict_xpaths="//div[@id='archive-tables']//tbody/tr[@xsid=1]/td/a"),
            callback='parse_item',
            follow=False,
            process_request=set_playwright_true,
        ),
    )

    async def parse_item(self, response):
        item = {}
        item['text'] = response.url
        yield item
```

When I run the script above, it doesn't get all the links from https://oddsportal.com/results/. What am I doing wrong? I suspect I am not following the links correctly. The `restrict_xpaths` in the `LinkExtractor` is correct: without Playwright I am able to extract the links, but then the response does not contain the full content of the page.

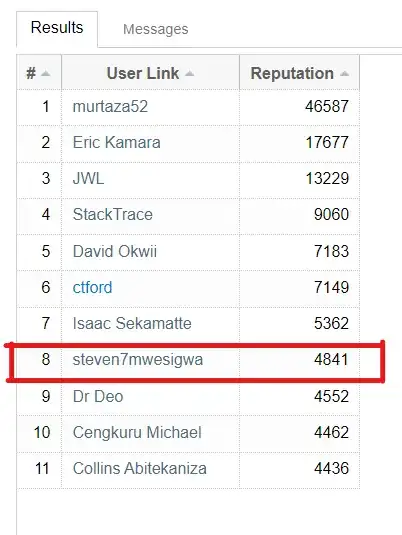

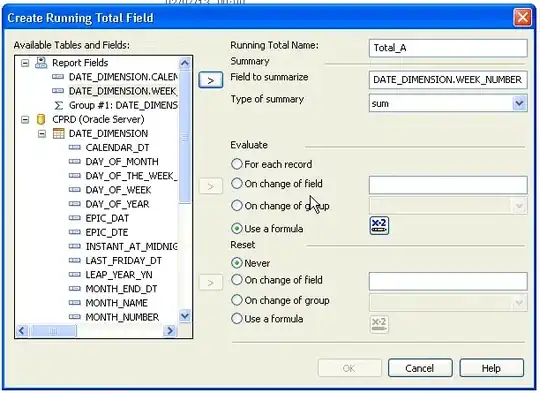

Every link in the first image leads to a page like the one in the second image, which is rendered with JavaScript.