I'm trying to run a synapse pipeline from a synapse notebook, is there any way to do it?

My synapse pipeline has parameters, - if it's possible to run it from a notebook then how to pass the params?

I'm trying to run a synapse pipeline from a synapse notebook, is there any way to do it?

My synapse pipeline has parameters, - if it's possible to run it from a notebook then how to pass the params?

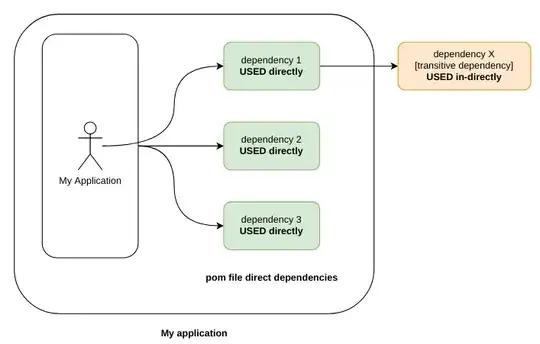

As suggested by Wbob, I would also recommend not doing this (calling synapse pipelines from synapse notebook) because of the spark pools.

But if you want to give a try, you can go through this approach other than REST API.

In this, I have added a Storage event trigger to synapse pipeline and it triggers by writing to the storage using synapse notebook code.

For this you required a Storage account and an empty container.

First create a linked service for ADLS Gen2 in the synapse and a Storage event trigger to the synapse pipeline.

In the Synapse notebook, write a json file to ADLS which triggers the pipeline.

You can pass the parameters from synapse notebook using the same json.

Code in the Synapse notebook with sample parameters:

from pyspark.sql.types import *

myjson=[{"Name":"Rakesh","Age":22,"Marks":90}]

schema2=StructType([StructField('Name',StringType(),True),StructField('Age',IntegerType(),True),StructField('Marks',IntegerType(),True)])

df = spark.createDataFrame(data=myjson,schema=schema2)

df.show(truncate=False)

df2=df.toPandas()

df2.reset_index().to_json('abfss://input/myjson.json', storage_options = {'linked_service' : 'AzureDataLakeStorage1'},orient='records')

Here input is my container and AzureDataLakeStorage1 is my linked service. orient='records' gives the indexes with the parameters.

This will create the myjson.json file in the ADLS container like below.

The above file triggers the Synapse pipeline. To use the parameters that we pass, use lookup activity in the pipeline.

Lookup activity:

In the lookup, don't give any dataset value in the dataset. give the wildcard path file as *.json.

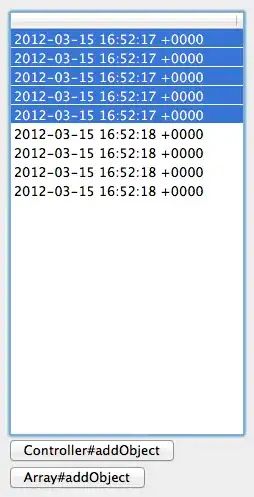

Look up will give the result like this:

You can use the expression @activity('Lookup1').output.value[0].Age and

@activity('Lookup1').output.value[0].Name like these to access the parameters with their own data type in the synapse pipeline.

For Example in set variable activity:

Output:

As variables only supports Strings, Booleans and Arrays I have converted it to string for showing the output. You can use those parameters wherever you want.