I have a DataFrame in PySpark with columns like Quantity1, Quantity2, ..., QuantityN. I want to sum up the values of 5 consecutive Quantity fields into each bucket. This seems to call for a column-wise Lead or Lag, but Lead/Lag work across rows, and I haven't found any way to apply them across columns. If anyone has an idea or an alternative way of doing this in PySpark or SQL, please suggest it.
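Lead/lag operate over rows within a window, not over columns. One workaround is to build the bucket expressions in plain Python from the column names and pass them to `DataFrame.selectExpr`. The following is a minimal sketch under a few assumptions: the columns are named `Quantity1` to `QuantityM` with no gaps, and only full windows are produced. `bucket_sum_exprs` and its parameters are hypothetical names.

```python
def bucket_sum_exprs(num_cols, bucket_size, prefix="Quantity", out_prefix="Bucket"):
    """Build Spark SQL expressions such as 'Quantity1 + Quantity2 AS Bucket1'.

    Assumes (hypothetically) columns named <prefix>1 .. <prefix><num_cols>.
    Only full windows are produced, so there are num_cols - bucket_size + 1 buckets.
    """
    if bucket_size < 1 or bucket_size > num_cols:
        raise ValueError("bucket_size must be between 1 and num_cols")
    exprs = []
    for start in range(1, num_cols - bucket_size + 2):
        # Columns in this bucket's window: start .. start + bucket_size - 1
        terms = [f"{prefix}{i}" for i in range(start, start + bucket_size)]
        exprs.append(" + ".join(terms) + f" AS {out_prefix}{start}")
    return exprs


# Example: 4 quantity columns, bucket size 2
for e in bucket_sum_exprs(4, 2):
    print(e)
```

With a DataFrame `df`, something like `df.selectExpr("*", *bucket_sum_exprs(4, 2))` should keep the original columns and append Bucket1 to Bucket3. In Spark SQL, `NULL + x` is `NULL`, so if a Quantity column can be null you may want to wrap each term as `coalesce(QuantityK, 0)`.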

Example: Input dataset

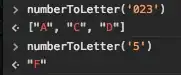

Bucket Size=2

Explanation: Bucket1 = Qty1+Qty2

Bucket2 = Qty2+Qty3

Bucket3 = Qty3+Qty4

BucketN = QtyN+Qty(N+1)
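The rule above is a sliding-window sum across one row's quantity values. The plain-Python sketch below applies it to a single row. It assumes that trailing buckets whose window would run past the last column are simply dropped, because BucketN needs a Qty(N+1) that does not exist for the final column. `bucket_sums` and the sample values are hypothetical.

```python
def bucket_sums(quantities, bucket_size):
    """Sliding-window sums over one row's quantity values.

    Bucket i (1-based) = quantities[i-1] + ... + quantities[i-1 + bucket_size - 1].
    Assumption: only full windows are returned; partial trailing buckets are dropped.
    """
    return [
        sum(quantities[start:start + bucket_size])
        for start in range(len(quantities) - bucket_size + 1)
    ]


row = [10, 20, 30, 40]  # hypothetical Qty1..Qty4 for one row
print(bucket_sums(row, 2))  # [30, 50, 70]
```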