I'm following the answer to this question and this scikit-learn tutorial to remove artifacts from an EEG signal. They seem simple enough, and I'm surely missing something obvious here.

The extracted components don't have the same length as my signal. I have 88 channels of several hours of recordings, so my signal matrix has shape (88, 8088516), yet the output of ICA has shape (88, 88). Besides being so short, most components show very large, noisy-looking deflections: out of 88 components only a couple look like signal, and the rest look like noise. I would have expected the opposite, with only a few components looking noisy. I suspect I'm doing something wrong here?

The matrix of (channels x samples) has shape (88, 8088516).

Sample code (using a random matrix as a minimal working example):

    import numpy as np
    from sklearn.decomposition import FastICA
    import matplotlib.pyplot as plt

    samples_matrix = np.random.random((88, 8088516))

    # Compute ICA
    # Extracting as many components as there are channels, i.e. 88
    ica = FastICA(n_components=samples_matrix.shape[0])
    components = ica.fit_transform(samples_matrix)  # Reconstruct signals
    A_ = ica.mixing_  # Get estimated mixing matrix

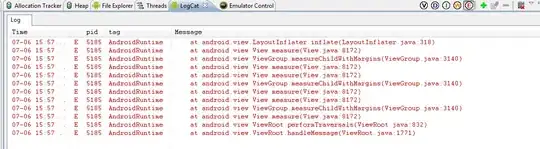

The shape of components is (88, 88). One plotted looks like this:

plt.plot(components[1])

I expected the components to be time series of the same length as my original signal, as shown in the answer to this question. I'm not sure how to proceed with component removal and signal reconstruction at this point.
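One thing that may explain the (88, 88) output: scikit-learn's `FastICA.fit_transform` expects input of shape (n_samples, n_features) and returns (n_samples, n_components). With a (channels x samples) matrix, the 88 channels are treated as 88 observations, so the result has 88 rows. The sketch below is not a confirmed fix, only an illustration of transposing the input. It assumes a small synthetic matrix (Laplace-distributed sources and a random mixing matrix, both made up for illustration) in place of the real recording, and it zeroes component 0 arbitrarily, not because it is known to be an artifact.

```python
import numpy as np
from sklearn.decomposition import FastICA

rng = np.random.default_rng(0)
n_channels, n_samples = 8, 5000  # small stand-in for (88, 8088516)

# Hypothetical data: non-Gaussian sources mixed into channels
sources = rng.laplace(size=(n_channels, n_samples))
mixing = rng.normal(size=(n_channels, n_channels))
samples_matrix = mixing @ sources  # shape (channels, samples)

ica = FastICA(n_components=n_channels, random_state=0, max_iter=1000)

# Transpose so rows are time points and columns are channels,
# then transpose back to get (n_components, n_samples)
components = ica.fit_transform(samples_matrix.T).T

# Zero out one component (index 0 chosen arbitrarily for illustration)
cleaned_components = components.copy()
cleaned_components[0, :] = 0

# Map the remaining components back to channel space
cleaned = ica.inverse_transform(cleaned_components.T).T
```

With this orientation each row of `components` is a time series as long as the original recording, and `cleaned` has the same (channels x samples) shape as `samples_matrix`.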