I'm using the `trust-constr` method of `scipy.optimize.minimize` with an interval constraint (`lb <= g(x) <= ub`).

I would like to plot the Lagrangian in a region around the found solution to analyze the convergence behavior.
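Here is a minimal sketch of the kind of evaluation I have in mind. It assumes a 2D slice through the solution `x_star`, spanned by two chosen directions `d1` and `d2`. `evaluate_on_slice` and its parameters are hypothetical helpers, not part of SciPy:

```python
import numpy as np


def evaluate_on_slice(fun, x_star, d1, d2, radius=1.0, n=41):
    """Evaluate a scalar function on the plane x_star + a*d1 + b*d2.

    Hypothetical helper: a and b each range over [-radius, radius] with n points.
    Returns the grid coordinates A, B and the function values Z (shape n x n).
    """
    x_star = np.asarray(x_star, dtype=float)
    d1 = np.asarray(d1, dtype=float)
    d2 = np.asarray(d2, dtype=float)
    a = np.linspace(-radius, radius, n)
    b = np.linspace(-radius, radius, n)
    A, B = np.meshgrid(a, b, indexing='ij')  # A[i, j] = a[i], B[i, j] = b[j]
    Z = np.empty_like(A)
    for i in range(n):
        for j in range(n):
            # Evaluate one point at a time, so fun only needs to accept a single x.
            Z[i, j] = fun(x_star + A[i, j] * d1 + B[i, j] * d2)
    return A, B, Z


# Usage with a simple quadratic standing in for the Lagrangian:
A, B, Z = evaluate_on_slice(lambda x: np.sum(x**2), x_star=[0.0, 0.0, 0.0],
                            d1=[1.0, 0.0, 0.0], d2=[0.0, 1.0, 0.0], radius=1.0, n=5)
```

The resulting `A`, `B` and `Z` could then be passed to matplotlib's `contourf`. The double loop is slow but keeps the sketch usable for functions that only accept one point at a time.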

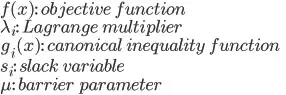

As far as I know, the Lagrangian is defined as:

with:

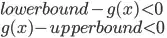
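For reference, this is one textbook way to write the log-barrier Lagrangian for this setting. The notation is my own assumption, not taken from the SciPy documentation: each of the $m$ interval constraints $l_i \le g_i(x) \le u_i$ is split into $g_i(x) - u_i \le 0$ and $l_i - g_i(x) \le 0$, with slacks $s^U_i, s^L_i > 0$, multipliers $\lambda^U_i, \lambda^L_i$ and barrier parameter $\mu$:

```latex
\mathcal{L}_\mu(x, s^U, s^L, \lambda^U, \lambda^L)
  = f(x) - \mu \sum_{i=1}^{m} \left( \ln s^U_i + \ln s^L_i \right)
  + \sum_{i=1}^{m} \lambda^U_i \left( g_i(x) - u_i + s^U_i \right)
  + \sum_{i=1}^{m} \lambda^L_i \left( l_i - g_i(x) + s^L_i \right)
```

Written this way, $m$ interval constraints give $2m$ slack variables and $2m$ Lagrange multipliers.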

In the returned `OptimizeResult`, I can find the barrier parameter (`barrier_parameter`), but not the slack variables. The Lagrange multipliers are present (`v`), but there is only one per interval constraint, whereas I would expect two, since each interval constraint is converted into two canonical inequality constraints:
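To make the expected count concrete, here is a sketch that splits the interval constraint of the example below into its upper and lower halves. It assumes the canonical form `c(x) <= 0` (my assumption about the convention); `split_interval_constraint` is a hypothetical helper:

```python
import numpy as np


def split_interval_constraint(g_values, lb, ub):
    """Split lb <= g <= ub into two stacked sets of 'c <= 0' constraints.

    Hypothetical helper: the first half is g - ub <= 0 (upper bounds),
    the second half is lb - g <= 0 (lower bounds).
    """
    g_values = np.asarray(g_values, dtype=float)
    return np.concatenate([g_values - ub, lb - g_values])


# Signed squared consecutive differences of the points (0, 0, 0, 0, 10),
# i.e. the constraint values of the example at its starting point x0.
g_at_x0 = np.array([0.0, 0.0, 0.0, 100.0])
c = split_interval_constraint(g_at_x0, lb=-9.0, ub=9.0)

print(len(g_at_x0))  # 4 interval constraints
print(len(c))        # 8 canonical inequalities, hence the 8 multipliers I expect
```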

Clearly, I'm missing something, so any help would be appreciated.

Minimal reproducible example:

```python
import scipy.optimize as so
import numpy as np

# Problem definition:
# Five 2D points are given, with equally spaced x coordinates.
# The y coordinate of the first point is 0, while the last point has y = 10.
# The goal is to find the y coordinates of the three inner points that minimize
# the mean of their squares, given that the difference between the y coordinates
# of two consecutive points has to lie within the interval [-3, 3].
xs = np.linspace(0, 4, 5)
y0s = np.zeros(xs.shape)
y0s[-1] = 10

objective_fun = lambda y: np.mean(y**2)


def constraint_fun(ys):
    '''
    Calculates the signed squared consecutive differences of the input vector,
    augmented with the first and last element of y0s.
    '''
    full_ys = y0s.copy()
    full_ys[1:-1] = ys
    consecutive_differences = full_ys[1:] - full_ys[:-1]
    return np.sign(consecutive_differences) * consecutive_differences**2


# A difference in [-3, 3] corresponds to a signed squared difference in [-9, 9].
constraint = so.NonlinearConstraint(fun=constraint_fun, lb=-3**2, ub=3**2)

result = so.minimize(method='trust-constr', fun=objective_fun,
                     constraints=[constraint], x0=y0s[1:-1])

# The number of interval constraints equals the size of the output vector of the constraint function.
print(f'Nr. of interval constraints: {len(constraint_fun(y0s[1:-1]))}')

# Expected nr. of Lagrange multipliers: 2x the number of interval constraints.
print(f'Nr. of Lagrange multipliers: {len(result.v[0])}')
```

Output:

```
Nr. of interval constraints: 4
Nr. of Lagrange multipliers: 4
```

Expected output:

```
Nr. of interval constraints: 4
Nr. of Lagrange multipliers: 8
```
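To see which side of each interval is (nearly) active at the solution, one could compare the constraint values at the solution (`result.constr[0]` in the `trust-constr` result) with the bounds. `active_side` and its tolerance `tol` are hypothetical, and the values below are the constraint values at the analytical optimum y = (1, 4, 7) of the example, derived by hand rather than taken from a SciPy run:

```python
import numpy as np


def active_side(constr_values, lb, ub, tol=1e-6):
    """Label each interval constraint as 'lower', 'upper' or 'inactive'.

    Hypothetical helper: a bound counts as active when the constraint value
    lies within the absolute tolerance tol of it.
    """
    labels = []
    for value in np.asarray(constr_values, dtype=float):
        if abs(value - lb) <= tol:
            labels.append('lower')
        elif abs(value - ub) <= tol:
            labels.append('upper')
        else:
            labels.append('inactive')
    return labels


# Signed squared differences at y = (1, 4, 7): steps 1, 3, 3, 3 -> 1, 9, 9, 9.
# With the real result this would be: active_side(result.constr[0], lb=-9, ub=9)
print(active_side([1.0, 9.0, 9.0, 9.0], lb=-9.0, ub=9.0))
```

Since `lb < ub`, at most one side of each interval can be active at a time, which is worth keeping in mind when comparing against the four values in `result.v[0]`.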