tl;dr: I am numerically estimating a PDF from simulated data, and I need the density to decrease monotonically outside the 'main' density region (as x -> infinity). What I have yields a density that is close to zero there but does not decrease monotonically.

Detailed Problem

I am estimating a simulated maximum likelihood model, which requires me to numerically evaluate the probability density function (PDF) of some random variable (whose density cannot be derived analytically) at some (observed) value x. The goal is to maximize the log-likelihood built from these densities, which requires them to have no spurious local maxima.

Since I do not have an analytic likelihood function, I simulate the random variable numerically by drawing the random component from some known distribution and applying a non-linear transformation to it. I save the results of this simulation in a dataset named simulated_stats.

I then use density() to approximate the PDF and approxfun() to evaluate the PDF at x:

#some example simulation

simulated_stats <- runif(n = 500, min = 10, max = 15) + rnorm(n = 500, mean = 15, sd = 3)

#approximation for x

approxfun(density(simulated_stats))(x)

This works well within the range of simulated_stats, see image: Example PDF. The problem is that I need to be able to evaluate the PDF far from the range of the simulated data.

So in the image above, I would need to evaluate the PDF at, say, x=50:

approxfun(density(simulated_stats))(50)

> [1] NA

So instead I use the from and to arguments of density(), which give the expected near-zero tails:

approxfun(

density(simulated_stats, from = 0, to = max(simulated_stats) * 10)

)(50)

[1] 1.924343e-18

Which is great, under one condition: I need the density to keep decreasing toward zero the further x lies from the range of the simulated data. That is, if I evaluate at x=51, the result must be strictly smaller than at x=50. (Otherwise, my estimator may find local maxima far from the 'true' region, since the likelihood function is not monotonic very far from the 'main' density mass, i.e. in the extrapolated region.)

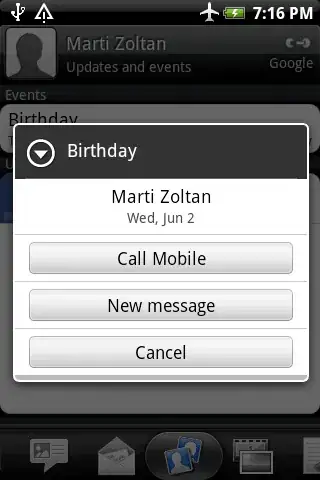

To test this I evaluated the approximated PDF at fixed intervals, took logs, and plotted. The result is discouraging: far from the main density mass the estimated density 'jumps' up and down. It is always very close to zero, but NOT monotonically decreasing.

#build the interpolated PDF once, then evaluate it on the whole grid

a <- approxfun(

density(simulated_stats, from = 0, to = max(simulated_stats) * 10)

)(seq(from = 0, to = 100, by = 0.5))

aa <- cbind( seq(from = 0, to = 100, by = 0.5), a)

plot(aa[,1],log(aa[,2]))

Result: Non-monotonic log density far from density mass
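
The same check can be done numerically instead of by eye. The following is a minimal sketch, assuming a fixed seed (added here only for reproducibility) and treating every grid point beyond the largest simulated value as the 'right tail'; the names `pdf_hat`, `tail_dens` and `n_violations` are illustrative.

```r
set.seed(1)  # assumption: fixed seed, not part of the original run
simulated_stats <- runif(n = 500, min = 10, max = 15) + rnorm(n = 500, mean = 15, sd = 3)

# Interpolated PDF, built once
pdf_hat <- approxfun(density(simulated_stats, from = 0, to = max(simulated_stats) * 10))

# Same evaluation grid as in the plot
grid <- seq(from = 0, to = 100, by = 0.5)
dens <- pdf_hat(grid)

# Right tail: grid points beyond the largest simulated value
tail_dens <- dens[grid > max(simulated_stats)]

# A strictly decreasing tail has only negative successive differences,
# so any difference >= 0 counts as a violation
n_violations <- sum(diff(tail_dens) >= 0)
n_violations
```

A count above zero confirms that the tail is not strictly decreasing on this grid.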

My question

Does this happen because of the kernel estimation in density(), or because of inaccuracies in approxfun()? (Or something else?)
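
One way to separate the two suspects is to look at the grid values returned by density() directly (no approxfun() involved) and compare them with the Gaussian kernel sum evaluated by hand. This is only a sketch: it assumes the default Gaussian kernel and the bandwidth that density() reports in `$bw`, and `log_kde_exact` is a hypothetical helper, not part of any package. For x beyond the largest simulated value, every Gaussian term decreases in x, so the hand-evaluated sum should decrease there; if the grid values do not, that would point at the numerics inside density() rather than at approxfun().

```r
set.seed(1)  # assumption: fixed seed for reproducibility
simulated_stats <- runif(n = 500, min = 10, max = 15) + rnorm(n = 500, mean = 15, sd = 3)

d <- density(simulated_stats, from = 0, to = max(simulated_stats) * 10)

# Hypothetical helper: exact log of the Gaussian KDE at a single point x0.
# The log-sum-exp trick keeps tiny tail values from underflowing to zero.
log_kde_exact <- function(x0, data, bw) {
  log_terms <- dnorm(x0, mean = data, sd = bw, log = TRUE)
  m <- max(log_terms)
  m + log(mean(exp(log_terms - m)))
}

in_tail <- d$x > max(simulated_stats)
tail_x <- d$x[in_tail]
tail_grid_y <- d$y[in_tail]  # density()'s own grid values
tail_exact <- sapply(tail_x, log_kde_exact, data = simulated_stats, bw = d$bw)

# Count non-decreasing steps in each version of the tail
grid_violations <- sum(diff(tail_grid_y) >= 0)
exact_violations <- sum(diff(tail_exact) >= 0)
c(grid = grid_violations, exact = exact_violations)
```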

What alternative methods can I use that will deliver a monotonically declining PDF far from the simulated density mass?

Or - how can I manually change the approximated PDF to monotonically decline the further I am from the density mass? I would happily stick some linear trend that goes to zero...
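
To make the kind of manual patch described above concrete, here is a rough sketch. It follows the KDE inside the simulated range and lets the log-density fall off linearly outside it. Everything here is an assumption for illustration: `make_log_pdf` is a hypothetical helper, the cutoffs are simply the minimum and maximum of the data, and `rate` is an arbitrary decay rate that would have to be chosen by the user.

```r
set.seed(1)  # assumption: fixed seed for reproducibility
simulated_stats <- runif(n = 500, min = 10, max = 15) + rnorm(n = 500, mean = 15, sd = 3)

# Hypothetical helper: returns a log-density function that uses the KDE
# between min(data) and max(data) and a linear decline (in logs) outside
make_log_pdf <- function(data, rate = 1) {
  lo <- min(data)
  hi <- max(data)
  # Default density() grid extends past the data, so lo and hi are interior
  inner <- approxfun(density(data))
  log_lo <- log(inner(lo))
  log_hi <- log(inner(hi))
  function(x) {
    out <- numeric(length(x))
    inside <- x >= lo & x <= hi
    out[inside] <- log(inner(x[inside]))
    # Outside the data range: start at the boundary value and decline
    out[x > hi] <- log_hi - rate * (x[x > hi] - hi)
    out[x < lo] <- log_lo - rate * (lo - x[x < lo])
    out
  }
}

log_pdf <- make_log_pdf(simulated_stats, rate = 1)
log_pdf(c(50, 51))  # second value is smaller than the first by construction
```

Note that the patched function no longer integrates exactly to one, so this only makes sense if the tails are needed for guiding the optimizer rather than as an accurate density.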

Thanks!