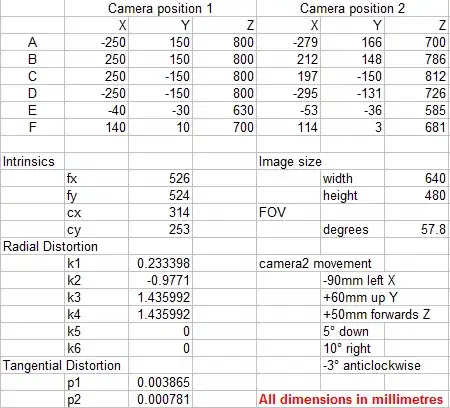

I'm using XGBoost (xgb) and tuned my hyperparameters with Hyperopt. However, when I plot the evaluation metric for the training set and the validation set after fitting my model, the two lines intersect. What does that mean? Also, the validation line doesn't start near the training line.
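To make "intersect" concrete, here is a minimal sketch of how the crossing point could be located from the per-round metric values (for example, the lists returned by `evals_result()`). The helper name `crossing_rounds` and the numbers below are made up for illustration; they are not my actual results.

```python
def crossing_rounds(train, valid):
    """Return the boosting rounds at which (valid - train) changes sign."""
    crossings = []
    for i in range(1, min(len(train), len(valid))):
        prev_gap = valid[i - 1] - train[i - 1]
        gap = valid[i] - train[i]
        # A strict sign change between consecutive rounds means the curves crossed.
        if prev_gap * gap < 0:
            crossings.append(i)
    return crossings


# Illustrative toy values only (hypothetical NDCG per round).
train_ndcg = [0.60, 0.66, 0.71, 0.75, 0.78]
valid_ndcg = [0.68, 0.69, 0.70, 0.705, 0.707]

print(crossing_rounds(train_ndcg, valid_ndcg))  # [2]
```

In this toy case the validation score starts above the training score and is overtaken at round 2, which is the kind of pattern I am seeing in my plot.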

I fit the model with early_stopping_rounds = 20 before plotting this graph.
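For context, my understanding of what early stopping does is roughly the sketch below: training halts once the validation metric has not improved for a given number of consecutive rounds, and the best round is remembered. This is a simplified pure-Python illustration, not XGBoost's actual implementation; the function name `early_stop` and the scores are hypothetical, and I am assuming the metric is maximized (as with NDCG) and that ties do not count as an improvement.

```python
def early_stop(scores, patience, maximize=True):
    """Return (best_round, last_round_run) for a sequence of validation scores.

    Stops once `patience` rounds have passed without a strict improvement.
    """
    best_score = None
    best_round = -1
    for i, score in enumerate(scores):
        if best_score is None:
            improved = True
        elif maximize:
            improved = score > best_score
        else:
            improved = score < best_score

        if improved:
            best_score, best_round = score, i
        elif i - best_round >= patience:
            # No improvement for `patience` rounds: stop here.
            return best_round, i
    # Ran out of rounds before the patience was exhausted.
    return best_round, len(scores) - 1


# Illustrative toy values only, with a short patience of 3 instead of 20.
valid_scores = [0.50, 0.60, 0.65, 0.64, 0.65, 0.63, 0.62]
print(early_stop(valid_scores, patience=3))  # (2, 5)
```

Under this reading, the plotted curves can extend up to 20 rounds past the best validation round, so the right-hand end of the graph is not necessarily the point the model is judged on.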

The hyperparameters I got from Hyperopt are as follows:

{'booster': 'gbtree', 'colsample_bytree': 0.8814444518931106, 'eta': 0.0712456143241873, 'eval_metric': 'ndcg', 'gamma': 0.8925113465433823, 'max_depth': 8, 'min_child_weight': 5, 'objective': 'rank:pairwise', 'reg_alpha': 2.2193560083517383, 'reg_lambda': 1.8600142721064354, 'seed': 0, 'subsample': 0.9818535865621624}

I thought Hyperopt would give me the best parameters. What could I change to improve this?

Edit

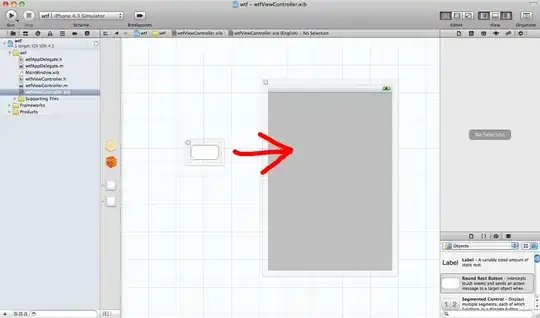

I changed n_estimators from 527 to 160, and it now gives me this graph. Is this graph okay? Any advice is much appreciated!