First, there is a small misunderstanding about the OS and kernel patching process.

> In my understanding, it works by cordoning and draining the node, and the pods are scheduled on a new node with the older version.

Any new node that is added should come with the latest node image version available for the node pool, including the latest security patches (which usually does not fall back to an older kernel version). You can check out the AKS node image releases here. Reference

However, the pods evicted by the drain operation from the node that is being rebooted do not necessarily land on the surge node. Evicted pods might very well be scheduled on an existing node, provided that node meets the scheduling requirements of those pods.

For every Pod that the scheduler discovers, the scheduler becomes responsible for finding the best Node for that Pod to run on. The scheduler reaches this placement decision taking into account the scheduling principles described here.
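To make the point about placement concrete, here is a toy sketch of the filter-then-rank idea. It is not the kube-scheduler's actual algorithm; the node names, the capacity numbers, and the "most free CPU" ranking rule are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int            # unreserved CPU in millicores (made-up numbers)
    free_mem_mi: int           # unreserved memory in MiB (made-up numbers)
    schedulable: bool = True   # False once the node has been cordoned


def rank_nodes(nodes, cpu_m, mem_mi):
    """Return the nodes that can host the pod, best candidate first."""
    # Filtering step: drop cordoned nodes and nodes without enough room.
    feasible = [
        n for n in nodes
        if n.schedulable and n.free_cpu_m >= cpu_m and n.free_mem_mi >= mem_mi
    ]
    # Toy ranking rule (an assumption): prefer the node with the most free CPU.
    return sorted(feasible, key=lambda n: n.free_cpu_m, reverse=True)


nodes = [
    Node("node-being-rebooted", 3500, 8000, schedulable=False),  # cordoned
    Node("existing-node", 3000, 6000),
    Node("surge-node", 2800, 7000),
]

ranked = rank_nodes(nodes, cpu_m=500, mem_mi=512)
print([n.name for n in ranked])
```

Both the existing node and the surge node pass the filter here, so either one is a valid target for an evicted pod. An empty result would mean the pod stays Pending until capacity shows up.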

The documentation, at the time of writing, might be a little misleading on this.

About the error:

Reason: ScaleDownFailed

Message: failed to drain the node, aborting ScaleDown

This might happen due to a number of reasons. Common ones might be:

- The scheduler could not find a suitable node to place evicted pods and the node pool could not scale up due to insufficient compute quota available. [Reference]

- The scheduler could not find a suitable node to place evicted pods and the cluster could not scale up due to insufficient IP addresses in the node pool's subnet. [Reference]

- PodDisruptionBudgets (PDBs) did not allow for at least 1 pod replica to be moved at a time, causing the drain/evict operation to fail. [Reference]
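For the PDB case in particular, it can help to work out how many voluntary disruptions a budget leaves room for. The following is a minimal sketch of that arithmetic for integer budgets only (percentage values are deliberately left out); the function name and parameters are my own, not a Kubernetes API.

```python
def disruptions_allowed(current_healthy, expected_pods,
                        min_available=None, max_unavailable=None):
    """Estimate how many pods a PDB would let you evict right now.

    Integer budgets only; exactly one of min_available or
    max_unavailable must be given, as in a PDB spec.
    """
    if (min_available is None) == (max_unavailable is None):
        raise ValueError("set exactly one of min_available or max_unavailable")

    if min_available is not None:
        desired_healthy = min_available
    else:
        # maxUnavailable is counted against the expected number of pods.
        desired_healthy = max(0, expected_pods - max_unavailable)

    # Evictions are only possible while healthy pods exceed the floor.
    return max(0, current_healthy - desired_healthy)


# 3 replicas, all healthy, minAvailable equal to the replica count:
# no pod can ever be evicted, so a drain will keep failing.
print(disruptions_allowed(current_healthy=3, expected_pods=3, min_available=3))

# Same workload with minAvailable 2: one pod can move at a time.
print(disruptions_allowed(current_healthy=3, expected_pods=3, min_available=2))
```

On a live cluster, the equivalent number is reported in the PDB's status (the allowed disruptions column of `kubectl get pdb`), so a value of 0 there is the first thing to look for.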

In general,

The Eviction API can respond in one of three ways:

- If the eviction is granted, then the Pod is deleted as if you sent a DELETE request to the Pod's URL and received back 200 OK.

- If the current state of affairs wouldn't allow an eviction by the rules set forth in the budget, you get back 429 Too Many Requests. This is typically used for generic rate limiting of any requests, but here we mean that this request isn't allowed right now but it may be allowed later.

- If there is some kind of misconfiguration; for example multiple PodDisruptionBudgets that refer the same Pod, you get a 500 Internal Server Error response.

For a given eviction request, there are two cases:

- There is no budget that matches this pod. In this case, the server always returns 200 OK.

- There is at least one budget. In this case, any of the three above responses may apply.

Stuck evictions

In some cases, an application may reach a broken state, one where unless you intervene the eviction API will never return anything other than 429 or 500.

For example: this can happen if ReplicaSet is creating Pods for your application but the replacement Pods do not become Ready. You can also see similar symptoms if the last Pod evicted has a very long termination grace period.
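The response handling described above, including the stuck case, can be sketched as a small retry loop. This is only an illustration of the logic a drain client might follow, not how `kubectl drain` or AKS is actually implemented. The `evict` callable is a hypothetical stand-in for whatever sends the eviction request and returns the HTTP status code, and the attempt limit and delay are arbitrary.

```python
import time
from typing import Callable


def evict_with_retry(evict: Callable[[], int],
                     max_attempts: int = 5,
                     delay_s: float = 5.0,
                     sleep: Callable[[float], None] = time.sleep) -> str:
    """Call evict() until it is granted, fails hard, or looks stuck."""
    for attempt in range(1, max_attempts + 1):
        status = evict()
        if 200 <= status < 300:
            # Eviction granted; the text above describes this as 200 OK.
            return "evicted"
        if status == 429:
            # Blocked by a budget right now; it may be allowed later.
            if attempt < max_attempts:
                sleep(delay_s)
            continue
        if status == 500:
            # Likely misconfiguration, e.g. several PDBs matching one Pod.
            return "misconfigured"
        return f"unexpected:{status}"
    # Only 429s within the attempt limit: treat as a stuck eviction.
    return "stuck"


# Simulated responses: blocked twice, then granted.
responses = iter([429, 429, 200])
print(evict_with_retry(lambda: next(responses), sleep=lambda s: None))

# Simulated stuck eviction: the budget never opens up.
print(evict_with_retry(lambda: 429, max_attempts=3, sleep=lambda s: None))
```

A "stuck" result is the situation where manual intervention is needed, for example fixing the workload so replacement Pods become Ready, or adjusting the budget.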

How to investigate further?

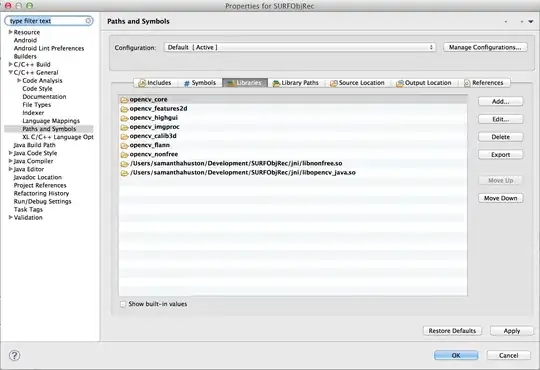

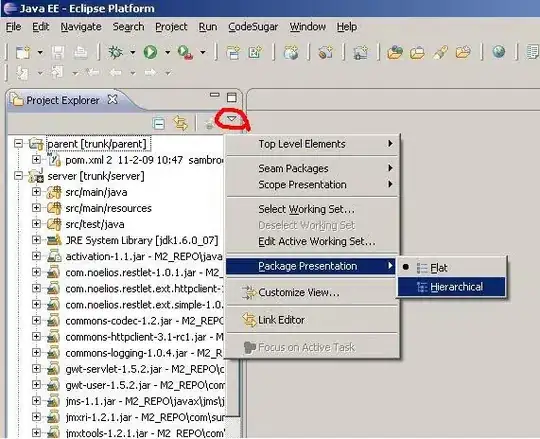

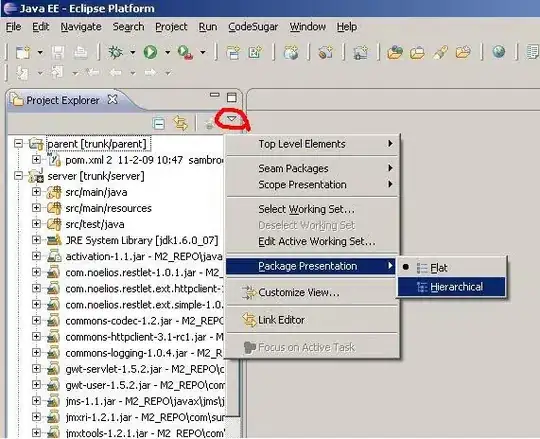

On the Azure Portal navigate to your AKS cluster

Go to Resource Health on the left hand menu as shown below and click on Diagnose and solve problems

You should see something like the following

If you click on each of the options, you should see a number of checks loading. You can set the time frame of impact on the top right hand corner of the screen as shown below (Please press the Enter key after you have set the correct timeframe). You can click on the More Info link on the right hand side of each entry for detailed information and recommended action.

How to mitigate the issue?

Once you have identified the issue and applied the recommended fix, please run `az aks upgrade` on the AKS cluster, targeting the same Kubernetes version it is currently running. This should initiate a reconcile operation under the hood wherever one is required.
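As a rough sketch of that mitigation, the snippet below only builds and prints the two Azure CLI invocations involved: one to read the cluster's current Kubernetes version and one to upgrade to that same version. The resource group, cluster name, and version string are placeholders I made up; substitute your own values and run the printed commands yourself.

```python
import shlex


def build_reconcile_commands(resource_group, cluster_name, current_version):
    """Build az CLI argument lists for a same-version upgrade (not executed)."""
    # Reads the Kubernetes version the cluster is currently running.
    show_cmd = [
        "az", "aks", "show",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--query", "kubernetesVersion",
        "--output", "tsv",
    ]
    # Upgrades to the version already in use, which should trigger a reconcile.
    upgrade_cmd = [
        "az", "aks", "upgrade",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--kubernetes-version", current_version,
    ]
    return show_cmd, upgrade_cmd


# Placeholder values, not taken from the question.
show_cmd, upgrade_cmd = build_reconcile_commands(
    "my-resource-group", "my-aks-cluster", "1.27.7"
)
print(shlex.join(show_cmd))
print(shlex.join(upgrade_cmd))
```

In practice you would take the version printed by the first command and pass it to the second, rather than hard-coding it as done here.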