I am working with 2D convex polygons, and have two sets of points surrounding it, lets call the neighborhood closest to the polygon A and the one further out B. These sets of points are offsets of the original polygon, the offsetting itself was done using Shapely in Python (which admittedly does not do a great job, if anyone knows of any alternatives please do comment).

What I would like to do now is for each point in A, find the point in B that is closest to it in normal direction of the polygon's edges. I've attached some images below that explain what I'm doing better than I can in words.

So far I've attempted to do this myself and get poor results. My approach is for each point in A, find its two closest vertices using a nearest neighbor search, these two points can be used to calculate a slope. I find the line perpendicular to this slope anchored at the point A I am currently iterating through. I then construct a bunch of points along this line and find the point in B that is closest to it. To make sure I am finding points in the right direction, I actually find the two closest points in B and chose the one such that its distance to the point I'm iterating through in A is minimized. This is done for all points in A.

A few shortcomings of this approach:

- If a point is exactly at a vertex it fails and gives garbage

- If there is a long edge and the point is near the beginning or end of this edge, it might chose the wrong two vertices to calculate the slope.

This leads me to believe there must be a better way. Here is an image explaining what I would like to do:

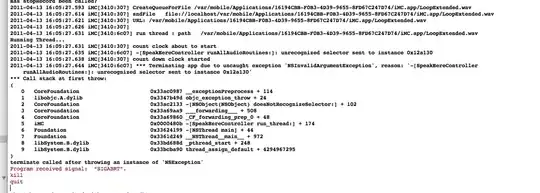

The red dashed line is an example of the convex polygon I am working with. The black points represent the smaller offset, A, and the white points are the bigger offset, B. The green points are the points in B that my code currently identifies as the normal points, though they are clearly wrong. I have drawn in the blue arrows to show what I mean by the normal direction of each edge. As en example of the code's shortcoming, you can see how at the rightmost points where two points reside exactly at a vertex the code doesn't choose the points we would expect.

Here is a copy of the code itself:

def Nearest_Points(inner, outer, vertices):

step = 0.1

near_points = []

tree = KDTree(vertices)

line_tree = KDTree(outer_points)

for point in inner_points:

#Finding closest vertices to point

dist, index = tree.query(point, k = 2)

nearest_vertex1 = vertices[index[0]]

nearest_vertex2 = vertices[index[1]]

#Constructing line perpendicular to edge and anchored at point

dy = nearest_vertex2[1]-nearest_vertex1[1]

dx = nearest_vertex2[0]-nearest_vertex1[0]

line_points = []

if dy != 0:

for i in range(-50, 51):

x = point[0]+i*step

y = -dx/dy*(x-point[0])+point[1]

line_points.append([x, y])

else:

for i in range(-50, 51):

line_points.append([point[0], point[1]+step*i])

#Finding the two points in outer neighborhood closest to line

dist2, index_2 = line_tree.query(line_points, k = 2)

dist2_arr = np.asarray(dist2)

min_1 = np.min(dist2_arr[:,0])

min_2 = np.min(dist2_arr[:,1])

for i in range(len(dist2)):

if dist2[i][0] == min_1:

index_2_1 = i

if dist2[i][1] == min_2:

index_2_2 = i

near1 = outer_points[index_2[index_2_1][0]]

near2 = outer_points[index_2[index_2_2][1]]

#Of these two points, finding the one closest to the point currently being iterated through

near1_dist = (near1[0]-point[0])**2 + (near1[1]-point[1])**2

near2_dist = (near2[0]-point[0])**2 + (near2[1]-point[1])**2

if near1_dist < near2_dist:

near_points.append(near1)

else:

near_points.append(near2)

return near_points

Thank you.