I want to know whether the `attn_output_weight` matrix can show the relationship between every pair of words in the input sequence.
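For reference, here is a minimal NumPy sketch of how a single-head scaled dot-product attention weight matrix is formed. It assumes the weights in question are standard softmax attention weights. The function name `attention_weights` and the random inputs are hypothetical. Entry `[i, j]` is the normalized score between query token `i` and key token `j`, and each row sums to 1.

```python
import numpy as np

def attention_weights(q, k):
    """Scaled dot-product attention weights for one head (hypothetical helper).

    q: (L, d) query vectors, k: (S, d) key vectors.
    Returns an (L, S) matrix whose row i is a softmax over key positions,
    i.e. how strongly query token i attends to each key token j.
    """
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)                 # raw pairwise scores
    scores -= scores.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    w = np.exp(scores)
    return w / w.sum(axis=-1, keepdims=True)      # normalize each row to sum to 1

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 8))   # 5 hypothetical token embeddings of size 8
w = attention_weights(x, x)       # toy self-attention without learned projections
print(w.shape)                    # (5, 5)
print(w.sum(axis=-1))             # each row sums to 1 (up to floating-point rounding)
```

Because this toy example uses `q = k = x` with no learned projections, each token's score with itself is its squared norm, which tends to make the diagonal stand out. A trained layer applies separate query and key projections, so a dominant diagonal is not guaranteed there.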

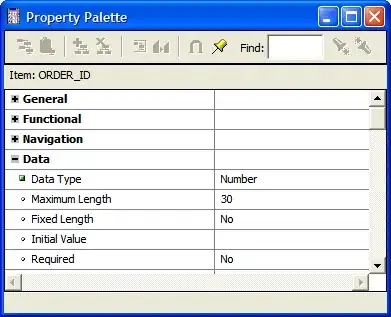

In my project, I drew a heat map from this output, and it looks like this:

However, I can hardly see any meaningful pattern in this heat map.

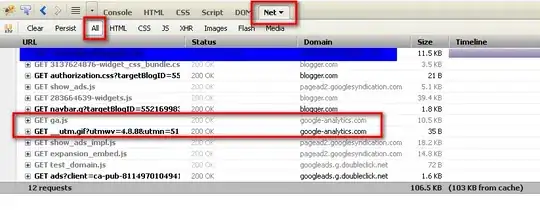

I looked at other people's work, and their heat maps look like this. At the very least, the diagonal of the matrix should have a deeper color.

So I wonder whether my method of drawing the heat map (i.e., directly plotting the `attn_output_weight` output) is correct. If it is not, could you please tell me how to draw the heat map?
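Here is a minimal sketch of reducing the weights to one 2-D matrix before plotting. It assumes they come from PyTorch's `nn.MultiheadAttention`, which returns them when `need_weights=True`. They are averaged over heads with shape `(N, L, S)` by default, or kept per head with shape `(N, num_heads, L, S)` when `average_attn_weights=False`. The sketch also assumes they have been converted to NumPy, e.g. with `.detach().cpu().numpy()`. The helper `weights_for_plot` and its `seq_len` argument are hypothetical. Trimming by `seq_len` assumes self-attention (L = S) with padding at the end of the sequence.

```python
import numpy as np

def weights_for_plot(attn_weights, sample=0, seq_len=None):
    """Reduce attention weights to one 2-D (L, S) matrix for a heat map (hypothetical helper).

    attn_weights: array of shape (N, L, S) (already averaged over heads)
                  or (N, H, L, S) (per-head weights).
    sample:       index of the batch element to plot.
    seq_len:      true (unpadded) length; assumes L == S and padding at the end.
    """
    w = np.asarray(attn_weights)[sample]   # pick one batch element
    if w.ndim == 3:                         # per-head weights (H, L, S): average over heads
        w = w.mean(axis=0)
    if seq_len is not None:                 # drop padded query rows and key columns
        w = w[:seq_len, :seq_len]
    return w

# Hypothetical per-head weights: batch N=2, H=4 heads, L=S=10 positions.
rng = np.random.default_rng(0)
fake = rng.random((2, 4, 10, 10))
fake /= fake.sum(axis=-1, keepdims=True)    # make each row a probability distribution
m = weights_for_plot(fake, sample=1, seq_len=7)
print(m.shape)  # (7, 7)
```

The resulting matrix can be passed to `matplotlib.pyplot.imshow`, with the tokens as tick labels. One thing worth checking is an assumption about the setup that the post does not confirm. `nn.MultiheadAttention` defaults to `batch_first=False` and expects `(L, N, E)` input. Feeding `(N, L, E)` tensors without setting `batch_first=True` makes the layer attend across the batch instead of across words, which can produce an uninformative heat map.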