When plotting the first tree of a regression model with `create_tree_digraph`, the leaf values make no sense to me. For example:

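```python
# Note: load_boston was removed in scikit-learn 1.2, so this needs an older scikit-learn
from sklearn.datasets import load_boston
import lightgbm as lgb

X, y = load_boston(return_X_y=True)

data = lgb.Dataset(X, label=y)

# Train a single boosting round with default parameters
bst = lgb.train({}, data, num_boost_round=1)

# Plot the first (and only) tree; requires the graphviz Python package
lgb.create_tree_digraph(bst)
```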
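The leaf values drawn in the digraph can also be read as numbers, which makes them easier to compare with `predict` output. Below is a minimal pure-Python sketch, assuming the nested layout of one tree's `"tree_structure"` entry from `Booster.dump_model()` (leaves carry `"leaf_value"`, internal nodes carry `"left_child"`/`"right_child"`). `iter_leaves` and `toy_tree` are hypothetical names, and the toy tree's numbers are placeholders, not output from the model above.

```python
from typing import Any, Dict, Iterator, Tuple


def iter_leaves(node: Dict[str, Any]) -> Iterator[Tuple[int, float]]:
    """Yield (leaf_index, leaf_value) for every leaf under `node`, left to right.

    Assumes the dump_model() layout: internal nodes have "left_child" and
    "right_child"; leaves have "leaf_value" (and usually "leaf_index").
    """
    if "left_child" not in node:
        # A tree with a single leaf may omit "leaf_index"; treat it as leaf 0
        yield node.get("leaf_index", 0), node["leaf_value"]
        return
    yield from iter_leaves(node["left_child"])
    yield from iter_leaves(node["right_child"])


# Hypothetical two-leaf tree written by hand to mimic the dump format
toy_tree = {
    "split_feature": 5,
    "threshold": 7.0,
    "left_child": {"leaf_index": 0, "leaf_value": 1.0},
    "right_child": {"leaf_index": 1, "leaf_value": 2.0},
}

print(dict(iter_leaves(toy_tree)))  # {0: 1.0, 1: 2.0}
```

With the booster from the question, `dict(iter_leaves(bst.dump_model()["tree_info"][0]["tree_structure"]))` should list the same leaf values that the plotted tree shows.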

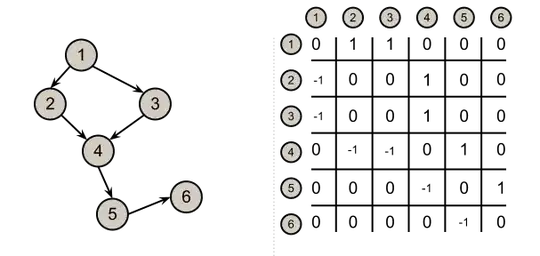

Gives the following tree:

Taking leaf 3 as an example, these appear to be its fitted values:

```python
bst.predict(X, num_iteration=0)[X[:, 5] > 7.437]
```

```
array([24.78919238, 24.78919238, 24.78919238, 24.78919238, 24.78919238,
       24.78919238, 24.78919238, 24.78919238, 24.78919238, 24.78919238,
       24.78919238, 24.78919238, 24.78919238, 24.78919238, 24.78919238,
       24.78919238, 24.78919238, 24.78919238, 24.78919238, 24.78919238,
       24.78919238, 24.78919238, 24.78919238, 24.78919238, 24.78919238,
       24.78919238, 24.78919238, 24.78919238, 24.78919238, 24.78919238])
```

But these seem like terrible predictions compared with the obvious, trivial approach of taking the mean of the targets in that leaf:

```python
y[X[:, 5] > 7.437]
```

```
array([38.7, 43.8, 50. , 50. , 50. , 50. , 39.8, 50. , 50. , 42.3, 48.5,
       50. , 44.8, 50. , 37.6, 46.7, 41.7, 48.3, 42.8, 44. , 50. , 43.1,
       48.8, 50. , 43.5, 35.2, 45.4, 46. , 50. , 21.9])
```

```python
y[X[:, 5] > 7.437].mean()
```

```
45.09666666666667
```

What am I missing here?
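One hypothesis worth checking (a sketch under stated assumptions, not a confirmed explanation): if LightGBM's defaults for L2 regression apply here, namely `learning_rate=0.1`, `lambda_l2=0` and `boost_from_average=True`, then the first tree's leaf would hold the global mean of `y` plus 0.1 times the mean residual of the samples in that leaf, rather than the leaf mean itself. The snippet plugs in the 30 targets printed above. The global mean `22.532806324110677` is an assumption, since it is not shown in this post; verify it locally with `y.mean()`. `first_tree_leaf_value` is a hypothetical helper, not a LightGBM function.

```python
from statistics import mean

# Targets for the samples with X[:, 5] > 7.437, copied from the output above
leaf_y = [38.7, 43.8, 50.0, 50.0, 50.0, 50.0, 39.8, 50.0, 50.0, 42.3, 48.5,
          50.0, 44.8, 50.0, 37.6, 46.7, 41.7, 48.3, 42.8, 44.0, 50.0, 43.1,
          48.8, 50.0, 43.5, 35.2, 45.4, 46.0, 50.0, 21.9]

# ASSUMPTION: mean of y over the whole dataset (check with y.mean())
global_mean = 22.532806324110677

# ASSUMPTION: default learning_rate; lambda_l2=0 and boost_from_average=True
learning_rate = 0.1


def first_tree_leaf_value(leaf_targets, init_score, shrinkage):
    """Hypothetical first-round leaf value under the assumptions above.

    For squared error with a constant initial score, the unshrunk leaf output
    is the mean residual (y - init_score); it is scaled by the learning rate,
    and the initial score is assumed to be folded into the first tree's leaves.
    """
    mean_residual = mean(y_i - init_score for y_i in leaf_targets)
    return init_score + shrinkage * mean_residual


print(round(mean(leaf_y), 4))  # 45.0967
print(round(first_tree_leaf_value(leaf_y, global_mean, learning_rate), 4))  # 24.7892
```

If these assumptions hold, the result matches the 24.78919238 returned by `predict`, which would point to learning-rate shrinkage after a single boosting round rather than a poor split.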