This is a follow-up to an earlier specific question, but as I add more complexity to the problem formulation, I realize that I need to take a step back and consider whether cvxpy is the best tool for my problem.

What I'm trying to solve: create the largest cluster of a category and company where the average values are above a particular threshold. The trick is that if we include particular categories for a company in the cluster, in order to add another company, that company also should have high values for the same categories.

I've formulated this as an integer linear optimization problem, where I've expanded out all the variables. There are 2 main problems:

- Constraint 8 violates the DCP rule. Constraint 8 is intended to keep the values included in the cluster above a particular threshold. I check this by taking the average of the non-zero variables.

- The actual problem will require thousands of variables and constraints to be specified (there are 100 categories and >10K companies). This makes me question whether this is the correct approach at all.

import numpy as np

import cvxpy as cp

import cvxopt

util = np.array([0.7, 0.95, 0.3, 2, 1.05, 2.2, 4, 1, 3])

# The variable we are solving for

dec_vars = cp.Variable(len(util), boolean = True)

tot_util = util @ dec_vars

is_zero_bucket1= cp.Variable(1, boolean = True)

is_zero_bucket2= cp.Variable(1, boolean = True)

is_zero_bucket3= cp.Variable(1, boolean = True)

is_zero_cat1 = cp.Variable(1, boolean=True)

is_zero_cat2 = cp.Variable(1, boolean=True)

is_zero_cat3 = cp.Variable(1, boolean=True)

# at least 2 categories should be included for a given company

constr1 = sum(dec_vars[0:3]) >= 2 * is_zero_bucket1

constr2 = sum(dec_vars[3:6]) >= 2 * is_zero_bucket2

constr3 = sum(dec_vars[6:9]) >= 2 * is_zero_bucket3

# at least 2 columns (companies) for a given category that's selected

constr4 = dec_vars[0] + dec_vars[3] + dec_vars[6] >= 2 * is_zero_cat1

constr5 = dec_vars[1] + dec_vars[4] + dec_vars[7] >= 2 * is_zero_cat2

constr6 = dec_vars[2] + dec_vars[5] + dec_vars[8] >= 2 * is_zero_cat3

constr7 = np.ones(len(util)) @ dec_vars >= 2

constr8 = (tot_util/cp.sum(dec_vars)) >= 1.3

cluster_problem = cp.Problem(cp.Maximize(tot_util), [constr1, constr2, constr3, constr4, constr5, constr6, constr8])

cluster_problem.solve()

Update

I modified as per the suggestions. The problem now is that the decision variables matrix itself doesn't incorporate the rules for adjacent companies / categories. To do that, I can make the objective function the product of the final decision vars matrix and the zero_comp_vars and zero_cat_vars vectors. However, getting an Problem does not follow DCP rules error when I do this. Must be because of multiplying the 2 matrices by the two vectors. Also, I wonder whether there is a way to get the program to zero out the variables in the appropriate places in the decision matrix when they don't meet the criteria for at least 2 companies and 3 categories to be non-zero in order to be included. As it stands, the decision variables matrix will have 1s for rows/columns that are actually zeroed out by the zero_comp_vars and zero_cat_vars variables

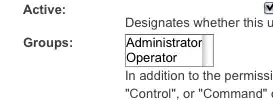

util = np.array([[0.7, 0.95, 0.3], [2, 1.05, 2.2], [4, 1, 3]])

# The variable we are solving for

dec_vars = cp.Variable(util.shape, boolean = True)

zero_comp_vars = cp.Variable(util.shape[0], boolean = True)

zero_cat_vars = cp.Variable(util.shape[1], boolean = True)

# define constraints

zero_comp_constr = cp.sum(dec_vars, axis=1) >= 2 * zero_comp_vars

zero_cat_constr = cp.sum(dec_vars, axis=0) >= 3 * zero_cat_vars

# need the following two constraints, otherwise all the values in the zero_comp_constr and zero_cat_constr vectors can be 0

above_one_non_zero_comp = cp.sum(zero_comp_vars) >= 1

above_one_non_zero_cat = cp.sum(zero_cat_vars) >= 1

# min tin array

min_comp_array=np.empty(dec_vars.shape[0])

min_comp_array.fill(2)

min_comp_constr = cp.sum(dec_vars, axis=1) >= min_comp_array

tot_avg_constr = tot_util >= 2.0 * cp.sum(dec_vars)

# this is the section that gives error

temp = cp.multiply(util, dec_vars)

temp2 = cp.multiply(temp, cp.reshape(zero_comp_vars, (3,1)))

temp3 = cp.multiply(temp2, cp.reshape(zero_cat_vars, (3,1)))

tot_util = cp.sum(temp3)

#tot_util = cp.sum(cp.multiply(util, dec_vars))

cluster_problem = cp.Problem(cp.Maximize(tot_util), [zero_comp_constr, zero_cat_constr,

above_one_non_zero_comp, above_one_non_zero_cat,

min_comp_constr, tot_avg_constr])

cluster_problem.solve()