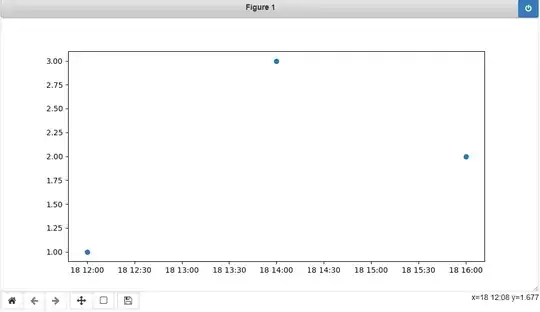

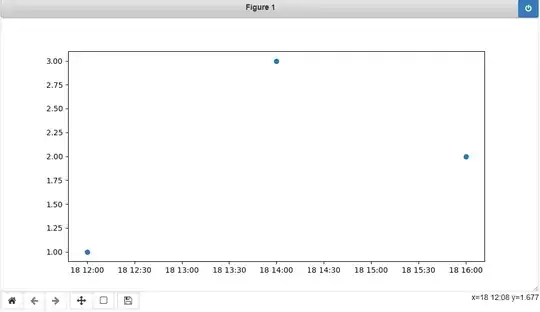

You're not doing anything particularly wrong (except perhaps for the `objectif` parameter, which doesn't exist in xgboost), but you have to consider how xgboost works. It builds "trees", and trees have splits based on the values of the features. From the plot you show here, it looks like very few samples go above 0. A purely random train/test split will therefore likely produce a test set with virtually no samples above 0, which shows up as a horizontal line.
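One way to check whether a split suffers from this is to compare the share of non-zero targets in the train and test parts. The snippet below is only a minimal sketch using the standard library: the toy target and the helper names `positive_share` and `random_split` are made up for illustration and are not part of your data or of any library.

```python
import random


def positive_share(values, threshold=0.0):
    """Return the fraction of values strictly above `threshold`."""
    values = list(values)
    if not values:
        return 0.0
    return sum(v > threshold for v in values) / len(values)


def random_split(values, test_size=0.2, seed=42):
    """Plain random split without stratification (hypothetical helper)."""
    rng = random.Random(seed)
    shuffled = list(values)
    rng.shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_size))
    # The first n_test shuffled items form the test set, the rest the train set
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical toy target: 97 zeros and only 3 positive values
y = [0.0] * 97 + [0.5, 1.2, 3.0]
y_train, y_test = random_split(y)

print(f"positive share, train: {positive_share(y_train):.3f}")
print(f"positive share, test:  {positive_share(y_test):.3f}")
```

If the two shares differ a lot (or the test share is zero), the split is not representative and stratifying on something like "is the target above 0" is worth considering.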

Other than that, it seems you want to fit a linear model on non-linear data. Selecting a different objective function is likely to help with this.
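To get a feel for why the objective matters on a skewed, non-negative target, here is a small sketch comparing plain squared error with the per-sample loss that the xgboost documentation gives for `reg:squaredlogerror`, namely ½[log(pred + 1) − log(label + 1)]². The function names are hypothetical and this is not xgboost's internal implementation, just the formulas written out.

```python
import math


def squared_error(pred, label):
    """Plain squared error, scaled by 1/2 to match the log variant."""
    return 0.5 * (pred - label) ** 2


def squared_log_error(pred, label):
    """Squared log error: 1/2 * (log(pred + 1) - log(label + 1))^2."""
    if pred <= -1 or label <= -1:
        # The logarithm is undefined here; labels must be greater than -1
        raise ValueError("pred and label must be greater than -1")
    return 0.5 * (math.log1p(pred) - math.log1p(label)) ** 2


# Hypothetical (label, prediction) pairs at very different scales
for label, pred in [(0.0, 0.1), (10.0, 11.0), (100.0, 110.0)]:
    se = squared_error(pred, label)
    sle = squared_log_error(pred, label)
    print(f"label={label:6.1f} pred={pred:6.1f} SE={se:8.4f} SLE={sle:8.6f}")
```

Squared error grows with the absolute size of the miss, so a few large values dominate training. The log variant looks at relative differences instead, which is one reason it can behave better when most targets sit near 0 and a handful are much larger.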

Finally, how do you know that your model is not learning anything? I don't see any evaluation metrics to confirm this. Try to think of meaningful evaluation metrics for your model and show them. This will help you determine if your model is "good enough".
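A simple sanity check is to compare the model against a baseline that always predicts the mean of the target. The sketch below uses only the standard library and made-up numbers; `mse` and `r2_vs_mean_baseline` are hypothetical helper names. With real data you would more likely use `sklearn.metrics`.

```python
def mse(y_true, y_pred):
    """Mean squared error between two equally long sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_vs_mean_baseline(y_true, y_pred):
    """R2 written as 1 - MSE(model) / MSE(always predicting the mean)."""
    mean = sum(y_true) / len(y_true)
    baseline = mse(y_true, [mean] * len(y_true))
    if baseline == 0:
        raise ValueError("target is constant, R2 is undefined")
    return 1 - mse(y_true, y_pred) / baseline


# Hypothetical toy values: mostly zeros, one large target
actual = [0.0, 0.0, 0.0, 4.0]
predicted = [0.1, 0.0, 0.2, 3.0]

print(f"MSE: {mse(actual, predicted):.4f}")
print(f"R2:  {r2_vs_mean_baseline(actual, predicted):.4f}")
```

An R2 of 0 means the model is no better than predicting the mean, anything above 0 means it has picked up at least some signal, and a negative value means it is worse than the baseline.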

To summarize:

- Fix the imbalance in your dataset (or at least take it into consideration)

- Select an appropriate objective function

- Check evaluation metrics that make sense for your model

From the example below, it looks like your model is indeed learning something, even without parameter tuning (which you should do!).

    import pandas
    import xgboost
    from sklearn.model_selection import train_test_split
    from sklearn.metrics import mean_squared_error, r2_score

    # Read the data
    df = pandas.read_excel("./data.xlsx")

    # Split in X and y
    X = df.drop(columns=["HITS_DAY"])
    y = df["HITS_DAY"]

    # Show the values of the full dataset in a plot
    y.sort_values().reset_index()["HITS_DAY"].plot()

    # Split in test and train, use stratification to make sure the 2 groups look similar
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.20, random_state=42, stratify=[element > 1 for element in y.values]
    )

    # Show the plots of the test and train set (make sure they look similar!)
    y_train.sort_values().reset_index()["HITS_DAY"].plot()
    y_test.sort_values().reset_index()["HITS_DAY"].plot()

    # Create the regressor
    estimator = xgboost.XGBRegressor(objective="reg:squaredlogerror")

    # Fit the regressor
    estimator.fit(X_train, y_train)

    # Predict on the test set
    predictions = estimator.predict(X_test)
    df = pandas.DataFrame({"ACTUAL": y_test, "PREDICTED": predictions})

    # Show the actual vs predicted
    df.sort_values("ACTUAL").reset_index()[["ACTUAL", "PREDICTED"]].plot()

    # Show some evaluation metrics
    print(f"Mean squared error: {mean_squared_error(y_test.values, predictions)}")
    print(f"R2 score: {r2_score(y_test.values, predictions)}")

Output:

    Mean squared error: 0.01525351142868279
    R2 score: 0.07857787102063485