I have data with a clear exponential dependency. I tried to fit a curve through it with two different, very simple models.

The first one is a straightforward exponential fit. For the second one, I log-transformed the y values and then used a linear regression.
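Written out, and assuming `curve_fit`'s default unweighted least squares, the two models minimize different objectives. The first fits $y$ directly; the second fits $\ln y$ with a line through the origin, which has a closed-form slope:

$$\hat{k} = \arg\min_k \sum_i \left(y_i - e^{k x_i}\right)^2$$

$$\hat{m} = \arg\min_m \sum_i \left(\ln y_i - m x_i\right)^2 = \frac{\sum_i x_i \ln y_i}{\sum_i x_i^2}$$

Both curves have the form $y = e^{cx}$; only the quantity whose squared residuals are summed differs. If every point lay exactly on one exponential curve, both objectives would reach zero at the same rate and the estimates would agree. With scatter in the data they generally do not.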

To plot the resulting line, I then raised e to the power of the fitted result.

However, when I plot both resulting regression lines, they look quite different. Their R² values are also quite different (0.964 vs. 0.939).
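A minimal sketch of one way to compare the two fits, using hypothetical synthetic data (exponential decay with multiplicative log-normal noise; the rate, noise level and seed are arbitrary assumptions) rather than the data from the question: score each fit with R² both on the original y scale and on the log scale. Assuming the optimizer converges to the minimum, each fit should score at least as well as the other on the scale it was fitted on. The helper `r2` is a hand-written stand-in for `sklearn.metrics.r2_score`.

```python
import numpy as np
from scipy.optimize import curve_fit


def exp_model(x, k):
    # y = e^(k x), fitted by least squares on y itself
    return np.exp(k * x)


def lin_model(x, m):
    # ln(y) = m x, fitted by least squares on ln(y)
    return m * x


def r2(obs, pred):
    # Coefficient of determination: 1 - SS_res / SS_tot
    ss_res = np.sum((obs - pred) ** 2)
    ss_tot = np.sum((obs - np.mean(obs)) ** 2)
    return 1.0 - ss_res / ss_tot


# Hypothetical data: exponential decay with multiplicative noise (assumption)
rng = np.random.default_rng(0)
x = np.linspace(0.03, 0.87, 20)
y = np.exp(-3.5 * x) * rng.lognormal(sigma=0.15, size=x.size)

(k,), _ = curve_fit(exp_model, x, y, p0=[-1.0])
(m,), _ = curve_fit(lin_model, x, np.log(y))

# Score both fitted curves on the y scale and on the ln(y) scale
r2_y = {"exp": r2(y, exp_model(x, k)), "loglin": r2(y, np.exp(m * x))}
r2_log = {"exp": r2(np.log(y), k * x), "loglin": r2(np.log(y), m * x)}

print("rates:", k, m)
print("R2 on y scale:", r2_y)
print("R2 on log scale:", r2_log)
```

Which R² is the relevant one depends on the scale on which the errors are meant to be measured; `r2_score(y, ...)` in the question computes it on the y scale.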

Can somebody explain to me why the fits are so different? I honestly expected both models to produce the same curve.
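For comparison, a sketch under the assumption of noise-free data lying exactly on $y = e^{-3.5x}$ (a hypothetical rate; the x range only roughly matches the question's): here both approaches should recover the same rate, which points to the scatter in the data, weighted differently by each loss, as the source of the difference rather than the model form itself.

```python
import numpy as np
from scipy.optimize import curve_fit


def exp_model(x, k):
    # y = e^(k x), fitted on the y scale
    return np.exp(k * x)


def lin_model(x, m):
    # ln(y) = m x, fitted on the log scale
    return m * x


# Hypothetical noise-free data lying exactly on y = e^(-3.5 x)
x = np.linspace(0.03, 0.87, 20)
y = np.exp(-3.5 * x)

(k,), _ = curve_fit(exp_model, x, y, p0=[-1.0])
(m,), _ = curve_fit(lin_model, x, np.log(y))

print("direct exponential fit:", k)
print("log-linear fit:", m)
```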

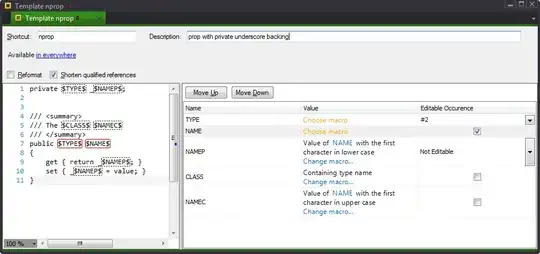

Below is the code that I used to generate the curves.

```python
import matplotlib.pyplot as plt
import numpy as np
from scipy.optimize import curve_fit
from sklearn.metrics import r2_score


def exp(x, k):
    # Model 1: y = e^(k x), fitted directly to y
    return np.exp(k * x)


def lin(x, m):
    # Model 2: ln(y) = m x, fitted to the log-transformed y values
    return m * x


x = np.array([0.03553744809541667, 0.07393361944488888, 0.11713398354352941, 0.1574279442442857, 0.20574484316400002,
              0.24638269718399997, 0.28022173237600007, 0.33088392763600005, 0.37608523866, 0.4235348808,
              0.4698941935266667,
              0.5049780023645001, 0.53193232248, 0.59661874698, 0.64686695376, 0.6765964062965002, 0.7195010072795001,
              0.7624056082625001, 0.8053102092455002, 0.8696671107200001])

y = np.array([1.0, 0.9180040755624065, 0.7580780029008654, 0.662359339541471, 0.556415757973503, 0.4575163368602455,
              0.3982995279500034, 0.3309496816813175, 0.25142343840921577, 0.21526738042912116, 0.19490849614884595,
              0.12714651046365663, 0.12714651046365663, 0.1015770731180174, 0.0728982261567812, 0.04180399979351543,
              0.04180399979351543, 0.04180399979351543, 0.04180399979351543, 0.04180399979351543])

k_exp = curve_fit(exp, x, y)[0]
m_lin = curve_fit(lin, x, np.log(y))[0]

x_ticks = np.linspace(x.min(), x.max(), 100)

# Both R² values are computed on the original y scale
print("Exponential fit", r2_score(y, exp(x, k_exp)))  # 0.964
print("Log linear fit", r2_score(y, np.exp(x * m_lin)))  # 0.939

plt.scatter(x, y, c="k", s=5)
plt.plot(x_ticks, exp(x_ticks, k_exp), "r--", label="Exponential fit")
# Back-transform the log-linear fit with e^(m x)
plt.plot(x_ticks, np.exp(x_ticks * m_lin), label="Log-linear fit")
plt.legend()
plt.show()
```