I'm using Matlab command fitcdiscr to implement an LDA with 379 features and 8 classes. I would like to get a global weight for each feature, to investigate their influence in the prediction. How can I obtain it from the pairwise (for each pair of classes) coefficients in the field Coeffs of the ClassificationDiscriminant object?

-

What do you mean by a "global weight"? The eigenvalues? – obchardon Oct 29 '20 at 18:45

-

Hi! I meant a weight (or score) for each feature that will allow me to rank features based on their importance for classification. I found now weights for the 8x7/2=28 pairwise classifiers (all combinations of the 8 classes) but I was wondering if I can combine them in some meaningful way to get a "global" score for the feature in the classification of the 8 classes. My reasoning might be wrong and not applicable to LDA, sorry, I'm not an expert on this! Thanks in advance for any tips. – Giulia Nov 03 '20 at 08:07

-

I think you are missing some important concepts here. The goal of an LDA is to linearly combine the features into new features that better differentiate the classes! Check my answer. – obchardon Nov 03 '20 at 15:01

1 Answer

It looks like fitcdiscr does not output the eigenvalues or the eigenvectors.

I'm not going to explain what eigenvectors and eigenvalues are here, since there is plenty of documentation on the web. But basically, the eigenvectors produced determine the axes that maximize the separation between the classes.
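For reference, here is a compact statement of the eigenproblem in the usual textbook formulation of LDA. The symbols are introduced here for illustration only: $C$ is the number of classes, $N_c$ the number of observations in class $c$, $\mu_c$ the mean of class $c$ and $\mu$ the global mean.

```latex
$$
S_W = \sum_{c=1}^{C} \sum_{x_i \in c} (x_i - \mu_c)(x_i - \mu_c)^{\top}, \qquad
S_B = \sum_{c=1}^{C} N_c \, (\mu_c - \mu)(\mu_c - \mu)^{\top}, \qquad
S_W^{-1} S_B \, v = \lambda \, v
$$
```

Each eigenvector $v$ is a discriminant direction and its eigenvalue $\lambda$ measures how well the classes are separated along it. Since $S_B$ has rank at most $C-1$, at most $C-1$ eigenvalues are nonzero, so with 3 classes only 2 directions can carry information.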

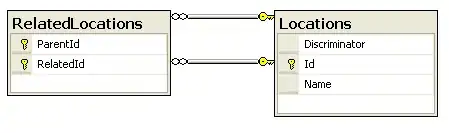

I've written a minimal example (inspired by this excellent article) that outputs both of them:

    % Load the fisheriris dataset
    load fisheriris
    feature = meas;     % 150x4 array
    class = species;    % 150x1 cell

    % Extract the unique classes and the class index of each observation
    [ucl,~,idc] = unique(class);

    % Number of parameters and number of classes
    np = size(feature,2);
    nc = length(ucl);

    % Mean by class
    MBC = splitapply(@mean,feature,idc);

    % Compute the Within class Scatter Matrix WSM
    WSM = zeros(np);
    for ii = 1:nc
        FM = feature(idc==ii,:)-MBC(ii,:);
        WSM = WSM + FM.'*FM;
    end
    WSM

    % Compute the Between class Scatter Matrix BSM
    BSM = zeros(np);
    GPC = accumarray(idc,ones(size(class)));    % number of observations per class
    for ii = 1:nc
        BSM = BSM + GPC(ii)*((MBC(ii,:)-mean(feature)).'*(MBC(ii,:)-mean(feature)));
    end
    BSM

    % Now we compute the eigenvalues and the eigenvectors
    [eig_vec,eig_val] = eig(inv(WSM)*BSM)

    % Compute the new features:
    new_feature = feature*eig_vec

With:

    eig_vec =
    [-0.2087 -0.0065  0.7666 -0.4924   % -> feature 1
     -0.3862 -0.5866 -0.0839  0.4417   % -> feature 2
      0.5540  0.2526 -0.0291  0.2875   % -> feature 3
      0.7074 -0.7695 -0.6359 -0.5699]  % -> feature 4

    % So the first new feature is a linear combination of
    % -0.2087*feature1 + -0.3862*feature2 + 0.5540*feature3 + 0.7074*feature4

    eig_val =
    [32.1919    % eigenvalue of the new feature 1
      0.2854    % eigenvalue of the new feature 2
      0.0000    % eigenvalue of the new feature 3
     -0.0000]   % eigenvalue of the new feature 4

In this case we have 4 features. Here is the histogram of these 4 features (1 class = 1 color):

We see that features 3 and 4 are pretty good if we want to distinguish the different classes, but not perfect.

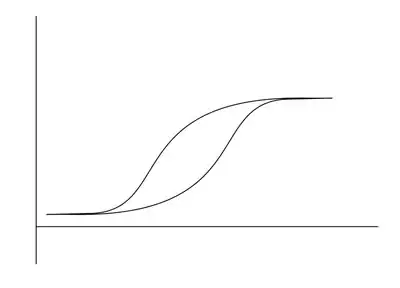

Now, after the LDA, we have those new features:

And we see that almost all the information has been gathered in the first new feature (new feature 1). All the other new features are pretty useless, so we keep only new feature 1 and drop the others. We now have a 1D dataset instead of a 4D dataset.
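The "keep only the useful new features" step can be sketched as follows. This is a minimal, self-contained sketch, not part of the original example: `eig` does not promise any particular ordering, so the eigenvalues are sorted explicitly, and the 0.99 cutoff as well as the names `lambda`, `W`, `explained`, `k` and `reduced_feature` are assumptions chosen for illustration.

```matlab
% Sketch: rank the LDA directions by eigenvalue and keep the dominant ones.
load fisheriris                        % provides meas (150x4) and species (150x1 cell)
feature = meas;
[ucl, ~, idc] = unique(species);       % class labels and class index of each row
nc = numel(ucl);
mu  = mean(feature, 1);                % global mean (1x4)
MBC = splitapply(@(x) mean(x, 1), feature, idc);   % class means (3x4)

WSM = zeros(size(feature, 2));         % within-class scatter
BSM = zeros(size(feature, 2));         % between-class scatter
for ii = 1:nc
    Xc  = feature(idc == ii, :) - MBC(ii, :);      % centered rows of class ii
    WSM = WSM + Xc.' * Xc;
    d   = MBC(ii, :) - mu;
    BSM = BSM + sum(idc == ii) * (d.' * d);
end

[eig_vec, eig_val] = eig(WSM \ BSM);   % eig_val is a diagonal matrix
lambda = real(diag(eig_val));          % real() drops round-off imaginary parts, if any
[lambda, order] = sort(lambda, 'descend');
W = real(eig_vec(:, order));           % columns reordered to match lambda

explained = lambda / sum(lambda);      % share of the total eigenvalue sum per direction
k = find(cumsum(explained) >= 0.99, 1);    % 0.99 is an arbitrary illustrative threshold
reduced_feature = feature * W(:, 1:k);     % 150xk projected data
```

With the eigenvalues listed above (32.1919 and 0.2854), the first direction alone accounts for about 99% of the total, which matches the conclusion that a single new feature is enough here.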

-

Thanks a lot, all clear now, very useful. So, by looking at the first (most discriminant) eigenvectors (the ones with higher eigenvalues) I could have an idea of the (old) features contributing the most to the discrimination (the ones with higher "weight" in the linear combination). – Giulia Nov 04 '20 at 10:28

-

Yes, exactly! But note that in practice you often keep more than one eigenvector (when several eigenvectors have a large associated eigenvalue). If you do `eig_vec*diag(eig_val)` you will obtain a good estimate of "how relatively important a feature is". – obchardon Nov 04 '20 at 12:26
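One possible reading of that last expression, as a rough sketch: scale each eigenvector by its eigenvalue, then collapse each row into a single number per original feature. The numbers below are copied from the output printed in the answer. The names `lambda`, `weighted`, `score` and `ranking`, and the choice of summing absolute values, are assumptions made for illustration rather than an established importance measure. If `eig_val` is the diagonal matrix returned by `eig`, take `lambda = diag(eig_val)` first.

```matlab
% Values copied from the output printed in the answer (rows = original features).
eig_vec = [-0.2087 -0.0065  0.7666 -0.4924
           -0.3862 -0.5866 -0.0839  0.4417
            0.5540  0.2526 -0.0291  0.2875
            0.7074 -0.7695 -0.6359 -0.5699];
lambda  = [32.1919; 0.2854; 0; 0];      % eigenvalues as a column vector

weighted = eig_vec * diag(lambda);      % column j of eig_vec scaled by lambda(j)
score    = sum(abs(weighted), 2);       % hypothetical score: one value per original feature
[~, ranking] = sort(score, 'descend');  % feature indices, most influential first
```

For these numbers the ranking is feature 4, then 3, 2 and 1, which agrees with the histograms: features 3 and 4 separate the classes best. Keep in mind that the loadings depend on the units of each feature, so standardizing the features before the LDA (an extra step not in the original example) would probably make such scores easier to compare.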