First of all, just to make sure everyone is on the same page, compact letter displays (CLDs) are constructed in such a way that two means that share the same letter are not "significantly different" from one another at the stated significance level.

In a nice balanced situation with homogeneous error variance (that is, the SE of each comparison is the same), then, no matter what, all of the groups will be cohesive, in the sense that if two means share a grouping letter, all means between them share the same grouping letter. However, in the case where the SEs for comparing two means are not all the same, then there can be gaps in the groupings; and when there are huge disparities among those SEs compared with the differences among the means, such gaps are almost certain to occur, leading to what may be regarded as "crazy" compact letter displays.

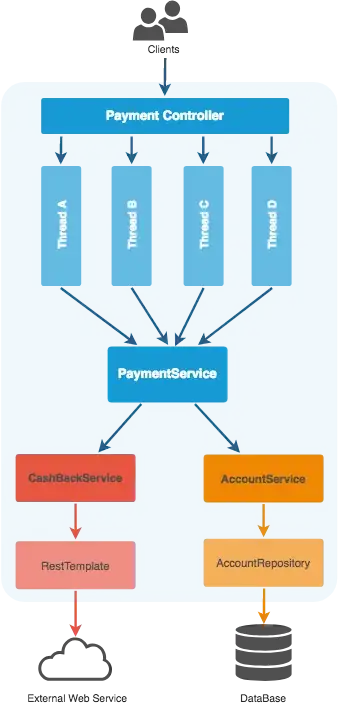

To understand the specifics in what we see here, first note that in this simple model, the fitted values are exactly the same as the estimated marginal means (EMMs); thus, the vertical stacks of points in the residuals-versus-fitted plot appear in exactly the same order, left-to-right, as the top-to-bottom order of the cld(interBoxMusling) call. This makes it easy to identify what is going on with each entry. For example, the leftmost stack of points in the plot corresponds to station 7, whose EMM is 6.87, and for which there is quite a bit of data. Note also that Station 7 has only one grouping letter, a, so it is grouped with stations 11, 1, 10, 12, and 8, but statistically different from the other stations. There are gaps in the a grouping, the first one occurring with station 4 (4th from the left in the plot) which has an EMM of 7.89; there is quite a bit of data on that station as well as station 7, so those two stations are significantly different and hence not in the same group.

But what about stations 12 and 8, which are quite a ways down the line, and the two EMMs to either side of the "9" tick mark in the plot? They are also in group a. In the plot, observe that there are only 2 observations in each of those stations. That makes it hard for those stations to differ significantly from other means -- there just isn't much data to indicate there'd be a difference, and statistical significance, above all, has to do with whether there is evidence (data) to conclude a difference. In fact, due to the small sample sizes and that those two stations are midway in the list of means, they do not differ statistically from any other station -- they share every single grouping letter.

The largest mean belongs to station 15,and there is just one observation for that station (note that the SE of that mean is greater than any other). But because it is the largest EMM, it still differs significantly from some of the others, and does not share grouping letters a, b, or c.

In general, if you look at the means with the most grouping letters, those tend to be the ones with the smallest sample sizes, and vice versa.

It is the disparities in sample sizes that make the CLD look "crazy." I suggest that this is an example showing how CLDs can be confusing and that is one of several undesirable qualities of them. The others I would mention are:

- CLDS rely on a hard cutoff in P values, in this case 0.05, whereas some other ways of displaying P values allow for a gray area in judging significance.

- CLDs highlight non-findings rather than findings. Two means being not statistically different is not a finding, it is a situation where we can't say anything definitive. And that's really why the CLD looks crazy in a situation like this. Grouping letters make it appear that those means belong together, when in fact they simply fail to not belong together.

Another somewhat unrelated point: the craziness of the CLD in this example has nothing to do with unequal variances. That is because the results displayed depend only on the model that was fitted to the data, and the aov() model presumes that all observations have the same variance. This is notable again in stations 12 and 8, which each have two observations, but they are quite close together in station 8 and farther apart in station 12. The table results show the same SE for both EMMs, and that's because they have the same sample size. Had we used a model that allowed different variances, then those SEs would have been different. The CLD display would have been different too: still crazy, but crazy in a different way.

By the way, the bar graph shown is unsuitable for this analysis for exactly the above reasons, that it estimates a different variance for each station. Note that the error bars for stations 8 and 12 are very different, even though the tabled SEs are the same. (And note there is no error bar at all for station 15, because there is only one observation and thus no error-variance estimate.) When presenting analyses, it is important for all of the results displayed are based on the same model, unless it is clearly stated that different models are being considered.

The emmeans package does offer other ways of displaying pairwise comparisons reasonably compactly. Two to consider here are pwpp() (pairwise P-value plot), which shows P values graphically, and pwpm(), which shows them in matrix form. And of course, the pairs() or summary() functions are available for a detailed, less compact presentation. The plot() function has a comparisons option that displays "comparison arrows", but it doesn't always work and almost certainly would fail with this example.