Query is really simple i.e

SELECT

col1 , date_col

FROM table USE INDEX (device_date_col)

WHERE

device_id = "some_value"

AND date_col BETWEEN "2020-03-16 00:00:00" and "2020-04-16 00:00:00"

limit 1000000 ;

but it takes 30 to 60 seconds to finally returns the result, when running first time. And then it returns result under 10 seconds. And another problem is that when I change the device_id it again takes long time. I cannot understand why it's happening beside using proper indexing.

We know that, API Gateway has 30 seconds limit due to this our API encounter timeout. It happens suddenly from today.

Main goal is to retrieve minutely data, it returns less data but also takes long time i.e

....

AND col1 IS NOT NULL

GROUP BY

DATE(date_col),

HOUR(date_col),

MINUTE(date_col)

Below are some useful info

- AWS RDS having instance db.m4.large (vCPU 2 and RAM 8GB).

- MySql version 5.6.x

- composite index on date_col and device_col

- using InnoDB

- table has no id field (primary key)

- total rows in table are 7.5 million

- each device has data every 3 seconds

- query return rows around 600k

- explain query shows it is using indexing

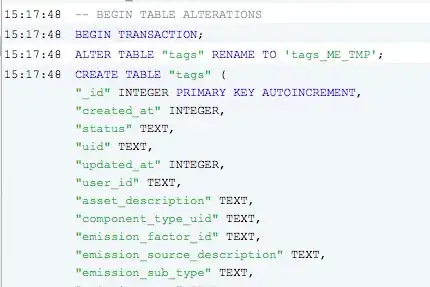

UPDATE

MySql Workbench shows that when I run query without group by it takes 2 seconds to execute but > 30 seconds to retrieve and when I use group by then server takes > 30 to execute but 2 seconds to retrieve.

I think we need to more

CPU for processed data using group byMore RAM for extracting all data (without group by)

Below Image is showing the query response without group by. Look at the duration/Fetch time