I installed the stable/prometheus chart with Helm. By default, the kubernetes-service-endpoints job scrapes both node-exporter and kube-state-metrics, which are distinguished by the component label. I added the configuration below to prometheus.yml to attach namespace, pod and node labels.

    - source_labels: [__meta_kubernetes_namespace]
      separator: ;
      regex: (.*)
      target_label: namespace
      replacement: $1
      action: replace
    - source_labels: [__meta_kubernetes_pod_name]
      separator: ;
      regex: (.*)
      target_label: pod
      replacement: $1
      action: replace
    - source_labels: [__meta_kubernetes_pod_node_name]
      separator: ;
      regex: (.*)
      target_label: node
      replacement: $1
      action: replace

kube_pod_info{component="kube-state-metrics"} already carried its own namespace, pod and node labels, so the conflicting scraped labels were renamed to exported_namespace, exported_pod and exported_node. The metric node_cpu_seconds_total{component="node-exporter"} now correctly has the namespace, pod and node labels.
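For context, the exported_ prefix comes from the scrape job's honor_labels setting, which defaults to false: when a scraped label has the same name as a label attached to the target, Prometheus keeps the target label and renames the scraped one to exported_<name>. A minimal sketch of the relevant part of the job, with the default written out explicitly (the trimmed-down job body is only an illustration, not the chart's full config):

```yaml
# Sketch only: shows where the name clash happens, not a complete job.
- job_name: kubernetes-service-endpoints
  # Prometheus default. On a clash, the scraped label becomes exported_<name>
  # and the target label keeps the plain name.
  honor_labels: false
  kubernetes_sd_configs:
    - role: endpoints
  relabel_configs:
    # Attaches a target label named "pod". kube_pod_info already exposes its
    # own "pod" label, so that scraped value ends up in "exported_pod".
    - source_labels: [__meta_kubernetes_pod_name]
      target_label: pod
```

With this in place, pod on a kube_pod_info series names the kube-state-metrics pod that was scraped, while exported_pod names the pod the series describes.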

For the labels to be consistent, both metrics need namespace, pod and node to carry the right values. For kube_pod_info, I should be able to achieve that by overwriting each label with the value of its exported_ counterpart. I tried adding the config below, but to no avail.

    - source_labels: [__name__, exported_pod]
      regex: "kube_pod_info;(.+)"
      target_label: pod
    - source_labels: [__name__, exported_namespace]
      regex: "kube_pod_info;(.+)"
      target_label: namespace
    - source_labels: [__name__, exported_node]
      regex: "kube_pod_info;(.+)"
      target_label: node
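To make the intent of the rules above easier to check, here is the first one written out with Prometheus's relabeling defaults made explicit (separator `;`, replacement `$1`, action `replace`). This is a sketch of how I understand the rule to be evaluated, assuming it sits under metric_relabel_configs, since the exported_ labels only exist after the scrape and are not visible to relabel_configs:

```yaml
metric_relabel_configs:
  - source_labels: [__name__, exported_pod]
    separator: ;                  # default: the values are joined as "<__name__>;<exported_pod>"
    regex: "kube_pod_info;(.+)"   # anchored at both ends; matches only kube_pod_info with a non-empty exported_pod
    replacement: $1               # default: the captured exported_pod value
    target_label: pod             # overwrites the pod label that was attached to the target
    action: replace               # default action
```

For any other metric, or for a kube_pod_info series without an exported_pod label, the regex does not match and the series is left unchanged.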

A similar approach was mentioned here. I can't see what is wrong with my configuration. Any pointers toward a fix would be very helpful.

Update (adding the complete job):

    - job_name: kubernetes-service-endpoints
      kubernetes_sd_configs:
        - role: endpoints
      metric_relabel_configs:
        - source_labels: [__name__, exported_pod]
          regex: "kube_pod_info;(.+)"
          target_label: pod
        - source_labels: [__name__, exported_namespace]
          regex: "kube_pod_info;(.+)"
          target_label: namespace
        - source_labels: [__name__, exported_node]
          regex: "kube_pod_info;(.+)"
          target_label: node
      relabel_configs:
        - action: keep
          regex: true
          source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_scrape
        - action: replace
          regex: (https?)
          source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_scheme
          target_label: __scheme__
        - action: replace
          regex: (.+)
          source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_path
          target_label: __metrics_path__
        - action: replace
          regex: ([^:]+)(?::\d+)?;(\d+)
          replacement: $1:$2
          source_labels:
            - __address__
            - __meta_kubernetes_service_annotation_prometheus_io_port
          target_label: __address__
        - action: labelmap
          regex: __meta_kubernetes_service_label_(.+)
        - action: replace
          source_labels:
            - __meta_kubernetes_namespace
          target_label: namespace
        - action: replace
          regex: (.*)
          replacement: $1
          separator: ;
          source_labels:
            - __meta_kubernetes_pod_name
          target_label: pod
        - action: replace
          source_labels:
            - __meta_kubernetes_pod_node_name
          target_label: node

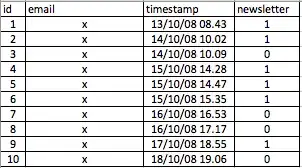

And the result from PromQL: