I am running boosting on a standard dataset (Abalone) with both the SAMME and SAMME.R boosting algorithms, and the graphs I obtained are not what I was expecting. It is a supervised, multi-class classification problem.

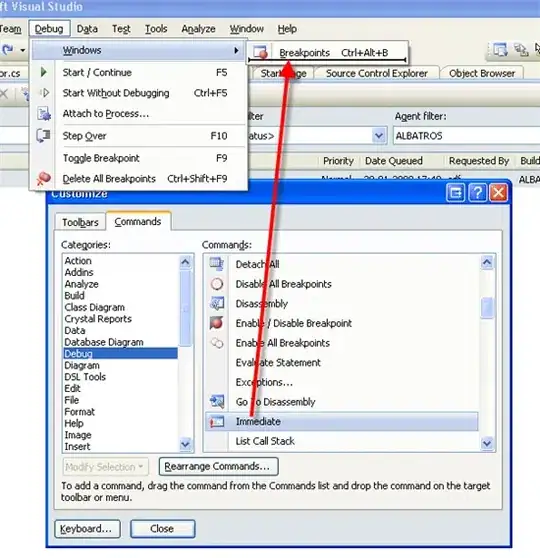

Here is the graph

I was expecting a graph where the test error decreases as the number of trees increases. But here the error seems to increase or stay constant. Here is the code for this plot:

    import matplotlib.pyplot as plt
    from sklearn.ensemble import AdaBoostClassifier
    from sklearn.metrics import accuracy_score
    from sklearn.tree import DecisionTreeClassifier

    # X_train, y_train, X_test, y_test come from a train/test split of Abalone.

    # algorithm is left at its default, which I understand to be SAMME.R
    bdt_real = AdaBoostClassifier(
        DecisionTreeClassifier(max_depth=2),
        n_estimators=600,
        learning_rate=1)

    bdt_discrete = AdaBoostClassifier(
        DecisionTreeClassifier(max_depth=2),
        n_estimators=600,
        learning_rate=1.5,
        algorithm="SAMME")

    bdt_real.fit(X_train, y_train)
    bdt_discrete.fit(X_train, y_train)

    real_test_errors = []
    discrete_test_errors = []

    # Test error after each boosting iteration, for both models
    for real_test_predict, discrete_test_predict in zip(
            bdt_real.staged_predict(X_test), bdt_discrete.staged_predict(X_test)):
        real_test_errors.append(
            1. - accuracy_score(y_test, real_test_predict))
        discrete_test_errors.append(
            1. - accuracy_score(y_test, discrete_test_predict))

    n_trees_discrete = len(bdt_discrete)
    n_trees_real = len(bdt_real)

    # Boosting might terminate early, but the following arrays are always
    # n_estimators long. We crop them to the actual number of trees here:
    discrete_estimator_errors = bdt_discrete.estimator_errors_[:n_trees_discrete]
    real_estimator_errors = bdt_real.estimator_errors_[:n_trees_real]
    discrete_estimator_weights = bdt_discrete.estimator_weights_[:n_trees_discrete]

    # print discrete_test_errors
    # print real_test_errors

    plt.figure(figsize=(15, 5))

    plt.subplot(131)
    plt.plot(range(1, n_trees_discrete + 1),
             discrete_test_errors, c='black', label='SAMME')
    plt.plot(range(1, n_trees_real + 1),
             real_test_errors, c='black',
             linestyle='dashed', label='SAMME.R')
    plt.legend()
    # plt.ylim(0.0, 1.0)
    plt.ylabel('Test Error')
    plt.xlabel('Number of Trees')

Does anyone have pointers as to why the error would increase with the number of trees? I considered that the model might be overfitting, but there is no error reduction at all. With overfitting, I would expect the error to decrease at first and only then increase.
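One check that might help separate overfitting from a model that never learns is to compute the staged error on the training set as well and compare the two curves. The following is only a minimal sketch of that idea: `staged_error` and `best_stage` are hypothetical helper names, and the small lists are made-up stand-ins for staged predictions, not results from Abalone.

```python
def staged_error(staged_predictions, y_true):
    """Return the misclassification rate after each boosting stage."""
    y_true = list(y_true)
    errors = []
    for stage_pred in staged_predictions:
        # Count predictions that differ from the true label at this stage
        wrong = sum(1 for p, t in zip(stage_pred, y_true) if p != t)
        errors.append(wrong / len(y_true))
    return errors


def best_stage(test_errors):
    """Return the 1-based stage with the lowest test error (first on ties)."""
    return min(range(len(test_errors)), key=test_errors.__getitem__) + 1


# Toy stand-ins for staged predictions (hypothetical values, three stages)
y_train = [0, 1, 2, 1]
y_test = [0, 1, 2, 2]
train_stages = [[0, 1, 1, 1], [0, 1, 2, 1], [0, 1, 2, 1]]
test_stages = [[0, 1, 2, 1], [0, 1, 1, 1], [0, 2, 1, 1]]

train_errors = staged_error(train_stages, y_train)
test_errors = staged_error(test_stages, y_test)

print("train:", train_errors)
print("test: ", test_errors)
print("lowest test error at stage", best_stage(test_errors))
```

With the fitted models from the code above, the same helper could presumably be called as `staged_error(bdt_discrete.staged_predict(X_train), y_train)` and again with `X_test, y_test`. Training error falling while test error rises would point to overfitting; both staying flat or rising would suggest the boosting itself is not improving.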