I'm working on a program to solve the Bloch (or more precise the Bloch McConnell) equations in python. So the equation to solve is:

where A is a NxN matrix, A+ its pseudoinverse and M0 and B are vectors of size N.

The special thing is that I wanna solve the equation for several offsets (and thus several matrices A) at the same time. The new dimensions are:

- A: MxNxN

- b: Nx1

- M0: MxNx1

The conventional version of the program (using a loop over the 1st dimension of size M) works fine, but I'm stuck at one point in the 'parallel version'.

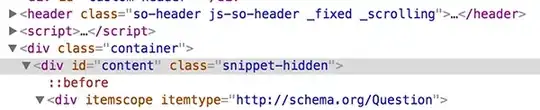

At the moment my code looks like this:

def bmcsim(A, b, M0, timestep):

ex = myexpm(A*timestep) # returns stacked array of size MxNxN

M = np.zeros_like(M0)

for n in range(ex.shape[0]):

A_tmp = A[n,:,:]

A_b = np.linalg.lstsq(A_tmp ,b, rcond=None)[0]

M[n,:,:] = np.abs(np.real(np.dot(ex[n,:,:], M0[n,:,:] + A_b) - A_b))

return M

and I would like to get rid of that for n in range(ex.shape[0]) loop. Unfortunately, np.linalg.lstsq doesn't work for stacked arrays, does it? In myexpm is used np.apply_along_axis for a another problem:

def myexpm(A):

vals,vects = np.linalg.eig(A)

tmp = np.einsum('ijk,ikl->ijl', vects, np.apply_along_axis(np.diag, -1, np.exp(vals)))

return np.einsum('ijk,ikl->ijl', tmp, np.linalg.inv(vects))

However, that just works for 1D input data. Is there something similar that I can use with np.linalg.lstsq? The np.dot in bmcsim will be replaced with np.einsum like in myexpm I guess, or are there better ways?

Thanks for your help!

Update:

I just realized that I can replace np.linalg.lstsq(A,b) with np.linalg.solve(A.T.dot(A), A.T.dot(b)) and managed to get rid of the loop this way:

def bmcsim2(A, b, M0, timestep):

ex = myexpm(A*timestep)

b_stack = np.repeat(b[np.newaxis, :, :], offsets.size, axis=0)

tmp_left = np.einsum('kji,ikl->ijl', np.transpose(A), A)

tmp_right = np.einsum('kji,ikl->ijl', np.transpose(A), b_stack)

A_b_stack = np.linalg.solve(tmp_left , tmp_right )

return np.abs(np.real(np.einsum('ijk,ikl->ijl',ex, M0+A_b_stack ) - A_b_stack ))

This is about 3 times faster, but still a bit complicated. I hope there is a better (shorter/easier) way, that's maybe even faster?!