I have to do an assignment in which I should compare the function solvePnP() used with SOLVEPNP_EPNP and solvePnPRansac() used with SOLVEPNP_ITERATIVE. The goal is to calculate a warped image from an input image.

To do this, I am given an RGB input image, the corresponding 16-bit depth image, the camera intrinsics, and a list of feature matches between the input image and the desired warped image (which shows the same scene from a different perspective).

This is how I went about this task so far:

- Calculate a list of 3D object points from the depth image and the intrinsics, one for each feature match.

- Use solvePnP() and solvePnPRansac() with the respective algorithms, with the calculated 3D object points and the feature match points of the resulting image as inputs. As a result I get a rotation and a translation vector for each method.

- As a sanity check, I calculate the average reprojection error by applying projectPoints() to all object points and comparing the projected points to the feature match points of the resulting image.

- Finally, I calculate 3D object points for every pixel of the input image and project them using the rotation and translation vectors from before. Each projected point gets the color of the corresponding pixel in the input image, which yields the final warped image.
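The first and last steps can be sketched roughly as follows. This is a minimal NumPy sketch, not my exact code: the function names backproject and forward_warp, the depth_scale parameter (assuming the 16-bit depth is stored in millimetres) and the convention that a depth of 0 means "no measurement" are assumptions made for illustration. R is the 3x3 rotation matrix, which would be obtained from the rotation vector with cv2.Rodrigues(rvec).

```python
import numpy as np


def backproject(depth, K, depth_scale=1000.0):
    """Back-project every pixel of a depth image into the camera frame.

    depth_scale converts raw depth units to metres (assumed: millimetres).
    Returns an array of shape (h, w, 3) holding X, Y, Z per pixel.
    """
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth.astype(np.float64) / depth_scale
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=-1)


def forward_warp(rgb, depth, K, R, t, depth_scale=1000.0):
    """Project every input pixel into the target view and copy its color."""
    h, w = depth.shape
    pts = backproject(depth, K, depth_scale).reshape(-1, 3)
    colors = rgb.reshape(-1, rgb.shape[-1])

    # Transform the points into the target camera frame: X' = R X + t.
    cam = pts @ R.T + np.asarray(t, dtype=np.float64).reshape(1, 3)

    # Skip pixels without depth (assumed to be 0) and points behind the camera.
    valid = (pts[:, 2] > 0) & (cam[:, 2] > 0)
    cam, colors = cam[valid], colors[valid]

    # Pinhole projection, rounded to the nearest pixel.
    u = np.rint(K[0, 0] * cam[:, 0] / cam[:, 2] + K[0, 2]).astype(int)
    v = np.rint(K[1, 1] * cam[:, 1] / cam[:, 2] + K[1, 2]).astype(int)
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)

    # No z-buffer: if several points land on the same pixel, an arbitrary one wins.
    out = np.zeros_like(rgb)
    out[v[inside], u[inside]] = colors[inside]
    return out
```

For the feature matches, the object points are then just the rows of backproject(depth, K) at the matched pixel coordinates, e.g. backproject(depth, K)[v, u] for integer pixel arrays u and v.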

Using the steps described above, I get the following output with the RANSAC method:

This looks pretty much like the reference solution I have, so this should be mostly correct.

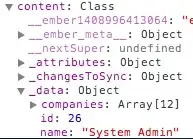

However, with solvePnP() using SOLVEPNP_EPNP, the resulting rotation and translation vectors look like this, which doesn't make sense at all:

================ solvePnP using SOVLEPNP_EPNP results: ===============

Rotation: [-4.3160208e+08; -4.3160208e+08; -4.3160208e+08]

Translation: [-4.3160208e+08; -4.3160208e+08; -4.3160208e+08]
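One thing that stands out in this output: all six components are the same value, and -4.3160208e+08 is what the byte pattern 0xCDCDCDCD reads as when interpreted as a 32-bit float. MSVC debug builds fill freshly allocated heap memory with 0xCD, so, assuming this was a Visual Studio debug build (an assumption, it is not stated above), the vectors may never have been written by solvePnP() at all, rather than holding a badly estimated pose. The bit pattern can be checked with a few lines (Python is used here purely for illustration):

```python
import struct

# Interpret four 0xCD bytes as an IEEE 754 single-precision float.
# All four bytes are equal, so the byte order does not matter.
value = struct.unpack("<f", b"\xcd\xcd\xcd\xcd")[0]
print(value)  # -431602080.0
```

If that is what happened, the return value of solvePnP() and the type and size of the output arrays passed to it would be the first things to inspect.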

The assignment sheet states that the list of feature matches contains some mismatches, i.e. outliers. As far as I know, RANSAC handles outliers better, but can this really be the reason for these weird results from the other method? I was expecting some anomalies, but this is completely wrong, and the resulting image is completely black since no points fall inside the image area.

Maybe someone can point me in the right direction.