I have been using the following piece of code to print the effective learning rate lr_t of the Adam() optimizer for my trainable_model.

    # Assumes: import numpy as np, import tensorflow as tf, from keras import backend as K
    # About 3% of the time during training, print Adam's effective learning rate.
    if np.random.uniform() * 100 < 3 and self.training:
        model = self.trainable_model
        _lr = tf.to_float(model.optimizer.lr, name='ToFloat')
        _decay = tf.to_float(model.optimizer.decay, name='ToFloat')
        _beta1 = tf.to_float(model.optimizer.beta_1, name='ToFloat')
        _beta2 = tf.to_float(model.optimizer.beta_2, name='ToFloat')
        _iterations = tf.to_float(model.optimizer.iterations, name='ToFloat')
        t = K.cast(_iterations, K.floatx()) + 1
        # Same bias-corrected step size as in Adam's update (uses _lr, not an undefined lr)
        _lr_t = _lr * (K.sqrt(1. - K.pow(_beta2, t)) / (1. - K.pow(_beta1, t)))
        print(" - LR_T: " + str(K.eval(_lr_t)))

What I don't understand is that this learning rate increases (with decay at its default value of 0).

If we look at the learning_rate equation in Adam, we find this:

    lr_t = lr * (K.sqrt(1. - K.pow(self.beta_2, t)) /
                 (1. - K.pow(self.beta_1, t)))

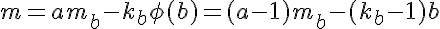

which corresponds to the equation (with default values for parameters):

    lr_t = 0.001*sqrt(1-0.999^x)/(1-0.9^x)
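To check the numbers without running the model, the formula can be evaluated directly. This is only a minimal sketch: `adam_lr_t` is a hypothetical helper name, and the defaults assume the Keras Adam defaults of lr=0.001, beta_1=0.9 and beta_2=0.999.

```python
import numpy as np

def adam_lr_t(t, lr=0.001, beta_1=0.9, beta_2=0.999):
    """Evaluate lr_t = lr * sqrt(1 - beta_2^t) / (1 - beta_1^t) for step(s) t.

    Hypothetical helper; the defaults are assumed to match Keras' Adam().
    """
    t = np.asarray(t, dtype=np.float64)
    return lr * np.sqrt(1.0 - beta_2 ** t) / (1.0 - beta_1 ** t)

# t starts at 1 because the optimizer uses iterations + 1.
steps = [1, 10, 100, 1000, 10000, 100000]
for step, value in zip(steps, adam_lr_t(steps)):
    print(f"t={step:>6d}  lr_t={value:.6f}")
```

Both bias-correction terms tend to 1 as t grows, so the value printed for very large t should be close to the base lr of 0.001.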

If we plot this equation, we obtain a curve which clearly shows that the learning_rate is increasing exponentially over time (since t starts at 1).

Can someone explain why this is the case? I have read everywhere that we should use a learning_rate that decays over time, not one that increases.

Does it mean that my neural network makes bigger updates over time as Adam's learning_rate increases?