I have a tensor A with size [batchSize,2,2,2] where batchSize is a placeholder. In a custom layer, I would like to map each value of this tensor to the closest value in a list c with length n. The list is my codebook and I would like to quantize each value in the tensor based on this codebook; i.e. find the closest value to each tensor value in the list and replace the tensor value with that.

I could not figure out a 'clean' tensor operation that does this quickly, and I cannot loop over batchSize. Is there a way to do this in TensorFlow?


deepsy


- Can you give an example of your codebook? Maybe you can use `https://www.tensorflow.org/api_docs/python/tf/quantization/quantize` if it is a standard min-max quantization. Or, if you have some key-value pairs, you might first do some normalization and then perform a key/value lookup via a `tf.contrib.lookup.HashTable`. – greeness May 27 '19 at 09:20

- @greeness Thank you for your reply. `tf.quantization.quantize` does not work for me since my quantization values are non-uniform. I think a hash table is not suitable for me either, since I draw the values of tensor `A` randomly from a Gaussian distribution. The codebook vector `c` holds the non-uniform quantized values of that Gaussian distribution and has length `100`. As a result, I'm mapping values drawn randomly from a continuous distribution to quantized values. – deepsy May 27 '19 at 18:48

1 Answer


If I understand correctly, this is doable with `tf.contrib.lookup.HashTable`. As an illustration, I used a normal distribution with mean=0, stddev=4.

```python
a = tf.random.normal(
    shape=[batch, 2, 2, 2],
    mean=0.0,
    stddev=4
)
```

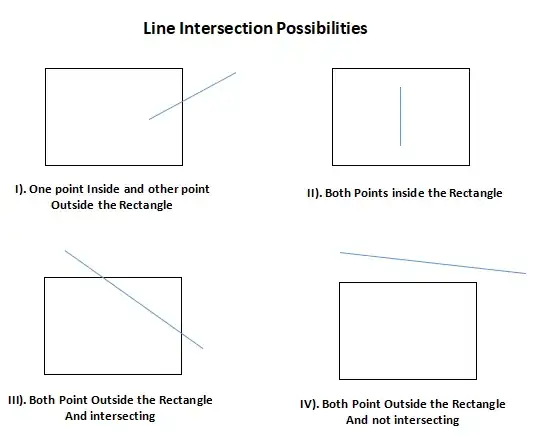

I quantize into only 5 buckets (labelled 0, 1, 2, 3, 4 in the figure). This extends to any length n. Note that I intentionally gave the buckets different widths.

My codebook is therefore:

```
a <= -2          -> bucket 4
-2 < a < -0.5    -> bucket 3
-0.5 <= a < 0.5  -> bucket 0
0.5 <= a < 2.5   -> bucket 1
a >= 2.5         -> bucket 2
```
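As a reference point, the bucket rules above can be written as a plain Python function. This is only a sketch for checking individual values; the name `bucket_of` is hypothetical and is not part of the TensorFlow code in this answer.

```python
def bucket_of(a):
    """Return the bucket index for a float, following the rules listed above."""
    if a <= -2.0:
        return 4
    elif a < -0.5:
        return 3
    elif a < 0.5:
        return 0
    elif a < 2.5:
        return 1
    return 2


# One sample value from the interior of each bucket.
print([bucket_of(x) for x in (-3.0, -1.0, 0.0, 1.0, 3.0)])  # [4, 3, 0, 1, 2]
```

The boundary values follow the inclusive/exclusive signs of the list: `-2` falls in bucket 4, `-0.5` in bucket 0, `0.5` in bucket 1 and `2.5` in bucket 2.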

The idea is to pre-create a key/value mapping from a scaled `a` to the bucket number. The number of <key, value> pairs depends on the input granularity you need; here I scale by 10. Below is the code that initializes the mapping table, followed by the mapping it produces (keys are the inputs scaled by 10).

```python
# The boundaries assume the input is clipped to min=-4, max=4.
# After scaling by 10, the boundaries become -40 and 40.
keys = list(range(-40, 41))
values = []
for k in keys:
    if k <= -20:
        values.append(4)
    elif k < -5:
        values.append(3)
    elif k < 5:
        values.append(0)
    elif k < 25:
        values.append(1)
    else:
        values.append(2)

# Print the full key -> bucket mapping.
for (k, v) in zip(keys, values):
    print("%2d -> %2d" % (k, v))
```

```
-40 -> 4
-39 -> 4
...
-22 -> 4
-21 -> 4
-20 -> 4
-19 -> 3
-18 -> 3
...
-7 -> 3
-6 -> 3
-5 -> 0
-4 -> 0
...
3 -> 0
4 -> 0
5 -> 1
6 -> 1
...
23 -> 1
24 -> 1
25 -> 2
26 -> 2
...
40 -> 2
```
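To see how a single float reaches a bucket through this table, here is a pure-Python sketch of the same steps: clip to [-4, 4], scale by 10, truncate to an integer key, then look the key up. It assumes truncation toward zero (which is what Python's `int()` does); the dict `table` and the function `lookup_bucket` are hypothetical names used only for this illustration.

```python
SCALE = 10  # same scale factor as in the answer

# Rebuild the key -> bucket table as a plain dict.
table = {}
for k in range(-40, 41):
    if k <= -20:
        table[k] = 4
    elif k < -5:
        table[k] = 3
    elif k < 5:
        table[k] = 0
    elif k < 25:
        table[k] = 1
    else:
        table[k] = 2


def lookup_bucket(a):
    """Clip, scale, truncate toward zero, then look up the bucket."""
    clipped = max(-4.0, min(4.0, a))
    key = int(clipped * SCALE)  # int() truncates toward zero
    return table[key]


samples = [-0.26980758, -5.56331968, 5.04240322, 1.01861215, -0.60313278]
print([lookup_bucket(x) for x in samples])  # [0, 4, 2, 1, 3]
print(lookup_bucket(-0.55))  # 0
```

The last line shows the effect of the chosen granularity: `-0.55` is truncated to key `-5` and lands in bucket 0, although the bucket rules would place it in bucket 3. A larger scale factor narrows such boundary regions at the cost of a larger table.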

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.contrib and tf.Session)

batch = 3
a = tf.random.normal(
    shape=[batch, 2, 2, 2],
    mean=0.0,
    stddev=4,
    dtype=tf.dtypes.float32
)

# Clip to the range covered by the table, then scale and cast to integer keys.
clip_a = tf.clip_by_value(a, clip_value_min=-4, clip_value_max=4)
SCALE = 10
scaled_clip_a = tf.cast(clip_a * SCALE, tf.int32)

# Keys that are missing from the table map to the default value -1.
table = tf.contrib.lookup.HashTable(
    tf.contrib.lookup.KeyValueTensorInitializer(keys, values), -1)

# Look up on a flattened tensor, then restore the original shape.
quantized_a = tf.reshape(
    table.lookup(tf.reshape(scaled_clip_a, [-1])),
    [batch, 2, 2, 2])

with tf.Session() as sess:
    table.init.run()
    a, clip_a, scaled_clip_a, quantized_a = sess.run(
        [a, clip_a, scaled_clip_a, quantized_a])
    print('a\n%s' % a)
    print('clip_a\n%s' % clip_a)
    print('scaled_clip_a\n%s' % scaled_clip_a)
    print('quantized_a\n%s' % quantized_a)
```

Result:

```
a
[[[[-0.26980758 -5.56331968]
   [ 5.04240322 -7.18292665]]

  [[-7.11545467 -3.24369478]
   [ 1.01861215 -0.04510783]]]

 [[[-0.28768024  0.2472897 ]
   [ 2.17780781 -5.79106379]]

  [[ 8.45582008  4.53902292]
   [ 0.138162   -6.19155598]]]

 [[[-7.5134449   4.56302166]
   [-0.30592337 -0.60313278]]

  [[-0.06204566  3.42917275]
   [-1.14547718  3.31167102]]]]
clip_a
[[[[-0.26980758 -4.        ]
   [ 4.         -4.        ]]

  [[-4.         -3.24369478]
   [ 1.01861215 -0.04510783]]]

 [[[-0.28768024  0.2472897 ]
   [ 2.17780781 -4.        ]]

  [[ 4.          4.        ]
   [ 0.138162   -4.        ]]]

 [[[-4.          4.        ]
   [-0.30592337 -0.60313278]]

  [[-0.06204566  3.42917275]
   [-1.14547718  3.31167102]]]]
scaled_clip_a
[[[[ -2 -40]
   [ 40 -40]]

  [[-40 -32]
   [ 10   0]]]

 [[[ -2   2]
   [ 21 -40]]

  [[ 40  40]
   [  1 -40]]]

 [[[-40  40]
   [ -3  -6]]

  [[  0  34]
   [-11  33]]]]
quantized_a
[[[[0 4]
   [2 4]]

  [[4 4]
   [1 0]]]

 [[[0 0]
   [1 4]]

  [[2 2]
   [0 4]]]

 [[[4 2]
   [0 3]]

  [[0 2]
   [3 2]]]]
```
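The lookup above returns bucket indices, while the question asks for the closest value in a codebook `c`. As a hedged illustration of that rule on scalars, here is a pure-Python sketch using binary search over a sorted codebook. The codebook values and the name `nearest_codeword` are made up for this example and do not come from the original post. In TensorFlow the same rule is often expressed with broadcasting (for example `tf.argmin` over `tf.abs` of the pairwise differences, followed by `tf.gather`), which is not shown or tested here.

```python
from bisect import bisect_left


def nearest_codeword(x, codebook):
    """Return the entry of the ascending-sorted list `codebook` closest to x."""
    i = bisect_left(codebook, x)
    if i == 0:
        return codebook[0]       # x is below the smallest codeword
    if i == len(codebook):
        return codebook[-1]      # x is above the largest codeword
    lo, hi = codebook[i - 1], codebook[i]
    # On an exact tie, prefer the lower codeword.
    return lo if x - lo <= hi - x else hi


# Hypothetical non-uniform codebook, sorted ascending (assumption for this sketch).
c = [-3.0, -1.0, 0.0, 1.5, 3.5]
samples = [-0.27, -5.56, 5.04, 1.02, 2.5]
print([nearest_codeword(x, c) for x in samples])  # [0.0, -3.0, 3.5, 1.5, 1.5]
```

Unlike the scaled hash table, this rule needs no clipping or scale factor: values outside the codebook range simply map to the first or last codeword.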

greeness
