I recently learned in class that Principal Component Analysis (PCA) aims to approximate a matrix X by the product of two matrices, ZW. If X is an n x d matrix, then Z is an n x k matrix and W is a k x d matrix. The objective function PCA minimizes is the following, where w^j is the j-th column of W and z_i is the i-th row of Z:

$$f(W,Z) = \sum_{i=1}^{n}\sum_{j=1}^{d} \left(\langle w^j, z_i\rangle - x_{ij}\right)^2$$

With this objective, it is easy to compute the gradient of f with respect to W and Z.
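For reference, writing the residual matrix as R = ZW - X and assuming the squared-error objective carries no 1/2 factor (with a 1/2 factor the leading 2 drops out), both gradients have a compact matrix form:

$$\nabla_W f = 2\,Z^\top (ZW - X) \quad (k \times d), \qquad \nabla_Z f = 2\,(ZW - X)\,W^\top \quad (n \times k)$$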

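However, instead of the squared L2 norm above, I have to use the L1 norm, as in the following objective, and use gradient descent to find the Z and W that minimize it:

$$f(W,Z) = \sum_{i=1}^{n}\sum_{j=1}^{d} \left|\langle w^j, z_i\rangle - x_{ij}\right|$$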

To make it differentiable, I approximated the absolute value function as follows (ε is a very small value):

$$|r| \approx \sqrt{r^2 + \epsilon}$$
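A small NumPy sketch of this smoothing, for illustration only. The helper names `smooth_abs` and `smooth_abs_grad` are hypothetical, and the default epsilon of 1e-4 is assumed to match the 0.0001 used in the code in this question. The derivative r / sqrt(r^2 + ε) is the factor that appears in the gradient: it stays close to sign(r) away from 0 and, unlike the derivative of |r|, is defined (equal to 0) at r = 0.

```python
import numpy as np

def smooth_abs(r, epsilon=1e-4):
    # Differentiable approximation of |r|: sqrt(r^2 + epsilon)
    return np.sqrt(r * r + epsilon)

def smooth_abs_grad(r, epsilon=1e-4):
    # Derivative of sqrt(r^2 + epsilon) with respect to r: r / sqrt(r^2 + epsilon).
    # Approaches sign(r) as epsilon -> 0 and equals 0 at r = 0.
    return r / np.sqrt(r * r + epsilon)

r = np.array([-2.0, 0.0, 3.0])
print(smooth_abs(r))       # close to [2, 0.01, 3]
print(smooth_abs_grad(r))  # close to [-1, 0, 1]
```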

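However, when I computed the gradient matrix of this objective with respect to W, I derived the following expression (c = 1, ..., k indexes the rows of W):

$$\frac{\partial f}{\partial W_{cj}} = \sum_{i=1}^{n} \frac{\left(\langle w^j, z_i\rangle - x_{ij}\right) z_{ic}}{\sqrt{\left(\langle w^j, z_i\rangle - x_{ij}\right)^2 + \epsilon}}$$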
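The sum over the n rows in this elementwise gradient has the same shape as the inner loop of a matrix product. As a worked restatement (G is an auxiliary n x d matrix introduced here for illustration), collect the residuals r_{ij} = ⟨w^j, z_i⟩ - x_{ij} into R = ZW - X and apply the smoothed derivative elementwise:

$$G_{ij} = \frac{r_{ij}}{\sqrt{r_{ij}^2 + \epsilon}}, \qquad \frac{\partial f}{\partial W_{cj}} = \sum_{i=1}^{n} z_{ic}\,G_{ij} = \left(Z^\top G\right)_{cj}, \qquad \nabla_Z f = G\,W^\top$$

The square root only ever acts on individual residuals, so it does not prevent the matrix form: it is just an elementwise operation applied to R before the multiplication.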

I tried computing the gradient matrix element by element, but it takes too long when X is large:

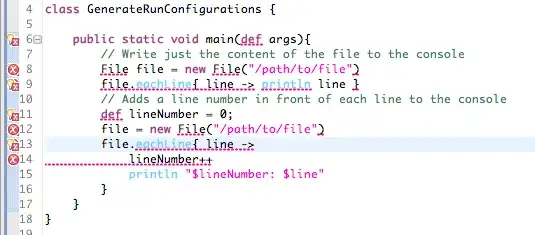

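```python
import numpy as np

# X is n x d, Z is n x k, W is k x d (assumed already defined)
g = np.zeros((k, d))

for i in range(k):              # row of W (component)
    for j in range(d):          # column of W (feature)
        for k2 in range(n):     # row of Z and X (example)
            # residual <w^j, z_k2> - x_{k2, j}
            temp = np.dot(W[:, j], Z[k2, :]) - X[k2, j]
            # derivative of sqrt(temp^2 + epsilon) times z_{k2, i}, with epsilon = 0.0001
            g[i, j] += (temp * Z[k2, i]) / np.sqrt(temp * temp + 0.0001)

g = g.transpose()  # note: W is k x d, so this yields a d x k array
```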

Is there any way to make this code faster? I feel like the equation could be simplified a lot, but with the square root inside I am completely lost. Any help would be appreciated!
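One possible direction, sketched under assumptions: with X as n x d, Z as n x k, W as k x d and the smoothed objective sum_ij sqrt((ZW - X)_ij^2 + ε), the triple loop collapses into a residual matrix, one elementwise operation and one matrix product per gradient. The function names `l1_pca_gradients` and `grad_W_loop` are hypothetical; `grad_W_loop` reproduces the question's loop (without the final transpose) only so the two can be compared.

```python
import numpy as np

def l1_pca_gradients(X, Z, W, epsilon=1e-4):
    """Gradients of f(Z, W) = sum_ij sqrt((ZW - X)_ij^2 + epsilon).

    X is n x d, Z is n x k, W is k x d.
    Returns (grad_W, grad_Z) with the same shapes as W and Z.
    """
    R = Z @ W - X                      # n x d residuals: R[i, j] = <w^j, z_i> - x_ij
    G = R / np.sqrt(R * R + epsilon)   # elementwise derivative of the smoothed |r|
    grad_W = Z.T @ G                   # k x d: grad_W[c, j] = sum_i Z[i, c] * G[i, j]
    grad_Z = G @ W.T                   # n x k: grad_Z[i, c] = sum_j G[i, j] * W[c, j]
    return grad_W, grad_Z


def grad_W_loop(X, Z, W, epsilon=1e-4):
    # The triple loop from the question, kept for comparison (returns k x d, no transpose).
    n, d = X.shape
    k = Z.shape[1]
    g = np.zeros((k, d))
    for i in range(k):
        for j in range(d):
            for k2 in range(n):
                temp = np.dot(W[:, j], Z[k2, :]) - X[k2, j]
                g[i, j] += temp * Z[k2, i] / np.sqrt(temp * temp + epsilon)
    return g


rng = np.random.default_rng(0)
n, d, k = 50, 8, 3
X = rng.standard_normal((n, d))
Z = rng.standard_normal((n, k))
W = rng.standard_normal((k, d))

grad_W, grad_Z = l1_pca_gradients(X, Z, W)
print(np.allclose(grad_W, grad_W_loop(X, Z, W)))  # expected: True
```

Each gradient is now a couple of matrix multiplications plus elementwise work, roughly O(nkd) operations executed inside NumPy rather than Python loops. If the surrounding optimizer expects the d x k layout produced by the final `g.transpose()` in the question, apply `.T` to `grad_W`.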