While reading other people's questions and answers under the [python] tag, I came across an impressive piece of work by Banach Tarski, "TensorFlow Object Detection API Weird Behavior". I wanted to reproduce what he did to understand the TensorFlow Object Detection API more deeply. I followed his steps one by one and used the same Grocery Dataset. I took the faster_rcnn_resnet101 model with default parameters and batch_size = 1.

The main difference is that I did not use Shelf_Images, which come with annotations and bounding boxes for each class, but Product_Images, which contain 10 folders (one per class), each holding full-size images of cigarette packs without any background. The average size of Product_Images is 600*1200, whereas Shelf_Images are 3900*2100. So I thought: why can't I take these full images, derive bounding boxes from them, train on that, and get a successful result? Also, I didn't need to crop the images manually as Banach Tarski did, because 600*1200 is a good fit for the faster_rcnn_resnet101 model and its default input image parameters.
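To make the size comparison concrete, here is a rough sketch of how an aspect-preserving resizer would scale both image types. It assumes the model config uses a keep-aspect-ratio resizer with min_dimension = 600 and max_dimension = 1024; those values are my assumption about the default sample config, not something I verified in the pipeline file, and `resized_size` is a hypothetical helper, not part of the API.

```python
def resized_size(height, width, min_dimension=600, max_dimension=1024):
    """Approximate the output size of an aspect-preserving resizer.

    Assumed behaviour: scale the short side to min_dimension, unless that
    would push the long side past max_dimension; in that case scale the
    long side to max_dimension instead.
    """
    scale_to_min = min_dimension / min(height, width)
    scale_to_max = max_dimension / max(height, width)

    if max(height, width) * scale_to_min > max_dimension:
        scale = scale_to_max
    else:
        scale = scale_to_min

    return int(round(height * scale)), int(round(width * scale))


# Product image (600 wide, 1200 high) and shelf image (3900 wide, 2100 high)
product = resized_size(height=1200, width=600)
shelf = resized_size(height=2100, width=3900)
print(product, shelf)
```

Under these assumptions a product image is shrunk only slightly, while a shelf image is scaled down to roughly a quarter of its original side length, so individual packs on a shelf end up far smaller than the packs seen during training.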

An example image from the Pall Mall class:

It seemed simple because I could create the bboxes directly from each image's contours (its outer borders). I only needed to create an annotation for each image and build tf_records from them for training. I used the following formula to create bboxes from the image contours:

    x_min = str(1)
    y_min = str(1)
    x_max = str(img.width - 10)
    y_max = str(img.height - 10)
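The same formula wrapped in a small function, as a minimal sketch. `full_image_bbox` and its `margin` parameter are hypothetical names introduced here for illustration; the constants 1 and 10 are the ones from the formula above.

```python
def full_image_bbox(width, height, margin=10):
    """Return a bbox covering almost the whole image, in pixel coordinates.

    The box starts at pixel 1 on the top-left and stops `margin` pixels
    before the right and bottom edges, mirroring the formula above.
    """
    return {
        "xmin": 1,
        "ymin": 1,
        "xmax": width - margin,
        "ymax": height - margin,
    }


# Image size taken from the XML annotation example (811 x 1274)
print(full_image_bbox(811, 1274))
```

With Pillow, `width` and `height` would come from `img.width` and `img.height` after `Image.open(path)`.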

Example of an XML annotation:

    <annotation>
      <folder>VOC2007</folder>
      <filename>B1_N1.jpg</filename>
      <path>/.../grocery-detection/data/images/1/B1_N1.jpg</path>
      <source>
        <database>The VOC2007 Database</database>
        <annotation>PASCAL VOC2007</annotation>
        <image>flickr</image>
        <flickrid>192073981</flickrid>
      </source>
      <owner>
        <flickrid>tobeng</flickrid>
        <name>?</name>
      </owner>
      <size>
        <width>811</width>
        <height>1274</height>
        <depth>3</depth>
      </size>
      <segmented>0</segmented>
      <object>
        <name>1</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <difficult>0</difficult>
        <bndbox>
          <xmin>1</xmin>
          <ymin>1</ymin>
          <xmax>801</xmax>
          <ymax>1264</ymax>
        </bndbox>
      </object>
    </annotation>
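An annotation like the one above can be generated with the standard library alone. This is a minimal sketch, not my exact script: `build_voc_annotation` is a hypothetical helper, and it writes only a subset of the fields shown above (no `path`, `source`, or `owner`).

```python
import xml.etree.ElementTree as ET


def build_voc_annotation(filename, width, height, label, bbox, depth=3):
    """Return a minimal VOC-style annotation as an XML string."""
    root = ET.Element("annotation")
    ET.SubElement(root, "folder").text = "VOC2007"
    ET.SubElement(root, "filename").text = filename

    # Image dimensions in pixels
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", depth)):
        ET.SubElement(size, tag).text = str(value)

    ET.SubElement(root, "segmented").text = "0"

    # One object per image: the whole product photo
    obj = ET.SubElement(root, "object")
    ET.SubElement(obj, "name").text = str(label)
    ET.SubElement(obj, "pose").text = "Unspecified"
    ET.SubElement(obj, "truncated").text = "0"
    ET.SubElement(obj, "difficult").text = "0"

    bndbox = ET.SubElement(obj, "bndbox")
    for tag in ("xmin", "ymin", "xmax", "ymax"):
        ET.SubElement(bndbox, tag).text = str(bbox[tag])

    return ET.tostring(root, encoding="unicode")


xml_text = build_voc_annotation(
    "B1_N1.jpg", 811, 1274, label=1,
    bbox={"xmin": 1, "ymin": 1, "xmax": 801, "ymax": 1264},
)
print(xml_text)
```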

After the script iterated over all the image folders, I had an annotation for each image, similar to the one shown above, in VOC2007 XML format. Then I created the tf_records by iterating over each annotation, handling it the same way as in TensorFlow's pet_running example. Everything seemed fine and ready for training on an AWS Nvidia Tesla K80.

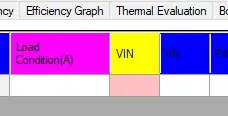

Example of the feature_dict used for creating the tf_records:

    feature_dict = {
        'image/height': dataset_util.int64_feature(height),
        'image/width': dataset_util.int64_feature(width),
        'image/filename': dataset_util.bytes_feature(
            data['filename'].encode('utf8')),
        'image/source_id': dataset_util.bytes_feature(
            data['filename'].encode('utf8')),
        'image/key/sha256': dataset_util.bytes_feature(key.encode('utf8')),
        'image/encoded': dataset_util.bytes_feature(encoded_jpg),
        'image/format': dataset_util.bytes_feature('jpeg'.encode('utf8')),
        'image/object/bbox/xmin': dataset_util.float_list_feature(xmins),
        'image/object/bbox/xmax': dataset_util.float_list_feature(xmaxs),
        'image/object/bbox/ymin': dataset_util.float_list_feature(ymins),
        'image/object/bbox/ymax': dataset_util.float_list_feature(ymaxs),
        'image/object/class/text': dataset_util.bytes_list_feature(classes_text),
        'image/object/class/label': dataset_util.int64_list_feature(classes),
        'image/object/difficult': dataset_util.int64_list_feature(difficult_obj),
        'image/object/truncated': dataset_util.int64_list_feature(truncated),
        'image/object/view': dataset_util.bytes_list_feature(poses),
    }
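For context, the bbox lists in this dict are float features, and the Object Detection API expects them as coordinates normalized to [0, 1] rather than raw pixels. A minimal sketch of that conversion follows; `normalize_boxes` is a hypothetical helper, and the input format (a list of dicts with pixel coordinates) is an assumption made for illustration.

```python
def normalize_boxes(objects, width, height):
    """Convert pixel bboxes to the normalized lists used in feature_dict.

    `objects` is a list of dicts with pixel 'xmin', 'ymin', 'xmax', 'ymax'.
    Returns (xmins, ymins, xmaxs, ymaxs), each a list of floats in [0, 1].
    """
    xmins, ymins, xmaxs, ymaxs = [], [], [], []
    for obj in objects:
        xmins.append(float(obj["xmin"]) / width)
        ymins.append(float(obj["ymin"]) / height)
        xmaxs.append(float(obj["xmax"]) / width)
        ymaxs.append(float(obj["ymax"]) / height)
    return xmins, ymins, xmaxs, ymaxs


# Values from the XML annotation example (811 x 1274 image)
boxes = [{"xmin": 1, "ymin": 1, "xmax": 801, "ymax": 1264}]
xmins, ymins, xmaxs, ymaxs = normalize_boxes(boxes, width=811, height=1274)
print(xmins, ymins, xmaxs, ymaxs)
```

With the full-image formula every training box covers nearly the whole frame, so all normalized values sit very close to 0 or 1.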

After 12458 steps at 1 image per step, the model converged to a local minimum. I saved all the checkpoints and the graph. Next I exported an inference graph from them and ran object_detection_tutorial.py to see how it works on my test images. But I'm not happy with the result at all. P.S. The last image is 1024 × 760 and is a crop of the top part of the third image, which is 3264 × 2448. I tried cigarette images of different sizes so as not to accidentally lose image details when the model rescales them.

Output: classified images with predicted bboxes