Important: The raw Kinect RGB image has lens distortion. Remove it (undistort the image) first.
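The answer does not say which distortion model to use. Below is a minimal sketch assuming the common Brown-Conrady model (radial k1, k2, k3 and tangential p1, p2), the same parameterization OpenCV uses. The helper names `distort_normalized` and `undistort_pixel` are hypothetical, and the real coefficients for your Kinect must come from calibration.

```python
# Minimal sketch, assuming the Brown-Conrady lens model (radial k1, k2, k3;
# tangential p1, p2). Coefficients are NOT Kinect values; get yours by calibration.

def distort_normalized(x, y, k1, k2, p1, p2, k3=0.0):
    """Apply lens distortion to an ideal normalized point (x, y) = (X/Z, Y/Z)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd


def undistort_pixel(u, v, fx, fy, cx, cy, k1, k2, p1, p2, k3=0.0, iterations=20):
    """Map a distorted pixel (u, v) to its undistorted pixel position.

    The model has no closed-form inverse, so this uses fixed-point
    iteration, which converges for moderate distortion.
    """
    # Pixel -> distorted normalized coordinates.
    xd = (u - cx) / fx
    yd = (v - cy) / fy
    x, y = xd, yd  # initial guess: no distortion
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        # The true undistorted point satisfies xd = x * radial + dx (same for y).
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    # Undistorted normalized coordinates -> pixel.
    return fx * x + cx, fy * y + cy
```

To undistort a whole image, the usual approach goes the other way: for every output pixel, apply `distort_normalized` to find where to sample in the raw image. OpenCV's `cv2.undistort` does this for you given the camera matrix and distortion coefficients.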

Short answer

The "transformation matrix" you are looking for is called the projection matrix. The intrinsic parameters of my Kinect RGB camera are:

rgb.cx:959.5

rgb.cy:539.5

rgb.fx:1081.37

rgb.fy:1081.37
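These four values are usually packed into the 3x3 intrinsic matrix K. Taking the extrinsics as (I|0), as explained in the long answer, the 3x4 projection matrix is simply K with a zero column appended. A small sketch (the names `K` and `P` are just illustrative):

```python
# Intrinsic matrix K built from the calibration values above.
FX, FY = 1081.37, 1081.37
CX, CY = 959.5, 539.5

K = [
    [FX, 0.0, CX],
    [0.0, FY, CY],
    [0.0, 0.0, 1.0],
]

# Projection matrix P = K (I|0): with identity rotation and zero
# translation, this is K with a zero fourth column appended.
P = [row + [0.0] for row in K]
```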

Long answer

First, understand how the color image is generated in the Kinect.

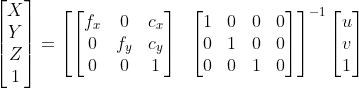

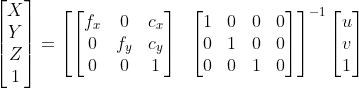
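For reference, the standard pinhole projection (presumably what the images above illustrate) can be written as follows, using the symbols defined below:

```latex
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
=
\begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
\left( R \mid t \right)
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
\quad\xrightarrow{\;(R \mid t) = (I \mid 0)\;}\quad
s = Z, \qquad
u = f_x \frac{X}{Z} + c_x, \qquad
v = f_y \frac{Y}{Z} + c_y
```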

X, Y, Z: coordinates of the given point in a coordinate space with the Kinect sensor at the origin, also known as camera space. Note that camera space is 3D.

u, v: coordinates of the corresponding color pixel in color space. Note that color space is 2D.

fx, fy: focal lengths (in pixels)

cx, cy: principal point (you can treat the principal point of the Kinect RGB camera as the center of the image)

(R|t): extrinsic camera matrix. For the Kinect you can take it as (I|0), where I is the identity matrix.

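s: scale factor of the homogeneous coordinates. With (R|t) = (I|0) it equals the depth Z of the point, so dividing the result by Z normalizes the third component to 1.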
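A minimal sketch of the model with (R|t) = (I|0), assuming the point is in camera space with Z > 0 and the image has already been undistorted. `project` follows the definitions above; `back_project` (pixel plus known depth back to camera space) is an extra inverse added here for illustration. Both names are hypothetical.

```python
# Pinhole model with (R|t) = (I|0), using the calibration values above.
FX, FY = 1081.37, 1081.37
CX, CY = 959.5, 539.5


def project(X, Y, Z, fx=FX, fy=FY, cx=CX, cy=CY):
    """Camera-space point (X, Y, Z) -> color pixel (u, v)."""
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    # Here s = Z, so dividing by Z normalizes the homogeneous coordinate to 1.
    u = fx * X / Z + cx
    v = fy * Y / Z + cy
    return u, v


def back_project(u, v, Z, fx=FX, fy=FY, cx=CX, cy=CY):
    """Color pixel (u, v) with known depth Z -> camera-space point (X, Y, Z)."""
    X = (u - cx) * Z / fx
    Y = (v - cy) * Z / fy
    return X, Y, Z


print(project(0.0, 0.0, 2.0))  # (959.5, 539.5): the optical axis hits the principal point
```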

To get the most accurate values for fx, fy, cx and cy, calibrate your Kinect's RGB camera using a chessboard pattern.

The fx, fy, cx, cy values above come from my own calibration of my Kinect. These values differ only slightly from one Kinect to another.

More info and implementation

All Kinect camera matrices

Distort

Registration

I implemented the registration process in CUDA because the CPU is not fast enough to process that much data (1920 x 1080 x 30 matrix calculations per second) in real time.
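For scale, the workload quoted above works out as follows. This is just arithmetic on the stated resolution and frame rate; the "single thread" time budget is an illustrative framing, not a measurement.

```python
# Back-of-the-envelope workload for transforming every color pixel at 30 fps.
WIDTH, HEIGHT, FPS = 1920, 1080, 30

pixels_per_frame = WIDTH * HEIGHT            # 2,073,600
pixels_per_second = pixels_per_frame * FPS   # 62,208,000
budget_ns = 1e9 / pixels_per_second          # time available per pixel on one thread

print(f"{pixels_per_second:,} pixel transforms per second")
print(f"{budget_ns:.1f} ns per pixel on a single thread")
```

This prints `62,208,000 pixel transforms per second` and `16.1 ns per pixel on a single thread`. Each pixel's transform is independent of the others, which is why the work maps well onto one GPU thread per pixel.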