I've run into an unexpected problem when multiprocessing with NumPy arrays of different data types. I first run a multiprocessing job on NumPy arrays of type int64, then run it again on arrays of type float64. The int64 case runs as expected, whereas the float64 case uses all available processors (more than I've allocated) and is slower than using a single core.

The following example reproduces the problem:

    from multiprocessing import Pool
    from timeit import timeit

    import numpy as np


    def array_multiplication(arr):
        # Multiply the array by itself three times (arr to the 4th power).
        new_arr = arr.copy()
        for _ in range(3):
            new_arr = np.dot(new_arr, arr)
        return new_arr


    if __name__ == '__main__':
        # Example integer arrays.
        test_arr_1 = np.random.randint(100, size=(100, 100))
        test_arr_2 = np.random.randint(100, size=(100, 100))
        test_arr_3 = np.random.randint(100, size=(100, 100))
        test_arr_4 = np.random.randint(100, size=(100, 100))

        # Parameter array.
        parameter_arr = [test_arr_1, test_arr_2, test_arr_3, test_arr_4]

        # One worker process per test array.
        pool = Pool(processes=len(parameter_arr))

        print('Multiprocessing time:')
        print(timeit(lambda: pool.map(array_multiplication, parameter_arr),
                     number=1000))

        print('Series time:')
        print(timeit(lambda: list(map(array_multiplication, parameter_arr)),
                     number=1000))

This yields

    Multiprocessing time:
    4.1271785919998365
    Series time:
    8.102764352000122

which is an expected speed-up.

However, replacing test_arr_n with

    test_arr_1 = np.random.normal(50, 30, size=(100, 100))
    test_arr_2 = np.random.normal(50, 30, size=(100, 100))
    test_arr_3 = np.random.normal(50, 30, size=(100, 100))
    test_arr_4 = np.random.normal(50, 30, size=(100, 100))

results in

    Multiprocessing time:
    2.379720258999896
    Series time:
    0.40820308100001057

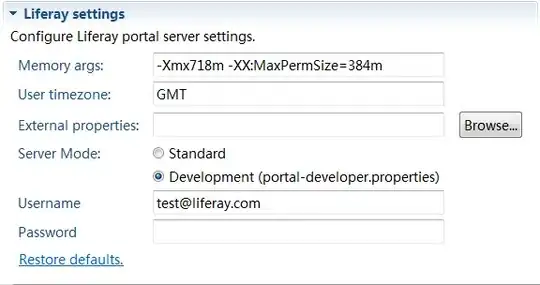

in addition to using up all available processors, where I've specified 4. Below are screen grabs of the processor usage when running the first case (int64) and second case (float64).

Above is the int64 case, where four processors are given tasks followed by one processor computing the task in series.

However, in the float64 case, all processors are being used, even though the specified number is the number of test_arr's - that is, 4.
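To double-check the premise of the comparison, the following minimal sketch reports the dtype each generator produces and counts how many distinct worker processes a 4-process pool actually uses. The helpers `array_dtypes`, `worker_pid`, and `distinct_worker_count` are hypothetical names introduced only for this illustration, and the integer dtype is platform-dependent (int64 on the Linux setup described here).

```python
import os
from multiprocessing import Pool

import numpy as np


def array_dtypes():
    """Return the dtypes produced by the two generators used in the question."""
    int_arr = np.random.randint(100, size=(100, 100))
    float_arr = np.random.normal(50, 30, size=(100, 100))
    return int_arr.dtype, float_arr.dtype


def worker_pid(_):
    """Return the PID of the process that handled this task (illustrative helper)."""
    return os.getpid()


def distinct_worker_count(n_processes, n_tasks=64):
    """Count how many distinct worker processes served n_tasks small tasks."""
    with Pool(processes=n_processes) as pool:
        pids = pool.map(worker_pid, range(n_tasks))
    return len(set(pids))


if __name__ == '__main__':
    int_dtype, float_dtype = array_dtypes()
    print('Integer dtype:', int_dtype)
    print('Float dtype:', float_dtype)
    print('Logical CPUs:', os.cpu_count())
    # The pool can never use more than the requested number of processes.
    print('Distinct pool workers (at most 4):', distinct_worker_count(4))
```

If the worker count never exceeds 4 while the system monitor still shows every core busy, the extra load must come from threads inside those worker processes rather than from additional pool processes.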

I have tried this for a range of array sizes and numbers of iterations in the for loop in array_multiplication, and the behaviour is the same. I'm running Ubuntu 16.04 LTS with 62.8 GB of memory and an i7-6800K 3.40GHz CPU.
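One hypothesis worth testing, stated here as an assumption rather than a confirmed diagnosis, is that the extra processor usage comes from the linear algebra backend behind `np.dot` running its own threads for floating-point input. The sketch below caps those thread counts through environment variables before NumPy is imported. Which variable takes effect depends on the backend NumPy was built against, so the three common ones are all set.

```python
import os

# Assumption: the backend honours at least one of these variables.
# They must be set before NumPy is imported for the first time.
for var in ('OMP_NUM_THREADS', 'OPENBLAS_NUM_THREADS', 'MKL_NUM_THREADS'):
    os.environ[var] = '1'

from timeit import timeit

import numpy as np


def array_multiplication(arr):
    # Same function as in the question: arr raised to the 4th power.
    new_arr = arr.copy()
    for _ in range(3):
        new_arr = np.dot(new_arr, arr)
    return new_arr


if __name__ == '__main__':
    float_arr = np.random.normal(50, 30, size=(100, 100))
    print('Series time with capped threads:')
    print(timeit(lambda: array_multiplication(float_arr), number=1000))
```

If the float64 multiprocessing run then stays on four processors, that would point to backend threading as the source of the oversubscription. If nothing changes, the cause lies elsewhere.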

Why is this happening? Thanks in advance.