I need to implement a batch job that splits an XML file, processes the parts, and aggregates them afterwards. The aggregate then needs to be processed further.

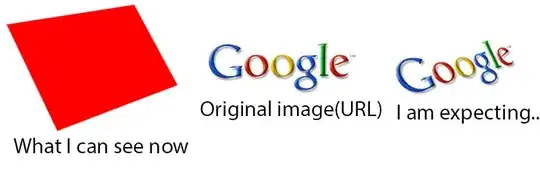

Processing the account parts is really expensive. As the image shows, the processing step runs once for each account of a person (the number of accounts per person varies).

Sample input file:

    <?xml version="1.0" encoding="UTF-8"?>
    <Persons>
        <Person>
            <Name>Max Mustermann</Name>
            <Accounts>
                <Account>maxmustermann</Account>
                <Account>esel</Account>
                <Account>affe</Account>
            </Accounts>
        </Person>
        <Person>
            <Name>Petra Pux</Name>
            <Accounts>
                <Account>petty</Account>
                <Account>petra</Account>
            </Accounts>
        </Person>
        <Person>
            <Name>Einsiedler Bob</Name>
            <Accounts>
                <Account>bob</Account>
            </Accounts>
        </Person>
    </Persons>

For each Account of each Person, invoke a REST service, for instance:

GET /account/{person}/{account}/logins

Then, for each Person, invoke a REST service, passing the aggregated logins XML:

POST /analysis/logins/{person}

    <Person>
        <Name>Max Mustermann</Name>
        <Accounts>
            <Account>
                <LoginCount>22</LoginCount>
                <Name>maxmustermann</Name>
            </Account>
            <Account>
                <LoginCount>42</LoginCount>
                <Name>esel</Name>
            </Account>
            <Account>
                <LoginCount>13</LoginCount>
                <Name>affe</Name>
            </Account>
        </Accounts>
    </Person>

I don't have any influence on the APIs, so I have to send the results bundled per person.

How could I implement the parallelization, and how should I structure my Spring Batch application accordingly?

I've found some starting points, but none of them was really satisfying.

Should I process the account data in one step that returns one item per account, then poll for those items in the next step and aggregate them there? Or should I implement the parallelization inside the account step, aggregate within that step, and add a following step for further processing?

Problem with the first approach: how would I know that all items of a person have arrived, so that aggregation can start?
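One idea for this, as a rough sketch only: the number of accounts per person is known from the input XML at split time, so each account item could carry that expected count, and the aggregating side could release a person once that many results have arrived. `PersonCompletionTracker` and its `add` method are hypothetical names of mine, not Spring Batch API, and the sketch keeps all pending state in memory.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical helper (not part of Spring Batch): collects per-account results
// and reports a person as complete once the expected number has arrived.
final class PersonCompletionTracker {

    // person name -> (account name -> login count) for persons still incomplete
    private final Map<String, Map<String, Integer>> pending = new HashMap<>();

    // Returns the aggregated logins when this result completes the person,
    // otherwise an empty Optional. Synchronized because results may arrive
    // from several worker threads.
    synchronized Optional<Map<String, Integer>> add(
            String person, int expectedAccounts, String account, int loginCount) {
        Map<String, Integer> logins =
                pending.computeIfAbsent(person, p -> new LinkedHashMap<>());
        logins.put(account, loginCount);
        if (logins.size() < expectedAccounts) {
            return Optional.empty();
        }
        pending.remove(person); // person is complete, free the state
        return Optional.of(logins);
    }
}
```

This assumes account names are unique within a person and that no result is lost; a failed account call would leave the person pending forever unless some timeout or error handling is added.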

Problem with (or rather question about) the second approach: is it common to implement parallelization manually (for instance with Java Futures) instead of leaving it to Spring Batch?
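To make the second approach concrete, here is a minimal sketch of what I mean by manual parallelization: one Person goes in, one call per account is started on a thread pool, and the results are joined into a single aggregate. Such logic could sit inside an `ItemProcessor` of the account step. `PersonLoginAggregator` is a hypothetical class, and the `loginCountClient` function is a stand-in for the `GET /account/{person}/{account}/logins` call, not a real client.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.function.BiFunction;

// Hypothetical helper: fans out one call per account and joins the results,
// so that one input Person yields exactly one aggregated result.
final class PersonLoginAggregator {

    private final ExecutorService pool;
    // Assumed stand-in for GET /account/{person}/{account}/logins
    private final BiFunction<String, String, Integer> loginCountClient;

    PersonLoginAggregator(ExecutorService pool,
                          BiFunction<String, String, Integer> loginCountClient) {
        this.pool = pool;
        this.loginCountClient = loginCountClient;
    }

    // Returns account name -> login count, in the order of the input accounts.
    Map<String, Integer> aggregate(String person, List<String> accounts) {
        // Start all calls before waiting on any of them.
        List<CompletableFuture<Integer>> futures = new ArrayList<>();
        for (String account : accounts) {
            futures.add(CompletableFuture.supplyAsync(
                    () -> loginCountClient.apply(person, account), pool));
        }
        // join() blocks until the call finishes and rethrows failures
        // wrapped in a CompletionException.
        Map<String, Integer> result = new LinkedHashMap<>();
        for (int i = 0; i < accounts.size(); i++) {
            result.put(accounts.get(i), futures.get(i).join());
        }
        return result;
    }
}
```

With this shape the "have all items arrived?" question disappears, because the join happens inside the step; the cost is that threading, error handling, and retries are managed by hand rather than by the framework.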

What is the idiomatic Spring Batch way to do this?

Thanks, Thomas