I'm extracting still frames from a video using this basic command:

    ffmpeg -i video.MXF -vf fps=1 output_%04d.png

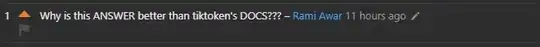

In some videos, the frames extracted while the camera was moving look much blurrier than the same moments do during playback (see example below). Frames from when the camera was not moving look sharper, closer to how the video looks during playback.

The video specs, according to ffprobe, are: mpeg2video, yuv422p, 1280x720.

Is this inherent to the video encoding or structure? The video looks sharp in motion, but as soon as I pause it in VLC, the frame goes from sharp to blurred.

Are there any additions to my FFmpeg command that could result in sharper images? I tried adding a yadif filter, but it made no difference (the video isn't interlaced anyway).
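For anyone who wants to double-check the interlacing point, here is a minimal sketch of one way to do it. The function name `check_interlace` and the 500-frame sample size are arbitrary choices for illustration; `field_order` is the flag stored in the stream, while the `idet` filter guesses from the picture content itself, so the two can disagree.

```shell
# Hypothetical helper: report what the stream claims and what idet detects.
check_interlace() {
    # Stream-level flag, e.g. field_order=progressive, tt, bb, or unknown
    ffprobe -v error -select_streams v:0 \
        -show_entries stream=field_order \
        -of default=noprint_wrappers=1 "$1"

    # Content-based detection on the first 500 frames; idet logs counts of
    # TFF, BFF, progressive, and undetermined frames when the run ends
    ffmpeg -hide_banner -i "$1" -map 0:v:0 -vf idet \
        -frames:v 500 -an -f null -
}
```

It would be called as `check_interlace video.MXF`. If nearly all frames are reported as progressive, deinterlacing filters such as yadif would not be expected to change the result.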

Unfortunately, I can't post a video sample online, but below is an example of a sharper image and a blurry image. Both look in focus during video playback and are about a second apart in the video (that's the same orange sea star on the left side).