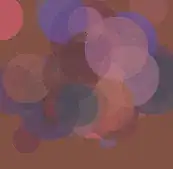

I have the histogram of my input data (in black) given in the following graph:

I'm trying to fit a Gamma distribution, not to the whole data set, but only to the first peak of the histogram (the first mode). The green curve in the graph above is what I get when I fit the Gamma distribution to all the samples with the following Python code, which uses `scipy.stats.gamma`:

```python
import matplotlib.pyplot as plt
import numpy as np
from geotiff.io import IO
from scipy.stats import gamma

# input_file is the path to the input image (defined elsewhere)
img = IO.read(input_file)

# calculate dB positive image
img_db = 10 * np.log10(img)
img_db_pos = img_db + abs(np.min(img_db))
data = img_db_pos.flatten() + 1

# data histogram (density=True replaces the removed normed=True)
n, bins, patches = plt.hist(data, 1000, density=True)

# slice histogram here

# estimation of the parameters of the gamma distribution (location fixed at 0)
fit_alpha, fit_loc, fit_beta = gamma.fit(data, floc=0)
x = np.linspace(0, 100)
y = gamma.pdf(x, fit_alpha, fit_loc, fit_beta)
print('(alpha, beta): (%f, %f)' % (fit_alpha, fit_beta))

# plot estimated model
plt.plot(x, y, linewidth=2, color='g')
plt.show()
```

How can I restrict the fitting only to the interesting subset of this data?
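As a self-contained illustration of what the `gamma.fit(data, floc=0)` call above does, here is a minimal sketch on synthetic data (the true shape and scale values are arbitrary assumptions, not taken from the image). With `floc=0`, scipy keeps the location fixed at 0 and estimates the shape `a` (alpha here) and the `scale` (beta here) by maximum likelihood.

```python
import numpy as np
from scipy.stats import gamma

# Synthetic gamma samples; true_alpha and true_beta are arbitrary illustration values
rng = np.random.default_rng(0)
true_alpha, true_beta = 5.0, 2.0
samples = rng.gamma(true_alpha, true_beta, size=50_000)

# Fit shape (alpha) and scale (beta) with the location fixed at 0
fit_alpha, fit_loc, fit_beta = gamma.fit(samples, floc=0)
print('(alpha, beta): (%f, %f)' % (fit_alpha, fit_beta))
```

Because the location is fixed, `fit_loc` comes back as 0, and at the maximum-likelihood solution the fitted mean `fit_alpha * fit_beta` equals the sample mean (this follows from setting the derivative of the log-likelihood with respect to the scale to zero).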

Update 1 (slicing):

I sliced the input data, keeping only the values below the position of the histogram's maximum (its peak), but the results were not really convincing.

This was achieved by inserting the following code below the `# slice histogram here` comment in the previous code:

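```python
# left edge of the tallest histogram bin
max_data = bins[np.argmax(n)]
# keep only the samples below the histogram peak
data = data[data < max_data]
```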
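One likely reason the sliced fit is not convincing: `gamma.fit` still assumes an untruncated gamma distribution on [0, ∞), so fitting it to samples cut off at the histogram peak pulls the fitted mean (`alpha * beta`) down to the mean of the truncated samples. The sketch below repeats the slicing step on synthetic gamma data (the parameters and sample size are arbitrary assumptions) and compares a fit on all samples with a fit on the sliced ones.

```python
import numpy as np
from scipy.stats import gamma

# Synthetic data; the true parameters are arbitrary illustration values
rng = np.random.default_rng(1)
true_alpha, true_beta = 5.0, 2.0
data = rng.gamma(true_alpha, true_beta, size=100_000)

# Same slicing as above: keep samples below the histogram peak
n, bins = np.histogram(data, 100, density=True)
max_data = bins[np.argmax(n)]
sliced = data[data < max_data]

# Fit an (untruncated) gamma to the full data and to the sliced data
a_full, _, b_full = gamma.fit(data, floc=0)
a_cut, _, b_cut = gamma.fit(sliced, floc=0)

print('full  :', a_full, b_full, 'mean =', a_full * b_full)
print('sliced:', a_cut, b_cut, 'mean =', a_cut * b_cut)
```

The sliced fit ends up with a mean below `max_data`, well under the true mean of 10, because nothing in the model accounts for the missing right half; renormalizing the pdf by its CDF at `max_data`, as in Update 2, is what models the truncation.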

Update 2 (scipy.optimize.minimize):

The code below shows how `scipy.optimize.minimize()` is used to find (alpha, beta) by minimizing an energy function, namely the negative log-likelihood of a gamma distribution truncated at `max_data`.
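Written out, the energy that the following code minimizes is shown below, with m = `max_data`, f and F the gamma pdf and CDF with shape α and scale β, and x_1, …, x_N the sliced samples (all below m). This is a transcription of what `truncated_gamma` and `energy` compute, not an additional method:

```latex
f(x;\alpha,\beta) = \frac{x^{\alpha-1}\, e^{-x/\beta}}{\Gamma(\alpha)\,\beta^{\alpha}},
\qquad
f_T(x;\alpha,\beta) =
\begin{cases}
\dfrac{f(x;\alpha,\beta)}{F(m;\alpha,\beta)}, & x < m,\\[4pt]
0, & x \ge m,
\end{cases}

E(\alpha,\beta) = -\sum_{i=1}^{N} \log f_T(x_i;\alpha,\beta)
= -\sum_{i=1}^{N} \log f(x_i;\alpha,\beta) + N \log F(m;\alpha,\beta)
```

The N log F(m; α, β) term is what distinguishes this energy from the plain `gamma.fit` on the sliced data in Update 1.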

```python
import matplotlib.pyplot as plt
import numpy as np
from geotiff.io import IO
from scipy.stats import gamma
from scipy.optimize import minimize


def truncated_gamma(x, max_data, alpha, beta):
    """Gamma pdf truncated to x < max_data and renormalized by the CDF at max_data."""
    gammapdf = gamma.pdf(x, alpha, loc=0, scale=beta)
    norm = gamma.cdf(max_data, alpha, loc=0, scale=beta)
    return np.where(x < max_data, gammapdf / norm, 0)


# read image (input_file is defined elsewhere)
img = IO.read(input_file)

# calculate dB positive image
img_db = 10 * np.log10(img)
img_db_pos = img_db + abs(np.min(img_db))
data = img_db_pos.flatten() + 1

# data histogram (density=True replaces the removed normed=True)
n, bins = np.histogram(data, 100, density=True)

# using minimize on a slice of the data below the max of the histogram
max_data = bins[np.argmax(n)]
data = data[data < max_data]
# draw 1000 of the remaining samples (with replacement)
data = np.random.choice(data, 1000)

# energy = negative log-likelihood of the truncated gamma model
energy = lambda p: -np.sum(np.log(truncated_gamma(data, max_data, *p)))
initial_guess = [np.mean(data), 2.]
o = minimize(energy, initial_guess, method='SLSQP')
fit_alpha, fit_beta = o.x

# plot data histogram and model
x = np.linspace(0, 100)
y = gamma.pdf(x, fit_alpha, 0, fit_beta)
plt.hist(data, 30, density=True)
plt.plot(x, y, linewidth=2, color='g')
plt.show()
```
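A minimal sketch of the same truncated-likelihood idea on synthetic data. It differs from the code above in a few assumed choices: it works with `gamma.logpdf` and `gamma.logcdf` instead of taking `np.log` of the pdf, constrains alpha and beta to be positive through `bounds` with `method='L-BFGS-B'` (instead of SLSQP without bounds), and starts from a method-of-moments guess (alpha = mean²/var, beta = var/mean) instead of `[np.mean(data), 2.]`. The data, true parameters, and the helper `truncated_nll` are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import gamma

# Hypothetical synthetic data standing in for the dB image values
rng = np.random.default_rng(2)
true_alpha, true_beta = 5.0, 2.0
data = rng.gamma(true_alpha, true_beta, size=100_000)

# Slice below the histogram peak, as in the question
n, bins = np.histogram(data, 100, density=True)
max_data = bins[np.argmax(n)]
sliced = data[data < max_data]


def truncated_nll(params, x, m):
    """Negative log-likelihood of gamma(alpha, scale=beta) truncated to x < m."""
    alpha, beta = params
    log_pdf = gamma.logpdf(x, alpha, loc=0, scale=beta)
    log_norm = gamma.logcdf(m, alpha, loc=0, scale=beta)
    return -(np.sum(log_pdf) - x.size * log_norm)


# Method-of-moments starting point computed from the sliced samples
sample_mean, sample_var = sliced.mean(), sliced.var()
x0 = [sample_mean**2 / sample_var, sample_var / sample_mean]

res = minimize(truncated_nll, x0, args=(sliced, max_data),
               method='L-BFGS-B', bounds=[(1e-3, None), (1e-3, None)])
fit_alpha, fit_beta = res.x
print(res.success, fit_alpha, fit_beta)
```

Working in log space keeps the energy finite even when a pdf value underflows to 0 for some trial (alpha, beta), and the bounds keep the optimizer away from non-positive shape or scale values, for which `gamma.pdf` returns `nan`. Note that when only the left half of the distribution is observed, alpha and beta can be strongly correlated, so the individual estimates may be less stable than their product.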

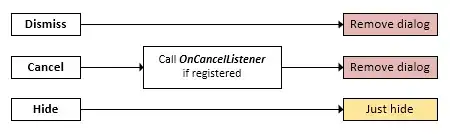

The algorithm above converged for a subset of data, and the output in o was:

x: array([ 16.66912781, 6.88105559])

But as can be seen on the screenshot below, the gamma plot doesn't fit the histogram:
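One thing worth checking when comparing the two: `plt.hist(data, 30, density=True)` is computed only from samples below `max_data`, so its bars integrate to 1 over that range, while `gamma.pdf` integrates to 1 over [0, ∞). The curve on the same scale as such a histogram is the truncated density `gamma.pdf(...) / gamma.cdf(max_data, ...)`. The sketch below checks this numerically on synthetic data with known parameters (the parameters and the fixed cut-off of 8.0 are assumptions for illustration) by comparing the histogram heights with both curves.

```python
import numpy as np
from scipy.stats import gamma

# Synthetic data with known (hypothetical) parameters
rng = np.random.default_rng(3)
alpha, beta = 5.0, 2.0
data = rng.gamma(alpha, beta, size=200_000)

# Keep only samples below a cut-off, as in the question
max_data = 8.0
sliced = data[data < max_data]

# Density histogram of the truncated samples (integrates to 1 below max_data)
heights, edges = np.histogram(sliced, bins=30, density=True)
centers = 0.5 * (edges[:-1] + edges[1:])

# Untruncated pdf vs. pdf renormalized by the CDF at the cut-off
full_pdf = gamma.pdf(centers, alpha, scale=beta)
trunc_pdf = full_pdf / gamma.cdf(max_data, alpha, scale=beta)

err_full = np.mean(np.abs(heights - full_pdf))
err_trunc = np.mean(np.abs(heights - trunc_pdf))
print('mean |hist - pdf|           :', err_full)
print('mean |hist - truncated pdf| :', err_trunc)
```

Even with the true parameters, the untruncated pdf sits well below the bars here, since only about 37% of this gamma's mass lies below 8; the truncated pdf tracks them closely.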