I'm new to scikit-lear and GMM in general... I have some problem with the fit quality of a Gaussian Mixture Model in python (scikit-learn) .

I have an array of data, which you may find at DATA HERE that I want to fit with a GMM with n = 2 components.

As benchmark I superimpose a Normal fit.

Errors/weirdness:

- setting n = 1 components, I cannot recover with GMM(1) the Normal benchmark fit

- setting n = 2 components, the Normal fit is better than GMM(2) fit

- GMM(n) seems to provide always the same fit...

Here is what I get: what I'm doing wrong here? (the picture displays the fits with GMM(2)). Thanks in advance for your help.

Code below (to run it, save data in the same folder)

from numpy import *

import pandas as pd

import matplotlib.pyplot as plt

from datetime import datetime

from collections import OrderedDict

from scipy.stats import norm

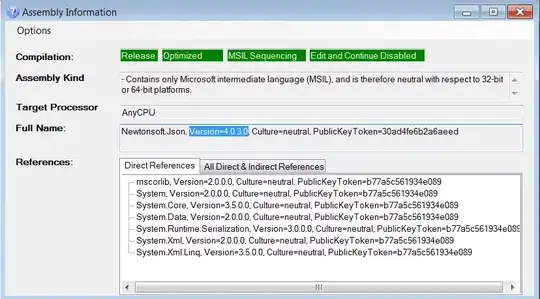

from sklearn.mixture import GMM

# Upload the data: "epsi" (array of floats)

file_xlsx = './db_X.xlsx'

data = pd.read_excel(file_xlsx)

epsi = data["epsi"].values;

t_ = len(epsi);

# Normal fit (for benchmark)

epsi_grid = arange(min(epsi),max(epsi)+0.001,0.001);

mu = mean(epsi);

sigma2 = var(epsi);

normal = norm.pdf(epsi_grid, mu, sqrt(sigma2));

# TENTATIVE - Gaussian mixture fit

gmm = GMM(n_components = 2); # fit quality doesn't improve if I set: covariance_type = 'full'

gmm.fit(reshape(epsi,(t_,1)));

gauss_mixt = exp(gmm.score(reshape(epsi_grid,(len(epsi_grid),1))));

# same result if I apply the definition of pdf of a Gaussian mixture:

# pdf_mixture = w_1 * N(mu_1, sigma_1) + w_2 * N(mu_2, sigma_2)

# as suggested in:

# http://stackoverflow.com/questions/24878729/how-to-construct-and-plot-uni-variate-gaussian-mixture-using-its-parameters-in-p

#

#gauss_mixt = array([p * norm.pdf(epsi_grid, mu, sd) for mu, sd, p in zip(gmm.means_.flatten(), sqrt(gmm.covars_.flatten()), gmm.weights_)]);

#gauss_mixt = sum(gauss_mixt, axis = 0);

# Create a figure showing the comparison between the estimated distributions

# setting the figure object

fig = plt.figure(figsize = (10,8))

fig.set_facecolor('white')

ax = plt.subplot(111)

# colors

red = [0.9, 0.3, 0.0];

grey = [0.9, 0.9, 0.9];

green = [0.2, 0.6, 0.3];

# x-axis limits

q_inf = float(pd.DataFrame(epsi).quantile(0.0025));

q_sup = float(pd.DataFrame(epsi).quantile(0.9975));

ax.set_xlim([q_inf, q_sup])

# empirical pdf of data

nb = int(10*log(t_));

ax.hist(epsi, bins = nb, normed = True, color = grey, edgecolor = 'k', label = "Empirical");

# Normal fit

ax.plot(epsi_grid, normal, color = green, lw = 1.0, label = "Normal fit");

# Gaussian Mixture fit

ax.plot(epsi_grid, gauss_mixt, color = red, lw = 1.0, label = "GMM(2)");

# title

ax.set_title("Issue: Normal fit out-performs the GMM fit?", size = 14)

# legend

ax.legend(loc='upper left');

plt.tight_layout()

plt.show()