I am using PyTesser to break a captcha. PyTesser uses tesseract python ocr library. Before putting image to PyTesser, I use some filtering. Step by step my code:

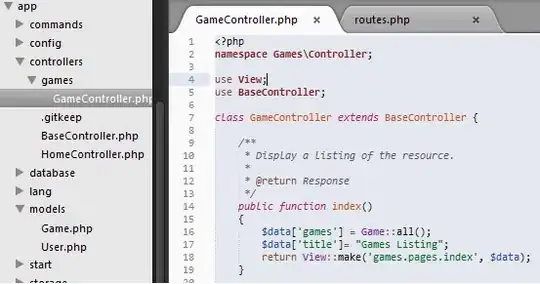

input image is:

```python
from PIL import Image

img = Image.open('1.gif')
img = img.convert("RGBA")
pixdata = img.load()

# Clean the background noise: darken pixels with a low red or blue value,
# then, if a pixel's color != black, set it to white.
for y in xrange(img.size[1]):
    for x in xrange(img.size[0]):
        if pixdata[x, y][0] < 90:
            pixdata[x, y] = (0, 0, 0, 255)

for y in xrange(img.size[1]):
    for x in xrange(img.size[0]):
        if pixdata[x, y][2] < 136:
            pixdata[x, y] = (0, 0, 0, 255)

for y in xrange(img.size[1]):
    for x in xrange(img.size[0]):
        # Index 3 is alpha, which is 255 for every opaque pixel after
        # convert("RGBA"); testing it would whiten the whole image.
        # Compare against black instead, as the comment above intends.
        if pixdata[x, y] != (0, 0, 0, 255):
            pixdata[x, y] = (255, 255, 255, 255)

img.save("input-black.gif", "GIF")
```
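Because each pass only ever turns pixels black (or, in the last pass, turns non-black pixels white), the three passes collapse into a single per-pixel rule: black if red < 90 or blue < 136, otherwise white. Below is a minimal sketch of that rule on plain RGBA tuples, so it runs without PIL. It assumes the last pass is meant to whiten every non-black pixel; `binarize_pixel` and `binarize` are hypothetical helper names, not part of PIL or PyTesser.

```python
# Hypothetical helpers: a single-pass version of the three filtering loops.
BLACK = (0, 0, 0, 255)
WHITE = (255, 255, 255, 255)


def binarize_pixel(rgba, red_max=90, blue_max=136):
    """Map one RGBA pixel to pure black or pure white.

    A pixel becomes black if its red channel is below red_max or its
    blue channel is below blue_max (the thresholds from the question);
    every other pixel becomes white.
    """
    r, g, b, a = rgba
    if r < red_max or b < blue_max:
        return BLACK
    return WHITE


def binarize(pixels):
    """Apply binarize_pixel to a 2D list of RGBA tuples (a list of rows)."""
    return [[binarize_pixel(p) for p in row] for row in pixels]


if __name__ == "__main__":
    sample = [
        [(20, 200, 200, 255), (120, 200, 100, 255)],   # low red, low blue
        [(150, 180, 160, 255), (255, 255, 255, 255)],  # both above thresholds
    ]
    for row in binarize(sample):
        print(row)
```

With PIL, the same rule could be applied to a whole image in one pass with `img.putdata([binarize_pixel(p) for p in img.getdata()])`, which avoids the three nested loops.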

After applying this code, the output is:

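Next, I enlarge the filtered image using nearest-neighbor resampling:

```python
from PIL import Image

im_orig = Image.open('input-black.gif')
big = im_orig.resize((116, 56), Image.NEAREST)

ext = ".tif"
big.save("input-NEAREST" + ext)
```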
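`Image.NEAREST` copies the nearest source pixel instead of blending neighbors, so an image that is strictly black and white stays strictly black and white after enlarging. The following is a minimal pure-Python sketch of that idea on a 2D list; `resize_nearest` is a hypothetical helper, and PIL's exact rounding may differ slightly from this pixel-center mapping for non-integer scale factors.

```python
def resize_nearest(pixels, new_w, new_h):
    """Nearest-neighbor resize of a 2D list (a list of rows of pixel values).

    Each destination pixel samples the source pixel whose area contains the
    destination pixel's center, so no new colors are introduced.
    """
    src_h = len(pixels)
    src_w = len(pixels[0])
    out = []
    for y in range(new_h):
        # Map the destination row center back into source coordinates.
        sy = min(int((y + 0.5) * src_h / new_h), src_h - 1)
        row = []
        for x in range(new_w):
            sx = min(int((x + 0.5) * src_w / new_w), src_w - 1)
            row.append(pixels[sy][sx])
        out.append(row)
    return out


if __name__ == "__main__":
    # An integer factor of 2 turns each source pixel into a 2x2 block.
    for row in resize_nearest([[0, 1], [1, 0]], 4, 4):
        print(row)
```

Because only existing values are copied, the enlarged image has the same two colors as the filtered one, which is why nearest-neighbor is a reasonable choice before OCR on a binarized image.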

After this step, the output image is:

Finally, I run PyTesser on the enlarged image:

```python
from PIL import Image
from pytesser import *

image = Image.open('input-NEAREST.tif')
print image_to_string(image)
```

Instead of the captcha text, the output I get is `%/ww`.

How can I get the correct result?

With these images, the same code recognizes the letters successfully.