I understand how belief states are updated in a POMDP. However, in the Policy and Value Function section of http://en.wikipedia.org/wiki/Partially_observable_Markov_decision_process I could not figure out how to calculate the value of V*(τ(b,a,o)) that is needed to find the optimal value function V*(b). I have read many resources on the internet, but none explains this clearly. Can someone provide a worked example with all the calculations, or a mathematically clear explanation?

2 Answers

You should check out this tutorial on POMDPs:

http://cs.brown.edu/research/ai/pomdp/tutorial/index.html

It includes a section about Value Iteration, which can be used to find an optimal policy/value function.


The link provides a good explanation of how value iteration works, but it does not give enough detail on how the value is calculated. It assumes a value has already been computed for a given action-observation pair and does not explain how that value is obtained in that belief state. – Bugs Bunny Oct 25 '14 at 22:06

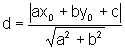

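I use the same notation as Wikipedia in this answer. First I repeat the value function as stated on Wikipedia:

$$V^*(b) = \max_{a \in A} \Big[ r(b,a) + \gamma \sum_{o \in \Omega} O(o \mid b,a)\, V^*(\tau(b,a,o)) \Big]$$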

V*(b) is the value function with the belief b as its parameter. b contains the probability of each state s, and these probabilities sum to 1:

$$\sum_{s \in S} b(s) = 1$$

r(b,a) is the reward for belief b and action a. It is computed by weighting the original reward function R(s,a), the reward for being in state s and taking action a, with the belief over each state:

$$r(b,a) = \sum_{s \in S} b(s)\, R(s,a)$$
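To make the formula concrete, here is a minimal sketch that computes r(b,a) for the classic two-state "tiger" problem (state 0: tiger behind the left door, state 1: tiger behind the right door). The model, its action names and the reward numbers are hypothetical choices for illustration, not something taken from the Wikipedia article.

```python
# Hypothetical two-state "tiger" model, used only for illustration:
# state 0 = tiger behind the left door, state 1 = tiger behind the right door.
STATES = [0, 1]

# R[a][s] = R(s, a), the immediate reward for taking action a in state s.
R = {
    "listen": [-1.0, -1.0],        # listening has a small cost
    "open-left": [-100.0, 10.0],   # opening the tiger's door is heavily penalized
    "open-right": [10.0, -100.0],
}


def belief_reward(b, a):
    """r(b, a) = sum over s of b(s) * R(s, a), the expected immediate reward."""
    return sum(b[s] * R[a][s] for s in STATES)


b = [0.5, 0.5]  # completely uncertain where the tiger is
for a in R:
    print(a, belief_reward(b, a))
```

With b = (0.5, 0.5), listening is worth -1 and opening either door is worth 0.5·(-100) + 0.5·10 = -45.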

We can also write the function O in terms of states instead of the belief b:

$$O(o \mid b,a) = \sum_{s' \in S} O(o \mid s',a) \sum_{s \in S} T(s' \mid s,a)\, b(s)$$

This is the probability of observing o given belief b and action a. Note that O and T are probability functions.
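The double sum can be computed directly: first predict where the system ends up after action a, then weight each resulting state by how likely it is to produce o. The sketch below assumes a hypothetical "listen" action from the tiger problem, where the tiger never moves and the growl is heard on the correct side 85% of the time; the table names `T` and `O` and their index order are illustrative choices.

```python
STATES = [0, 1]
OBSERVATIONS = ["hear-left", "hear-right"]

# Hypothetical model. T[a][s][s2] = T(s2 | s, a): listening never moves the tiger.
T = {"listen": [[1.0, 0.0], [0.0, 1.0]]}

# O[a][s2][o] = O(o | s2, a): the growl is heard on the correct side 85% of the time.
O = {"listen": [{"hear-left": 0.85, "hear-right": 0.15},
                {"hear-left": 0.15, "hear-right": 0.85}]}


def obs_prob(o, b, a):
    """O(o | b, a) = sum_{s2} O(o | s2, a) * sum_s T(s2 | s, a) * b(s)."""
    total = 0.0
    for s2 in STATES:
        predicted = sum(T[a][s][s2] * b[s] for s in STATES)  # P(s2 | b, a)
        total += O[a][s2][o] * predicted
    return total


b = [0.85, 0.15]
for o in OBSERVATIONS:
    print(o, obs_prob(o, b, "listen"))
```

For b = (0.85, 0.15) the hand calculation is 0.85·0.85 + 0.15·0.15 = 0.745 for hear-left and 0.255 for hear-right; the two probabilities sum to 1, as they must.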

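Finally, the function τ(b,a,o) gives the new belief state b' = τ(b,a,o) from the previous belief b, action a and observation o. For each state s' the new probability is:

$$b'(s') = \eta\, O(o \mid s',a) \sum_{s \in S} T(s' \mid s,a)\, b(s), \qquad \eta = \frac{1}{O(o \mid b,a)}$$

where the normalizing constant η makes the new belief sum to 1.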
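The update is the same computation as the observation probability, except that the per-state terms are kept and divided by their sum. A minimal sketch, again assuming a hypothetical "listen" action from the tiger problem (tables `T` and `O` are illustrative):

```python
STATES = [0, 1]

# Hypothetical "listen" model: T[a][s][s2] = T(s2 | s, a), O[a][s2][o] = O(o | s2, a).
T = {"listen": [[1.0, 0.0], [0.0, 1.0]]}
O = {"listen": [{"hear-left": 0.85, "hear-right": 0.15},
                {"hear-left": 0.15, "hear-right": 0.85}]}


def update_belief(b, a, o):
    """tau(b, a, o): b'(s2) = eta * O(o | s2, a) * sum_s T(s2 | s, a) * b(s)."""
    unnormalized = [O[a][s2][o] * sum(T[a][s][s2] * b[s] for s in STATES)
                    for s2 in STATES]
    norm = sum(unnormalized)  # this sum is O(o | b, a), so eta = 1 / norm
    if norm == 0.0:
        raise ValueError("observation o has zero probability under (b, a)")
    return [p / norm for p in unnormalized]


b0 = [0.5, 0.5]
b1 = update_belief(b0, "listen", "hear-left")  # about [0.85, 0.15]
b2 = update_belief(b1, "listen", "hear-left")  # about [0.970, 0.030]
print(b1, b2)
```

Hearing the growl on the left twice moves the belief from (0.5, 0.5) to (0.85, 0.15) and then to (0.7225/0.745, 0.0225/0.745), roughly (0.970, 0.030).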

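The new belief b' is what goes into the term V*(τ(b,a,o)). Since V* appears on both sides of the equation, it cannot be evaluated directly; instead it is computed iteratively. Starting from an initial estimate such as V_0(b) = 0, each step evaluates the right-hand side with the previous estimate:

$$V_n(b) = \max_{a \in A} \Big[ r(b,a) + \gamma \sum_{o \in \Omega} O(o \mid b,a)\, V_{n-1}(\tau(b,a,o)) \Big]$$

so V_{n-1}(τ(b,a,o)) is obtained by applying the same formula at the belief b', with one step less to go.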
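For short horizons the recursion can be evaluated literally: compute r(b,a), then for every observation compute O(o|b,a) and τ(b,a,o), and recurse with n - 1. The sketch below does exactly that for a hypothetical tiger model (opening a door resets the tiger uniformly and gives an uninformative observation) with an assumed discount γ = 0.95. It is meant to show where each number comes from, not to be an efficient solver.

```python
GAMMA = 0.95  # hypothetical discount factor
STATES = [0, 1]
OBSERVATIONS = ["hear-left", "hear-right"]
ACTIONS = ["listen", "open-left", "open-right"]

# Hypothetical tiger model. R[a][s] = R(s, a); T[a][s][s2] = T(s2 | s, a);
# O[a][s2][o] = O(o | s2, a).
HEAR = [{"hear-left": 0.85, "hear-right": 0.15},
        {"hear-left": 0.15, "hear-right": 0.85}]
NOISE = [{"hear-left": 0.5, "hear-right": 0.5}] * 2  # uninformative observation
STAY = [[1.0, 0.0], [0.0, 1.0]]   # listening leaves the tiger where it is
RESET = [[0.5, 0.5], [0.5, 0.5]]  # opening a door restarts the problem
R = {"listen": [-1.0, -1.0], "open-left": [-100.0, 10.0], "open-right": [10.0, -100.0]}
T = {"listen": STAY, "open-left": RESET, "open-right": RESET}
O = {"listen": HEAR, "open-left": NOISE, "open-right": NOISE}


def value(b, n):
    """V_n(b) = max_a [ r(b,a) + GAMMA * sum_o O(o|b,a) * V_{n-1}(tau(b,a,o)) ], V_0 = 0."""
    if n == 0:
        return 0.0
    best = float("-inf")
    for a in ACTIONS:
        q = sum(b[s] * R[a][s] for s in STATES)  # r(b, a)
        for o in OBSERVATIONS:
            unnorm = [O[a][s2][o] * sum(T[a][s][s2] * b[s] for s in STATES)
                      for s2 in STATES]
            p_o = sum(unnorm)  # O(o | b, a)
            if p_o > 0.0:  # skip observations that cannot occur
                b_next = [p / p_o for p in unnorm]  # tau(b, a, o)
                q += GAMMA * p_o * value(b_next, n - 1)
        best = max(best, q)
    return best


for n in range(4):
    print(n, value([0.5, 0.5], n))
```

For n = 2 and b = (0.5, 0.5) the hand calculation is short. Every V_1 value needed is -1, because listening beats opening a door at the beliefs (0.85, 0.15), (0.15, 0.85) and (0.5, 0.5). So listening is worth -1 + 0.95·(0.5·(-1) + 0.5·(-1)) = -1.95, opening a door is worth -45 + 0.95·(-1) = -45.95, and V_2(b) = -1.95. The recursion visits on the order of (|A|·|Ω|)^n beliefs, so it only works for short horizons.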

The optimal value function can be approximated with, for example, value iteration, which applies dynamic programming: the function is updated repeatedly until the difference between successive estimates is smaller than a small value ε.
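Exact POMDP value iteration represents each V_n as the maximum of a finite set of linear functions of b (α-vectors), which is more than fits in a short example. A simpler approximation that still shows the update-until-ε loop is value iteration on a grid of beliefs. For a hypothetical two-state tiger model a belief is a single number p = b(s_0), V is stored at N + 1 grid points, and V(τ(b,a,o)) is read off by linear interpolation. The grid size N, γ and ε are assumptions, and this grid method is a swapped-in approximation, not the exact algorithm.

```python
GAMMA, EPS, N = 0.95, 1e-6, 50  # hypothetical discount, tolerance, grid size
STATES = [0, 1]
OBSERVATIONS = ["hear-left", "hear-right"]
ACTIONS = ["listen", "open-left", "open-right"]

# Hypothetical tiger model: R[a][s] = R(s, a), T[a][s][s2] = T(s2 | s, a), O[a][s2][o] = O(o | s2, a).
HEAR = [{"hear-left": 0.85, "hear-right": 0.15},
        {"hear-left": 0.15, "hear-right": 0.85}]
NOISE = [{"hear-left": 0.5, "hear-right": 0.5}] * 2
STAY = [[1.0, 0.0], [0.0, 1.0]]
RESET = [[0.5, 0.5], [0.5, 0.5]]
R = {"listen": [-1.0, -1.0], "open-left": [-100.0, 10.0], "open-right": [10.0, -100.0]}
T = {"listen": STAY, "open-left": RESET, "open-right": RESET}
O = {"listen": HEAR, "open-left": NOISE, "open-right": NOISE}


def interpolate(V, p):
    """Approximate V at belief p = b(state 0) by linear interpolation on the grid."""
    x = min(max(p, 0.0), 1.0) * N
    i = min(int(x), N - 1)
    w = x - i
    return (1.0 - w) * V[i] + w * V[i + 1]


def backup(V, p):
    """One Bellman backup at belief (p, 1 - p), using V for V(tau(b, a, o))."""
    b = [p, 1.0 - p]
    best = float("-inf")
    for a in ACTIONS:
        q = sum(b[s] * R[a][s] for s in STATES)  # r(b, a)
        for o in OBSERVATIONS:
            unnorm = [O[a][s2][o] * sum(T[a][s][s2] * b[s] for s in STATES)
                      for s2 in STATES]
            p_o = sum(unnorm)  # O(o | b, a)
            if p_o > 0.0:
                q += GAMMA * p_o * interpolate(V, unnorm[0] / p_o)
        best = max(best, q)
    return best


def value_iteration():
    """Repeat backups over the whole grid until the largest change is below EPS."""
    V = [0.0] * (N + 1)  # V_0 = 0
    while True:
        V_new = [backup(V, i / N) for i in range(N + 1)]
        delta = max(abs(x - y) for x, y in zip(V_new, V))
        V = V_new
        if delta < EPS:
            return V


V = value_iteration()
print(V[0], V[N // 2], V[N])
```

Because linear interpolation is a convex combination of grid values, each sweep is a γ-contraction, so the loop is guaranteed to stop. As expected, being certain where the tiger is (p = 0 or p = 1) is worth more than the uniform belief p = 0.5.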

There is a lot more information on POMDPs, for example:

- Sebastian Thrun, Wolfram Burgard, and Dieter Fox. 2005. Probabilistic Robotics (Intelligent Robotics and Autonomous Agents). The MIT Press.

- A brief introduction to reinforcement learning

- A POMDP Tutorial

- Reinforcement Learning and Markov Decision Processes
