I'm currently working on an application that displays many images one after another. Unfortunately, I don't have the luxury of using video for this; however, I can choose which image codec is used. The data is sent from the server to the application already encoded.

If I use PNG or JPEG, for example, I can convert the data I receive into a UIImage using [[UIImage alloc] initWithData:some_data]. When I use a raw byte array, or a custom codec that has to decode to a raw byte array first, I have to create a bitmap context and call CGBitmapContextCreateImage(bitmapContext) to get a CGImageRef, which is then passed to [[UIImage alloc] initWithCGImage:cg_image]. This is much slower.
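For reference, a tightly packed 8-bit RGBA frame maps onto the bitmap context parameters as bitsPerComponent = 8 and bytesPerRow = width * 4, and the buffer must hold at least bytesPerRow * height bytes. The helper below is a minimal, hypothetical sketch (the names `RawFrameLayout` and `raw_frame_layout` are illustrative, not from the original code) that computes those values and rejects short or overflowing frames before they would be handed to CGBitmapContextCreate. It assumes no row padding, which is an assumption about the server's format.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical description of a tightly packed 8-bit RGBA frame: the values
   one would pass to CGBitmapContextCreate as bitsPerComponent and bytesPerRow. */
typedef struct {
    size_t width;
    size_t height;
    size_t bits_per_component; /* 8: one byte per channel */
    size_t bytes_per_pixel;    /* 4: R, G, B, A */
    size_t bytes_per_row;      /* width * bytes_per_pixel, assuming no padding */
    size_t total_bytes;        /* bytes_per_row * height */
} RawFrameLayout;

/* Fills *out and returns true if data_len bytes can hold a width x height
   RGBA frame; returns false for a zero dimension, size_t overflow, or short data. */
static bool raw_frame_layout(size_t width, size_t height, size_t data_len,
                             RawFrameLayout *out) {
    const size_t bytes_per_pixel = 4;
    if (width == 0 || height == 0) return false;
    if (width > SIZE_MAX / bytes_per_pixel) return false; /* row size overflow */
    size_t row = width * bytes_per_pixel;
    if (height > SIZE_MAX / row) return false;            /* total size overflow */
    size_t total = row * height;
    if (data_len < total) return false;                   /* frame truncated */

    out->width = width;
    out->height = height;
    out->bits_per_component = 8;
    out->bytes_per_pixel = bytes_per_pixel;
    out->bytes_per_row = row;
    out->total_bytes = total;
    return true;
}
```

For a 640 x 480 frame this gives bytes_per_row = 2560 and total_bytes = 1228800.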

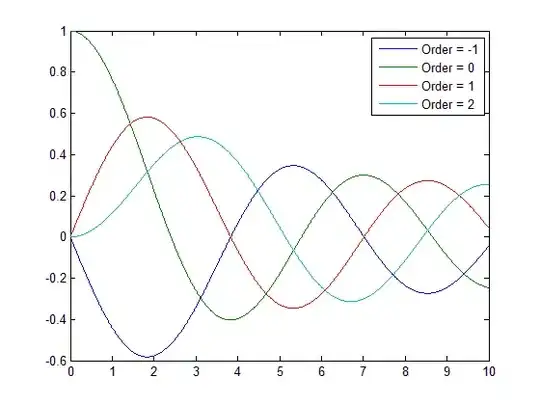

The chart above shows the time, in seconds, it takes to convert NSData to a UIImage. PNG, JPEG, BMP, and GIF all take approximately the same time. Null skips the conversion entirely and simply returns nil. Raw is a raw RGBA byte array converted using the bitmap context method. The custom codec decompresses into the Raw format and then does the same thing. LZ4 is the raw data compressed with the LZ4 algorithm, so it also goes through the bitmap context method.

PNG images, for example, are simply compressed bitmaps. Yet decompressing and then rendering a PNG takes less time than my render for Raw images, so iOS must be doing something behind the scenes to make this faster.

If we chart both how long it takes to convert each type and how long it takes to draw it (to a graphics context), we get the following:

The formats differ widely in conversion time but take roughly the same time to draw. This rules out the possibility that UIImage is simply being lazy and deferring the real work until the image is drawn.

My question is essentially: are the faster speeds of the well-known codecs something I can exploit? Or, if not, is there another way I can render my Raw data faster?

Edit: For the record, I draw each new image on top of another UIImage as it arrives. There may be a faster alternative, and I am willing to look into it. However, OpenGL is unfortunately not an option.

Further edit: This question is fairly important and I would like the best possible answer. The bounty will not be awarded until the time expires to ensure the best possible answers are given.

Final edit: My question was why decompressing and drawing a raw RGBA array isn't faster than drawing a PNG, for example, given that a PNG also has to be decompressed to an RGBA array and then drawn. It turns out that it is in fact faster, but only in release builds. Debug builds are not optimised, whereas the UIImage code that runs behind the scenes clearly is. Compiled as a release build, the RGBA array images were much faster than the other codecs.
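As an illustration of the kind of hand-written per-pixel loop that sits on the Raw and custom-codec paths, and whose speed depends heavily on whether it is compiled with optimisations, here is a hypothetical straight-to-premultiplied alpha conversion. It is only relevant under an assumption not stated in the question: that the server sends non-premultiplied RGBA, since Quartz bitmap contexts accept 8-bit RGBA only with premultiplied or skipped alpha (for example kCGImageAlphaPremultipliedLast). The function name `premultiply_rgba8` is illustrative. Loops like this can run considerably slower at -O0 (the usual debug setting) than at -O2 or -Os, whereas the system's PNG and JPEG decoders ship already optimised.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: converts straight (non-premultiplied) RGBA8 pixels to
   premultiplied RGBA8 in place, rounding each colour channel to the nearest
   value of c * a / 255. Alpha is left unchanged. */
static void premultiply_rgba8(uint8_t *pixels, size_t pixel_count) {
    for (size_t i = 0; i < pixel_count; i++) {
        uint8_t *p = pixels + i * 4;
        unsigned a = p[3];
        if (a == 255) continue; /* fully opaque: colour channels unchanged */
        for (int c = 0; c < 3; c++) {
            /* (x + 127) / 255 rounds x / 255 to nearest for 0 <= x <= 65025 */
            p[c] = (uint8_t)((p[c] * a + 127) / 255);
        }
    }
}
```

Usage: call it once on each decoded frame before creating the bitmap context, e.g. premultiply_rgba8(buffer, width * height).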