I've been trying to implement morph target animation in OpenGL with facial blendshapes by following this tutorial. The vertex shader for the animation looks something like this:

    #version 400 core

    in vec3 vNeutral;
    in vec3 vSmile_L;
    in vec3 nNeutral;
    in vec3 nSmile_L;
    in vec3 vSmile_R;
    in vec3 nSmile_R;

    uniform float left;
    uniform float right;
    uniform float top;
    uniform float bottom;
    uniform float near;
    uniform float far;

    uniform vec3 cameraPosition;
    uniform vec3 lookAtPosition;
    uniform vec3 upVector;

    uniform vec4 lightPosition;

    out vec3 lPos;
    out vec3 vPos;
    out vec3 vNorm;

    uniform vec3 pos;
    uniform vec3 size;
    uniform mat4 quaternion;

    uniform float smile_w;

    void main(){
        //float smile_l_w = 0.9;
        float neutral_w = 1 - 2 * smile_w;
        clamp(neutral_w, 0.0, 1.0);

        vec3 vPosition = neutral_w * vNeutral + smile_w * vSmile_L + smile_w * vSmile_R;
        vec3 vNormal = neutral_w * nNeutral + smile_w * nSmile_L + smile_w * nSmile_R;
        //vec3 vPosition = vNeutral + (vSmile_L - vNeutral) * smile_w;
        //vec3 vNormal = nNeutral + (nSmile_L - nNeutral) * smile_w;

        normalize(vPosition);
        normalize(vNormal);

        mat4 translate = mat4(1.0,   0.0,   0.0,   0.0,
                              0.0,   1.0,   0.0,   0.0,
                              0.0,   0.0,   1.0,   0.0,
                              pos.x, pos.y, pos.z, 1.0);

        mat4 scale = mat4(size.x, 0.0,    0.0,    0.0,
                          0.0,    size.y, 0.0,    0.0,
                          0.0,    0.0,    size.z, 0.0,
                          0.0,    0.0,    0.0,    1.0);

        mat4 model = translate * scale * quaternion;

        vec3 n = normalize(cameraPosition - lookAtPosition);
        vec3 u = normalize(cross(upVector, n));
        vec3 v = cross(n, u);

        mat4 view = mat4(u.x, v.x, n.x, 0,
                         u.y, v.y, n.y, 0,
                         u.z, v.z, n.z, 0,
                         dot(-u, cameraPosition), dot(-v, cameraPosition), dot(-n, cameraPosition), 1);

        mat4 modelView = view * model;

        float p11 = ((2.0*near)/(right-left));
        float p31 = ((right+left)/(right-left));
        float p22 = ((2.0*near)/(top-bottom));
        float p32 = ((top+bottom)/(top-bottom));
        float p33 = -((far+near)/(far-near));
        float p43 = -((2.0*far*near)/(far-near));

        mat4 projection = mat4(p11, 0,   0,   0,
                               0,   p22, 0,   0,
                               p31, p32, p33, -1,
                               0,   0,   p43, 0);

        //lighting calculation
        vec4 vertexInEye = modelView * vec4(vPosition, 1.0);
        vec4 lightInEye = view * lightPosition;
        vec4 normalInEye = normalize(modelView * vec4(vNormal, 0.0));

        lPos = lightInEye.xyz;
        vPos = vertexInEye.xyz;
        vNorm = normalInEye.xyz;

        gl_Position = projection * modelView * vec4(vPosition, 1.0);
    }

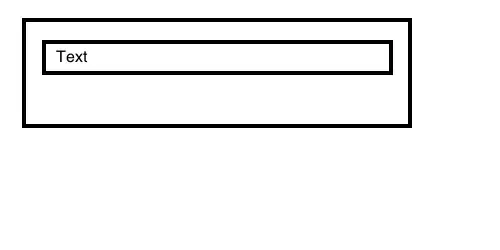

Although the morph target blending itself works, some faces are missing from the final blended shape. The animation looks roughly like the following GIF.

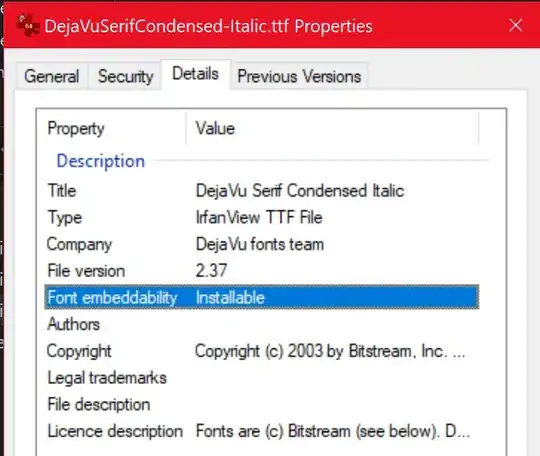

The blendshapes were exported from FaceShift, a markerless facial animation package.

However, the same algorithm works perfectly on a plain cuboid and its twisted blend shape, both created in Blender.

Could something be wrong with the blendshapes I am using for the facial animation, or am I doing something wrong in the vertex shader?
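One way to narrow this down is to check the exported data before it reaches the shader. Morph targets only blend sensibly when every target lists the same vertices in the same order as the neutral mesh, so a loader or exporter that reorders or merges vertices per file would break the blend. The following is a minimal CPU-side sketch of such a check; `Vec3`, `TargetDiff`, and `compareTargets` are hypothetical names, and the positions are assumed to be already loaded into plain arrays.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3 = std::array<float, 3>;

// Result of comparing one blendshape target against the neutral mesh.
struct TargetDiff {
    bool sameVertexCount;    // false means the two meshes cannot be blended per vertex
    float maxDisplacement;   // largest distance any vertex moves between the meshes
    std::size_t maxIndex;    // index of the vertex that moves the most
};

// Hypothetical helper: compares vertex i of the target with vertex i of the neutral mesh.
TargetDiff compareTargets(const std::vector<Vec3>& neutral,
                          const std::vector<Vec3>& target) {
    TargetDiff diff{neutral.size() == target.size(), 0.0f, 0};
    if (!diff.sameVertexCount) {
        return diff;  // pairing vertex i with vertex i is meaningless here
    }
    for (std::size_t i = 0; i < neutral.size(); ++i) {
        float dx = target[i][0] - neutral[i][0];
        float dy = target[i][1] - neutral[i][1];
        float dz = target[i][2] - neutral[i][2];
        float dist = std::sqrt(dx * dx + dy * dy + dz * dz);
        if (dist > diff.maxDisplacement) {
            diff.maxDisplacement = dist;
            diff.maxIndex = i;
        }
    }
    return diff;
}
```

If the largest displacement is comparable to the size of the whole head rather than the size of a mouth corner, the vertex order of the two files probably does not match.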

--------------------------------------------------------------Update----------------------------------------------------------

As suggested, I made the required changes to the vertex shader and rendered a new animation, but I am still getting the same results.

Here's the updated vertex shader code:

    #version 400 core

    in vec3 vNeutral;
    in vec3 vSmile_L;
    in vec3 nNeutral;
    in vec3 nSmile_L;
    in vec3 vSmile_R;
    in vec3 nSmile_R;

    uniform float left;
    uniform float right;
    uniform float top;
    uniform float bottom;
    uniform float near;
    uniform float far;

    uniform vec3 cameraPosition;
    uniform vec3 lookAtPosition;
    uniform vec3 upVector;

    uniform vec4 lightPosition;

    out vec3 lPos;
    out vec3 vPos;
    out vec3 vNorm;

    uniform vec3 pos;
    uniform vec3 size;
    uniform mat4 quaternion;

    uniform float smile_w;

    void main(){
        float neutral_w = 1.0 - smile_w;
        float neutral_f = clamp(neutral_w, 0.0, 1.0);

        vec3 vPosition = neutral_f * vNeutral + smile_w/2 * vSmile_L + smile_w/2 * vSmile_R;
        vec3 vNormal = neutral_f * nNeutral + smile_w/2 * nSmile_L + smile_w/2 * nSmile_R;

        mat4 translate = mat4(1.0,   0.0,   0.0,   0.0,
                              0.0,   1.0,   0.0,   0.0,
                              0.0,   0.0,   1.0,   0.0,
                              pos.x, pos.y, pos.z, 1.0);

        mat4 scale = mat4(size.x, 0.0,    0.0,    0.0,
                          0.0,    size.y, 0.0,    0.0,
                          0.0,    0.0,    size.z, 0.0,
                          0.0,    0.0,    0.0,    1.0);

        mat4 model = translate * scale * quaternion;

        vec3 n = normalize(cameraPosition - lookAtPosition);
        vec3 u = normalize(cross(upVector, n));
        vec3 v = cross(n, u);

        mat4 view = mat4(u.x, v.x, n.x, 0,
                         u.y, v.y, n.y, 0,
                         u.z, v.z, n.z, 0,
                         dot(-u, cameraPosition), dot(-v, cameraPosition), dot(-n, cameraPosition), 1);

        mat4 modelView = view * model;

        float p11 = ((2.0*near)/(right-left));
        float p31 = ((right+left)/(right-left));
        float p22 = ((2.0*near)/(top-bottom));
        float p32 = ((top+bottom)/(top-bottom));
        float p33 = -((far+near)/(far-near));
        float p43 = -((2.0*far*near)/(far-near));

        mat4 projection = mat4(p11, 0,   0,   0,
                               0,   p22, 0,   0,
                               p31, p32, p33, -1,
                               0,   0,   p43, 0);

        //lighting calculation
        vec4 vertexInEye = modelView * vec4(vPosition, 1.0);
        vec4 lightInEye = view * lightPosition;
        vec4 normalInEye = normalize(modelView * vec4(vNormal, 0.0));

        lPos = lightInEye.xyz;
        vPos = vertexInEye.xyz;
        vNorm = normalInEye.xyz;

        gl_Position = projection * modelView * vec4(vPosition, 1.0);
    }

Also, my fragment shader looks something like this (compared to the earlier version, I only added new material settings):

    #version 400 core

    uniform vec4 lightColor;
    uniform vec4 diffuseColor;

    in vec3 lPos;
    in vec3 vPos;
    in vec3 vNorm;

    void main(){
        //copper like material light settings
        vec4 ambient = vec4(0.19125, 0.0735, 0.0225, 1.0);
        vec4 diff = vec4(0.7038, 0.27048, 0.0828, 1.0);
        vec4 spec = vec4(0.256777, 0.137622, 0.086014, 1.0);

        vec3 L = normalize (lPos - vPos);
        vec3 N = normalize (vNorm);
        vec3 Emissive = normalize(-vPos);
        vec3 R = reflect(-L, N);

        float dotProd = max(dot(R, Emissive), 0.0);
        vec4 specColor = lightColor*spec*pow(dotProd,0.1 * 128);
        vec4 diffuse = lightColor * diff * (dot(N, L));

        gl_FragColor = ambient + diffuse + specColor;
    }

And finally the animation I got from updating the code:

As you can see, I am still getting some missing triangles/faces in the morph target animation. Any more suggestions/comments regarding the issue would be really helpful. Thanks again in advance. :)

Update:

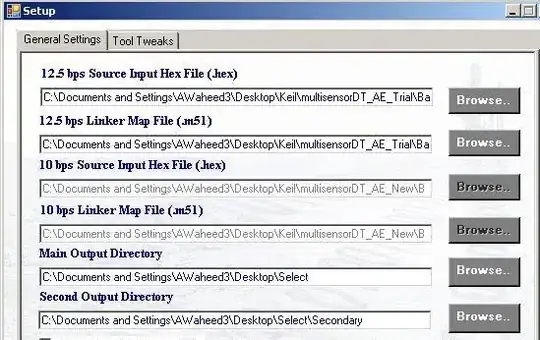

So, as suggested, I flipped the normals wherever dot(vSmile_R, nSmile_R) < 0, and I got the result shown in the image above.
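The flip described here can be sketched on the CPU as follows. This is a minimal illustration, not the code actually used; `flipInwardNormals` is a hypothetical name. Note the assumption built into the test: `dot(position, normal) < 0` only means "pointing inward" when the mesh is roughly centered on the origin, and on concave regions such as eye sockets or the inside of the mouth it can flip normals that were already correct.

```cpp
#include <array>
#include <cstddef>
#include <vector>

using Vec3 = std::array<float, 3>;

float dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Negates every normal that points toward the origin instead of away from it.
// Assumes the mesh is roughly centered on the origin. Returns the number of flips.
std::size_t flipInwardNormals(const std::vector<Vec3>& positions,
                              std::vector<Vec3>& normals) {
    std::size_t flipped = 0;
    for (std::size_t i = 0; i < positions.size() && i < normals.size(); ++i) {
        if (dot(positions[i], normals[i]) < 0.0f) {
            for (float& c : normals[i]) {
                c = -c;
            }
            ++flipped;
        }
    }
    return flipped;
}
```

The returned count is a quick indicator: if a large share of the normals gets flipped, the winding order or the normal export of that target is probably inconsistent with the neutral mesh.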

Also, instead of taking the normals from the OBJ files, I tried calculating my own (face and vertex normals), and I still got the same result.
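For context, one common way to compute such normals is to accumulate unnormalized face normals per vertex and normalize at the end. The sketch below assumes an indexed triangle list with counter-clockwise winding; `computeVertexNormals` and the helper names are hypothetical, and this is not necessarily the exact method used above.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3 = std::array<float, 3>;

Vec3 sub(const Vec3& a, const Vec3& b) {
    return {a[0] - b[0], a[1] - b[1], a[2] - b[2]};
}

Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]};
}

// Area-weighted vertex normals for an indexed triangle list.
// `indices` holds three vertex indices per triangle (counter-clockwise assumed).
std::vector<Vec3> computeVertexNormals(const std::vector<Vec3>& positions,
                                       const std::vector<unsigned>& indices) {
    std::vector<Vec3> normals(positions.size(), Vec3{0.0f, 0.0f, 0.0f});
    for (std::size_t t = 0; t + 2 < indices.size(); t += 3) {
        unsigned i0 = indices[t];
        unsigned i1 = indices[t + 1];
        unsigned i2 = indices[t + 2];
        // Unnormalized face normal: its length is twice the triangle area,
        // so larger triangles contribute more to the vertex normal.
        Vec3 faceNormal = cross(sub(positions[i1], positions[i0]),
                                sub(positions[i2], positions[i0]));
        for (unsigned idx : {i0, i1, i2}) {
            for (int c = 0; c < 3; ++c) {
                normals[idx][c] += faceNormal[c];
            }
        }
    }
    for (Vec3& n : normals) {
        float len = std::sqrt(n[0] * n[0] + n[1] * n[1] + n[2] * n[2]);
        if (len > 0.0f) {
            for (float& c : n) {
                c /= len;
            }
        }
    }
    return normals;
}
```

For morph targets, the same index list would be used with each target's positions, so that every target gets normals for the same vertices in the same order.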